In this tutorial, we explore advanced computer vision techniques using TorchVision’s v2 transforms, modern augmentation strategies, and training enhancements. We walk through building an augmentation pipeline, applying MixUp and CutMix, designing a modern CNN with attention, and implementing a robust training loop. Everything runs in Google Colab, so we can understand and apply these deep learning practices with clarity and efficiency. Check out the FULL CODES here.

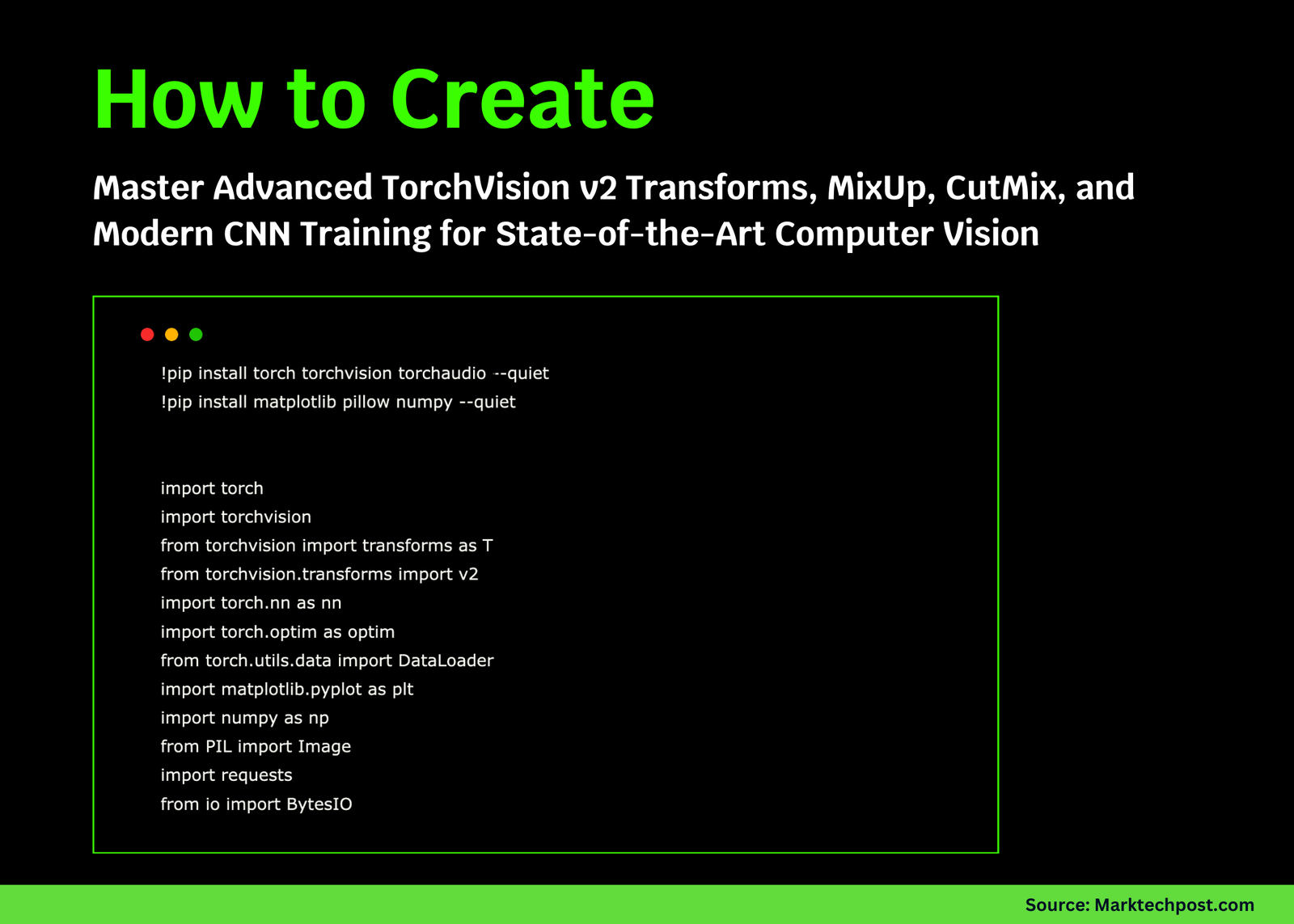

!pip install torch torchvision torchaudio --quiet
!pip install matplotlib pillow numpy --quiet

import torch
import torchvision
from torchvision import transforms as T
from torchvision.transforms import v2
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
import requests
from io import BytesIO

print(f"PyTorch version: {torch.__version__}")
print(f"TorchVision version: {torchvision.__version__}")

We begin by installing the libraries and importing the essential modules for our workflow. We set up PyTorch, TorchVision v2 transforms, and supporting tools like NumPy, PIL, and Matplotlib, so we’re ready to build and test advanced computer vision pipelines.

class AdvancedAugmentationPipeline:
    def __init__(self, image_size=224, training=True):
        self.image_size = image_size
        self.training = training
        # Convert PIL/ndarray input to a uint8 image tensor before augmenting.
        base_transforms = [
            v2.ToImage(),
            v2.ToDtype(torch.uint8, scale=True),
        ]
        if training:
            # Training branch: geometric and photometric augmentations.
            self.transform = v2.Compose([
                *base_transforms,
                v2.Resize((image_size + 32, image_size + 32)),
                v2.RandomResizedCrop(image_size, scale=(0.8, 1.0), ratio=(0.9, 1.1)),
                v2.RandomHorizontalFlip(p=0.5),
                v2.RandomRotation(degrees=15),
                v2.ColorJitter(brightness=0.4, contrast=0.4, saturation=0.4, hue=0.1),
                v2.RandomGrayscale(p=0.1),
                v2.GaussianBlur(kernel_size=3, sigma=(0.1, 2.0)),
                v2.RandomPerspective(distortion_scale=0.1, p=0.3),
                v2.RandomAffine(degrees=10, translate=(0.1, 0.1), scale=(0.9, 1.1)),
                v2.ToDtype(torch.float32, scale=True),
                # ImageNet channel statistics.
                v2.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
            ])
        else:
            # Validation branch: deterministic resize and normalization only.
            self.transform = v2.Compose([
                *base_transforms,
                v2.Resize((image_size, image_size)),
                v2.ToDtype(torch.float32, scale=True),
                v2.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
            ])

    def __call__(self, image):
        return self.transform(image)

We define an advanced augmentation pipeline that adapts to both training and validation modes. During training, we apply TorchVision v2 transforms such as cropping, flipping, color jittering, blurring, perspective, and affine transformations, while keeping validation preprocessing simple with resizing and normalization. This way, we enrich the training data for better generalization while keeping evaluation consistent and stable.

class AdvancedMixupCutmix:
    def __init__(self, mixup_alpha=1.0, cutmix_alpha=1.0, prob=0.5):
        self.mixup_alpha = mixup_alpha
        self.cutmix_alpha = cutmix_alpha
        self.prob = prob

    def mixup(self, x, y):
        batch_size = x.size(0)
        # Mixing coefficient drawn from Beta(alpha, alpha).
        lam = float(np.random.beta(self.mixup_alpha, self.mixup_alpha)) if self.mixup_alpha > 0 else 1.0
        index = torch.randperm(batch_size)
        # Blend each image with a randomly chosen partner from the same batch.
        mixed_x = lam * x + (1 - lam) * x[index, :]
        y_a, y_b = y, y[index]
        return mixed_x, y_a, y_b, lam

    def cutmix(self, x, y):
        batch_size = x.size(0)
        lam = float(np.random.beta(self.cutmix_alpha, self.cutmix_alpha)) if self.cutmix_alpha > 0 else 1.0
        index = torch.randperm(batch_size)
        y_a, y_b = y, y[index]
        bbx1, bby1, bbx2, bby2 = self._rand_bbox(x.size(), lam)
        # Note: the patch is pasted into x in place.
        x[:, :, bbx1:bbx2, bby1:bby2] = x[index, :, bbx1:bbx2, bby1:bby2]
        # Recompute lam from the exact fraction of pixels that were replaced.
        lam = float(1 - ((bbx2 - bbx1) * (bby2 - bby1) / (x.size()[-1] * x.size()[-2])))
        return x, y_a, y_b, lam

    def _rand_bbox(self, size, lam):
        # size is (N, C, H, W); W and H here name dims 2 and 3, which is
        # consistent with how cutmix() slices those two dims.
        W = size[2]
        H = size[3]
        cut_rat = np.sqrt(1. - lam)
        cut_w = int(W * cut_rat)
        cut_h = int(H * cut_rat)
        cx = np.random.randint(W)
        cy = np.random.randint(H)
        bbx1 = np.clip(cx - cut_w // 2, 0, W)
        bby1 = np.clip(cy - cut_h // 2, 0, H)
        bbx2 = np.clip(cx + cut_w // 2, 0, W)
        bby2 = np.clip(cy + cut_h // 2, 0, H)
        return bbx1, bby1, bbx2, bby2

    def __call__(self, x, y):
        # With probability (1 - prob), return the batch unchanged.
        if np.random.random() > self.prob:
            return x, y, y, 1.0
        # Otherwise choose MixUp or CutMix with equal probability.
        if np.random.random() < 0.5:
            return self.mixup(x, y)
        else:
            return self.cutmix(x, y)
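The label weight in CutMix depends on how much of the box survives clipping at the image border. The following NumPy-only sketch reproduces the box arithmetic for a fixed center so the numbers can be checked by hand. The function name `cutmix_box` and the fixed centers are illustrative assumptions; the class above samples the center at random.

```python
import numpy as np

def cutmix_box(height, width, lam, cy, cx):
    """Hypothetical helper: box for a given center, plus the adjusted lambda."""
    cut_ratio = np.sqrt(1.0 - lam)  # side ratio so the box area is about (1 - lam)
    cut_h, cut_w = int(height * cut_ratio), int(width * cut_ratio)
    y1, y2 = np.clip([cy - cut_h // 2, cy + cut_h // 2], 0, height)
    x1, x2 = np.clip([cx - cut_w // 2, cx + cut_w // 2], 0, width)
    # Recompute lambda from the pixels actually replaced after clipping.
    lam_adj = 1.0 - (y2 - y1) * (x2 - x1) / (height * width)
    return (int(y1), int(x1), int(y2), int(x2)), float(lam_adj)

# Box fully inside a 224x224 image: the adjusted lambda matches the sampled one.
print(cutmix_box(224, 224, lam=0.75, cy=112, cx=112))
# → ((56, 56, 168, 168), 0.75)

# Box centered near a corner: clipping shrinks it, so lambda moves toward 1.
box, lam_adj = cutmix_box(224, 224, lam=0.75, cy=10, cx=10)
print(box, round(lam_adj, 4))
# → (0, 0, 66, 66) 0.9132
```

When the box is clipped, less of the second image is pasted in, which is why the class recomputes `lam` from the box area instead of reusing the sampled value.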

class ModernCNN(nn.Module):
    def __init__(self, num_classes=10, dropout=0.3):
        super(ModernCNN, self).__init__()
        # Four conv stages; each downsampling stage halves the spatial size.
        self.conv1 = self._conv_block(3, 64)
        self.conv2 = self._conv_block(64, 128, downsample=True)
        self.conv3 = self._conv_block(128, 256, downsample=True)
        self.conv4 = self._conv_block(256, 512, downsample=True)
        # Global average pooling reduces each channel to a single value.
        self.gap = nn.AdaptiveAvgPool2d(1)
        # Channel attention gate: one weight in (0, 1) per channel.
        self.attention = nn.Sequential(
            nn.Linear(512, 256),
            nn.ReLU(),
            nn.Linear(256, 512),
            nn.Sigmoid()
        )
        self.classifier = nn.Sequential(
            nn.Dropout(dropout),
            nn.Linear(512, 256),
            nn.BatchNorm1d(256),
            nn.ReLU(),
            nn.Dropout(dropout/2),
            nn.Linear(256, num_classes)
        )

    def _conv_block(self, in_channels, out_channels, downsample=False):
        stride = 2 if downsample else 1
        return nn.Sequential(
            nn.Conv2d(in_channels, out_channels, 3, stride=stride, padding=1),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channels, out_channels, 3, padding=1),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True)
        )

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.gap(x)
        x = torch.flatten(x, 1)
        # Rescale the pooled features channel by channel.
        attention_weights = self.attention(x)
        x = x * attention_weights
        return self.classifier(x)

We strengthen our training with a unified MixUp/CutMix module, where we stochastically blend images or swap patches between them and interpolate the labels using the exact pixel ratio. We pair this with a modern CNN that stacks progressive conv blocks, applies global average pooling, and uses a learned attention gate before a dropout-regularized classifier, so we improve generalization while keeping inference simple.
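The attention gate in `ModernCNN` acts on the pooled feature vector rather than on spatial positions: a small bottleneck MLP produces one weight in (0, 1) per channel, and the features are rescaled by those weights. Here is a minimal sketch of that step in isolation, assuming random pooled features in place of real conv outputs; the class name `ChannelGate` is hypothetical.

```python
import torch
import torch.nn as nn

class ChannelGate(nn.Module):
    """Hypothetical stand-alone version of the gate applied after global pooling."""

    def __init__(self, channels=512, hidden=256):
        super().__init__()
        self.gate = nn.Sequential(
            nn.Linear(channels, hidden),   # squeeze to a bottleneck
            nn.ReLU(),
            nn.Linear(hidden, channels),   # expand back to one logit per channel
            nn.Sigmoid(),                  # map logits to weights in (0, 1)
        )

    def forward(self, pooled):
        weights = self.gate(pooled)
        return pooled * weights, weights

torch.manual_seed(0)
pooled = torch.randn(4, 512)               # stand-in for flattened pooled features
gated, weights = ChannelGate()(pooled)

print(gated.shape)                          # same shape as the input features
print(float(weights.min()), float(weights.max()))
```

Because the weights stay below 1, the gate can only attenuate channels. It is similar in spirit to squeeze-and-excitation gating, applied here once after pooling instead of inside each conv stage.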

class AdvancedTrainer:
    def __init__(self, model, device="cuda" if torch.cuda.is_available() else "cpu"):
        self.model = model.to(device)
        self.device = device
        self.mixup_cutmix = AdvancedMixupCutmix()
        self.optimizer = optim.AdamW(model.parameters(), lr=1e-3, weight_decay=1e-4)
        # The schedule length is fixed at epochs * steps_per_epoch = 1000 scheduler steps.
        self.scheduler = optim.lr_scheduler.OneCycleLR(
            self.optimizer, max_lr=1e-2, epochs=10, steps_per_epoch=100
        )
        self.criterion = nn.CrossEntropyLoss()

    def mixup_criterion(self, pred, y_a, y_b, lam):
        # Interpolate the loss with the same weight used to mix the inputs.
        return lam * self.criterion(pred, y_a) + (1 - lam) * self.criterion(pred, y_b)

    def train_epoch(self, dataloader):
        self.model.train()
        total_loss = 0
        correct = 0
        total = 0
        for batch_idx, (data, target) in enumerate(dataloader):
            data, target = data.to(self.device), target.to(self.device)
            data, target_a, target_b, lam = self.mixup_cutmix(data, target)
            self.optimizer.zero_grad()
            output = self.model(data)
            if lam != 1.0:
                loss = self.mixup_criterion(output, target_a, target_b, lam)
            else:
                loss = self.criterion(output, target)
            loss.backward()
            # Clip the global gradient norm before the optimizer update.
            torch.nn.utils.clip_grad_norm_(self.model.parameters(), max_norm=1.0)
            self.optimizer.step()
            # OneCycleLR is stepped once per batch, not once per epoch.
            self.scheduler.step()
            total_loss += loss.item()
            _, predicted = output.max(1)
            total += target.size(0)
            if lam != 1.0:
                # Weight matches against both label sets by the mixing ratio.
                correct += (lam * predicted.eq(target_a).sum().item() +
                            (1 - lam) * predicted.eq(target_b).sum().item())
            else:
                correct += predicted.eq(target).sum().item()
        return total_loss / len(dataloader), 100. * correct / total

We orchestrate training with AdamW, OneCycleLR, and dynamic MixUp/CutMix so we stabilize optimization and boost generalization. We compute an interpolated loss when mixing, clip gradients for safety, and step the scheduler every batch, so we track loss and accuracy per epoch in a single tight loop.
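The interpolated loss used when mixing is equivalent to a single cross-entropy against soft labels that put weight `lam` on the first target and `1 - lam` on the second. Below is a short check of that identity on random logits, as a sketch. The tensor sizes and `lam = 0.3` are arbitrary assumptions, and passing class probabilities as targets to `F.cross_entropy` requires PyTorch 1.10 or later.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
num_classes, batch = 10, 8
logits = torch.randn(batch, num_classes)
y_a = torch.randint(0, num_classes, (batch,))
y_b = y_a[torch.randperm(batch)]           # labels of the shuffled partner images
lam = 0.3

# Form used in the training loop: weighted sum of two hard-label losses.
loss_pair = lam * F.cross_entropy(logits, y_a) + (1 - lam) * F.cross_entropy(logits, y_b)

# Equivalent form: one loss against interpolated one-hot targets.
soft = lam * F.one_hot(y_a, num_classes).float() + (1 - lam) * F.one_hot(y_b, num_classes).float()
loss_soft = F.cross_entropy(logits, soft)

print(torch.allclose(loss_pair, loss_soft, atol=1e-6))
```

The two-term form avoids building one-hot tensors and works with any criterion that accepts integer class labels, which is one reason it is a common choice in MixUp/CutMix training loops.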

def demo_advanced_techniques():
    batch_size = 16
    num_classes = 10
    sample_data = torch.randn(batch_size, 3, 224, 224)
    sample_labels = torch.randint(0, num_classes, (batch_size,))
    transform_pipeline = AdvancedAugmentationPipeline(224, True)  # image size, training mode
    model = ModernCNN(num_classes=num_classes)
    trainer = AdvancedTrainer(model)

    print("🚀 Advanced Deep Learning Tutorial Demo")
    print("=" * 50)

    print("\n1. Advanced Augmentation Pipeline:")
    # Clamp the random tensor to [0, 1] before converting it to an 8-bit image.
    augmented = transform_pipeline(Image.fromarray((sample_data[0].clamp(0, 1).permute(1, 2, 0).numpy() * 255).astype(np.uint8)))
    print(f"   Original shape: {sample_data[0].shape}")
    print(f"   Augmented shape: {augmented.shape}")
    print(f"   Applied transforms: Resize, Crop, Flip, ColorJitter, Blur, Perspective, etc.")

    print("\n2. MixUp/CutMix Augmentation:")
    mixup_cutmix = AdvancedMixupCutmix()
    mixed_data, target_a, target_b, lam = mixup_cutmix(sample_data, sample_labels)
    print(f"   Mixed batch shape: {mixed_data.shape}")
    print(f"   Lambda value: {lam:.3f}")
    # Rough heuristic only: lam by itself does not identify which technique was applied.
    print(f"   Technique: {'MixUp' if lam > 0.7 else 'CutMix'}")

    print("\n3. Modern CNN Architecture:")
    model.eval()
    with torch.no_grad():
        output = model(sample_data)
    print(f"   Input shape: {sample_data.shape}")
    print(f"   Output shape: {output.shape}")
    print(f"   Features: Stacked conv blocks, Attention, Global Average Pooling")
    print(f"   Parameters: {sum(p.numel() for p in model.parameters()):,}")

    print("\n4. Advanced Training Simulation:")
    # A one-batch list stands in for a real DataLoader.
    dummy_loader = [(sample_data, sample_labels)]
    loss, acc = trainer.train_epoch(dummy_loader)
    print(f"   Training loss: {loss:.4f}")
    print(f"   Training accuracy: {acc:.2f}%")
    print(f"   Learning rate: {trainer.scheduler.get_last_lr()[0]:.6f}")

    print("\n✅ Tutorial completed successfully!")
    print("This code demonstrates state-of-the-art techniques in deep learning:")
    print("• Advanced data augmentation with TorchVision v2")
    print("• MixUp and CutMix for better generalization")
    print("• Modern CNN architecture with attention")
    print("• Advanced training loop with OneCycleLR")
    print("• Gradient clipping and weight decay")

if __name__ == "__main__":
    demo_advanced_techniques()

We run a compact end-to-end demo where we run our augmentation pipeline on a sample image, apply MixUp/CutMix, and sanity-check the ModernCNN with a forward pass. We then simulate one training epoch on dummy data to verify loss, accuracy, and learning-rate scheduling, so we confirm the full stack works before scaling to a real dataset.
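Since the demo reports a learning rate after a single step, it helps to see the whole OneCycleLR curve for the settings used in the trainer. The sketch below uses a single dummy parameter in place of a real model (an assumption made only to keep it short) and records the rate over all 1,000 scheduled steps. Two details are worth noting: the scheduler overrides the `lr=1e-3` passed to AdamW, starting from `max_lr / 25` under the default `div_factor`, and stepping past `epochs * steps_per_epoch` raises an error, so those two values should match the real dataloader length and epoch count.

```python
import torch
import torch.optim as optim

param = torch.nn.Parameter(torch.zeros(1))   # dummy parameter, no real model
optimizer = optim.AdamW([param], lr=1e-3, weight_decay=1e-4)
scheduler = optim.lr_scheduler.OneCycleLR(
    optimizer, max_lr=1e-2, epochs=10, steps_per_epoch=100
)

total_steps = 10 * 100
lrs = []
for _ in range(total_steps):
    lrs.append(scheduler.get_last_lr()[0])   # rate in effect for this step
    optimizer.step()                         # no gradients, so nothing is updated
    if len(lrs) < total_steps:
        scheduler.step()                     # one scheduler step per batch

peak_step = max(range(total_steps), key=lambda i: lrs[i])
print(f"start={lrs[0]:.6f} peak={max(lrs):.6f} at step {peak_step} end={lrs[-1]:.2e}")
```

With the default `pct_start` of 0.3, the rate warms up over roughly the first 30% of the schedule and then anneals to a value far below the starting rate.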

In conclusion, we have developed and tested a comprehensive workflow that integrates advanced augmentations, innovative CNN design, and modern training strategies. By experimenting with TorchVision v2, MixUp, CutMix, attention mechanisms, and OneCycleLR, we not only strengthen model performance but also deepen our understanding of cutting-edge techniques.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.