In this tutorial, we build a self-verifying DataOps AI agent that can plan, execute, and test data operations automatically using local Hugging Face models. We design the agent with three roles: a Planner that creates an execution strategy, an Executor that writes and runs code using pandas, and a Tester that validates the results for accuracy and consistency. By running Microsoft's Phi-2 model locally in Google Colab, we keep the workflow efficient, reproducible, and privacy-preserving while demonstrating how LLMs can automate complex data-processing tasks end-to-end.

!pip install -q transformers accelerate bitsandbytes scipy

import json, pandas as pd, numpy as np, torch
from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline, BitsAndBytesConfig

MODEL_NAME = "microsoft/phi-2"

class LocalLLM:
    def __init__(self, model_name=MODEL_NAME, use_8bit=False):
        print(f"Loading model: {model_name}")
        self.tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
        # Fall back to the EOS token when the tokenizer defines no pad token.
        if self.tokenizer.pad_token is None:
            self.tokenizer.pad_token = self.tokenizer.eos_token
        model_kwargs = {"device_map": "auto", "trust_remote_code": True}
        if use_8bit and torch.cuda.is_available():
            # 8-bit quantization is only attempted when a GPU is present.
            model_kwargs["quantization_config"] = BitsAndBytesConfig(load_in_8bit=True)
        else:
            # float32 on CPU, float16 on GPU.
            model_kwargs["torch_dtype"] = torch.float32 if not torch.cuda.is_available() else torch.float16
        self.model = AutoModelForCausalLM.from_pretrained(model_name, **model_kwargs)
        self.pipe = pipeline("text-generation", model=self.model, tokenizer=self.tokenizer,
                             max_new_tokens=512, do_sample=True, temperature=0.3, top_p=0.9,
                             pad_token_id=self.tokenizer.eos_token_id)
        print("✓ Model loaded successfully!\n")

    def generate(self, prompt, system_prompt="", temperature=0.3):
        # Phi-2 follows an "Instruct: ... Output:" prompt format.
        if system_prompt:
            full_prompt = f"Instruct: {system_prompt}\n\n{prompt}\nOutput:"
        else:
            full_prompt = f"Instruct: {prompt}\nOutput:"
        output = self.pipe(full_prompt, temperature=temperature, do_sample=temperature>0,
                           return_full_text=False, eos_token_id=self.tokenizer.eos_token_id)
        result = output[0]['generated_text'].strip()
        # Drop anything after a new "Instruct:" turn that the model may start on its own.
        if "Instruct:" in result:
            result = result.split("Instruct:")[0].strip()
        return result

We install the required libraries and load the Phi-2 model locally using Hugging Face Transformers. We create a LocalLLM class that initializes the tokenizer and model, supports optional quantization, and defines a generate method to produce text outputs. The class selects float32 on CPU and float16 on GPU, so the model runs on both, which makes it well suited to Colab.
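To make the prompt handling in `generate` easier to follow, here is a minimal sketch that isolates the two string operations it performs: wrapping the request in the "Instruct: ... Output:" layout and cutting the completion at the first extra "Instruct:" turn. The helper names `build_prompt` and `trim_completion` and the sample strings are illustrative assumptions, not part of the tutorial code, and no model is loaded.

```python
def build_prompt(prompt, system_prompt=""):
    """Wrap a request in the Instruct/Output layout used by generate()."""
    if system_prompt:
        return f"Instruct: {system_prompt}\n\n{prompt}\nOutput:"
    return f"Instruct: {prompt}\nOutput:"


def trim_completion(text):
    """Keep only the text before any new 'Instruct:' turn the model starts."""
    text = text.strip()
    if "Instruct:" in text:
        text = text.split("Instruct:")[0].strip()
    return text


# Hypothetical request and a hypothetical raw completion that runs on too long.
full_prompt = build_prompt("Sum the sales column.", "You are a data assistant.")
raw_completion = " result = df['sales'].sum()\nInstruct: Write another example\nOutput: ..."
trimmed = trim_completion(raw_completion)

print(full_prompt)
print(trimmed)
```

Keeping these two steps as plain string functions makes them easy to check without running the model.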

PLANNER_PROMPT = """You are a Data Operations Planner. Create a detailed execution plan as valid JSON.
Return ONLY a JSON object (no other text) with this structure:
{"steps": ["step 1","step 2"],"expected_output":"description","validation_criteria":["criteria 1","criteria 2"]}"""

EXECUTOR_PROMPT = """You are a Data Operations Executor. Write Python code using pandas.
Requirements:
- Use pandas (imported as pd) and numpy (imported as np)
- Store final result in variable 'result'
- Return ONLY Python code, no explanations or markdown"""

TESTER_PROMPT = """You are a Data Operations Tester. Verify execution results.
Return ONLY a JSON object (no other text) with this structure:
{"passed":true,"issues":["any issues found"],"recommendations":["suggestions"]}"""

class DataOpsAgent:
    def __init__(self, llm=None):
        self.llm = llm or LocalLLM()
        self.history = []

    def _extract_json(self, text):
        # First try the whole reply, then the span from the first '{' to the last '}'.
        try:
            return json.loads(text)
        except json.JSONDecodeError:
            start, end = text.find('{'), text.rfind('}')+1
            if start >= 0 and end > start:
                try:
                    return json.loads(text[start:end])
                except json.JSONDecodeError:
                    pass
        return None

We define the system prompts for the Planner, Executor, and Tester roles of our DataOps agent. We then initialize the DataOpsAgent class with a history list and a JSON extraction utility that parses structured responses even when the model wraps them in extra text. This lays the foundation for the agent's reasoning and execution pipeline.
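The JSON extraction utility can be exercised on its own. The sketch below is a standalone copy of the same logic under the assumed name `extract_json`, run on made-up model replies, to show which inputs it recovers and which it gives up on.

```python
import json


def extract_json(text):
    """Parse text as JSON, falling back to the span between the first '{' and last '}'."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        pass
    start, end = text.find("{"), text.rfind("}") + 1
    if start >= 0 and end > start:
        try:
            return json.loads(text[start:end])
        except json.JSONDecodeError:
            return None
    return None


# Hypothetical replies: clean JSON, JSON wrapped in chatter, no JSON, two objects.
clean = '{"passed": true, "issues": []}'
chatty = 'Sure! Here is the plan:\n{"steps": ["group by product", "sum sales"]}\nHope this helps.'
no_json = "I could not produce a plan."
two_objects = '{"a": 1} and {"b": 2}'

print(extract_json(clean))
print(extract_json(chatty))
print(extract_json(no_json))
print(extract_json(two_objects))
```

The last case returns `None` because the span from the first brace to the last brace covers both objects and is not valid JSON, which is why the agent supplies a default plan or test result when parsing fails.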

    def plan(self, task, data_info):
        print("\n" + "="*60)
        print("PHASE 1: PLANNING")
        print("="*60)
        prompt = f"Task: {task}\n\nData Info:\n{data_info}\n\nCreate an execution plan as JSON with steps, expected_output, and validation_criteria."
        plan_text = self.llm.generate(prompt, PLANNER_PROMPT, temperature=0.2)
        self.history.append(("PLANNER", plan_text))
        # Fall back to a one-step plan when the reply cannot be parsed as JSON.
        plan = self._extract_json(plan_text) or {"steps":[task],"expected_output":"Processed data","validation_criteria":["Result generated","No errors"]}
        print(f"\n📋 Plan Created:")
        print(f"  Steps: {len(plan.get('steps', []))}")
        for i, step in enumerate(plan.get('steps', []), 1):
            print(f"  {i}. {step}")
        print(f"  Expected: {plan.get('expected_output', 'N/A')}")
        return plan

    def execute(self, plan, data_context):
        print("\n" + "="*60)
        print("PHASE 2: EXECUTION")
        print("="*60)
        steps_text = "\n".join(f"{i}. {s}" for i, s in enumerate(plan.get('steps', []), 1))
        prompt = f"Task Steps:\n{steps_text}\n\nData available: DataFrame 'df'\n{data_context}\n\nWrite Python code to execute these steps. Store final result in 'result' variable."
        code = self.llm.generate(prompt, EXECUTOR_PROMPT, temperature=0.1)
        self.history.append(("EXECUTOR", code))
        # Strip markdown fences if the model added them despite the instructions.
        if "```python" in code: code = code.split("```python")[1].split("```")[0]
        elif "```" in code: code = code.split("```")[1].split("```")[0]
        # Drop blank lines and comment lines (comment lines mentioning 'import' are kept).
        lines = []
        for line in code.split('\n'):
            s = line.strip()
            if s and (not s.startswith('#') or 'import' in s):
                lines.append(line)
        code = "\n".join(lines).strip()
        print(f"\n💻 Generated Code:\n" + "-"*60)
        for i, line in enumerate(code.split('\n')[:15], 1):
            print(f"{i:2}. {line}")
        if len(code.split('\n')) > 15: print(f"  ... ({len(code.split(chr(10)))-15} more lines)")
        print("-"*60)
        return code

We implement the Planning and Execution phases of the agent. The Planner creates detailed task steps and validation criteria, and the Executor then generates pandas-based Python code to perform the task. Together, these two methods show how the agent moves from reasoning about a task to producing runnable code.
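The clean-up that `execute` applies to the model's reply is easiest to see on a concrete string. The following sketch reproduces that logic as a standalone function under the assumed name `extract_code`; the sample reply is invented, and the fence marker is built from single backticks only so the example can sit inside a fenced block.

```python
FENCE = "`" * 3  # the markdown code-fence marker, assembled to avoid a literal fence here


def extract_code(raw):
    """Pull the code out of a model reply and drop blank and comment-only lines."""
    code = raw
    if FENCE + "python" in code:
        code = code.split(FENCE + "python")[1].split(FENCE)[0]
    elif FENCE in code:
        code = code.split(FENCE)[1].split(FENCE)[0]
    kept = []
    for line in code.split("\n"):
        stripped = line.strip()
        # Same filter as execute(): comment lines survive only if they mention 'import'.
        if stripped and (not stripped.startswith("#") or "import" in stripped):
            kept.append(line)
    return "\n".join(kept).strip()


# Hypothetical reply that ignores the "no markdown" instruction.
raw_reply = "\n".join([
    "Here is the code:",
    FENCE + "python",
    "# total sales per product",
    "result = df.groupby('product')['sales'].sum()",
    "",
    FENCE,
    "Let me know if you need more.",
])

cleaned = extract_code(raw_reply)
print(cleaned)
```

Because only the first fenced block is kept, a reply that spreads its code across several fenced blocks would lose everything after the first one.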

    def test(self, plan, result, execution_error=None):
        print("\n" + "="*60)
        print("PHASE 3: TESTING & VERIFICATION")
        print("="*60)
        # Describe either the error or the type and content of the result for the Tester.
        result_desc = f"EXECUTION ERROR: {execution_error}" if execution_error else f"Result type: {type(result).__name__}\n"
        if not execution_error:
            if isinstance(result, pd.DataFrame):
                result_desc += f"Shape: {result.shape}\nColumns: {list(result.columns)}\nSample:\n{result.head(3).to_string()}"
            elif isinstance(result, (int,float,str)):
                result_desc += f"Value: {result}"
            else:
                result_desc += f"Value: {str(result)[:200]}"
        criteria_text = "\n".join(f"- {c}" for c in plan.get('validation_criteria', []))
        prompt = f"Validation Criteria:\n{criteria_text}\n\nExpected: {plan.get('expected_output', 'N/A')}\n\nActual Result:\n{result_desc}\n\nEvaluate if result meets criteria. Return JSON with passed (true/false), issues, and recommendations."
        test_result = self.llm.generate(prompt, TESTER_PROMPT, temperature=0.2)
        self.history.append(("TESTER", test_result))
        # If the verdict cannot be parsed, pass only when execution raised no error.
        test_json = self._extract_json(test_result) or {"passed":execution_error is None,"issues":["Could not parse test result"],"recommendations":["Review manually"]}
        print(f"\n✓ Test Results:\n  Status: {'✅ PASSED' if test_json.get('passed') else '❌ FAILED'}")
        if test_json.get('issues'):
            print("  Issues:")
            for issue in test_json['issues'][:3]:
                print(f"    • {issue}")
        if test_json.get('recommendations'):
            print("  Recommendations:")
            for rec in test_json['recommendations'][:3]:
                print(f"    • {rec}")
        return test_json

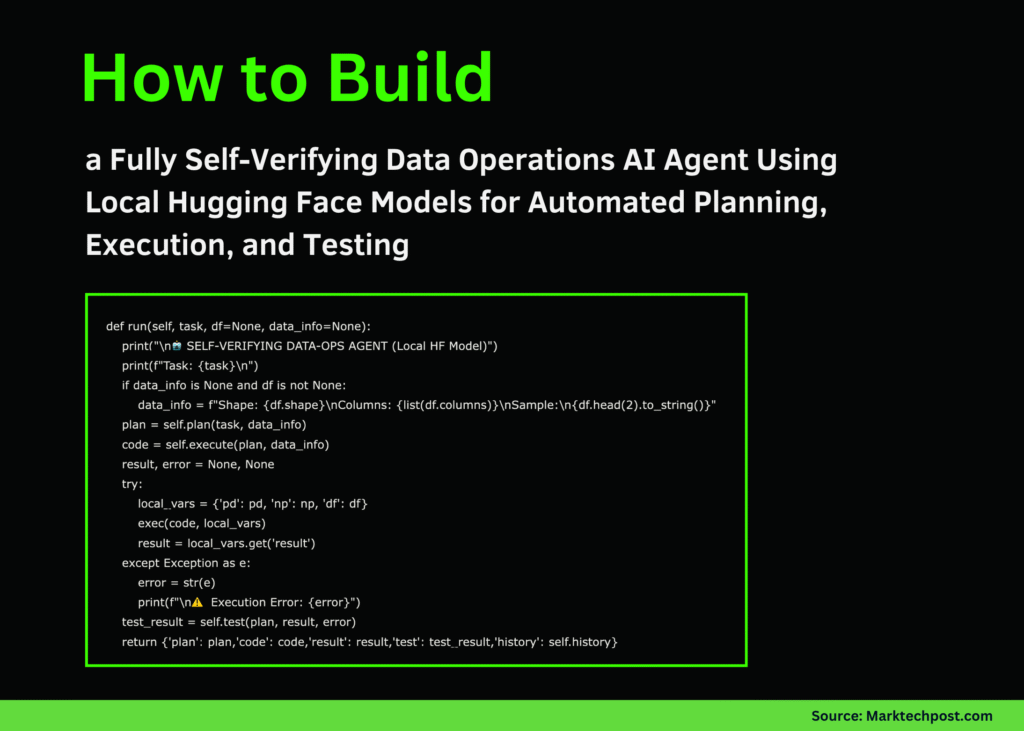

    def run(self, task, df=None, data_info=None):
        print("\n🤖 SELF-VERIFYING DATA-OPS AGENT (Local HF Model)")
        print(f"Task: {task}\n")
        if data_info is None and df is not None:
            data_info = f"Shape: {df.shape}\nColumns: {list(df.columns)}\nSample:\n{df.head(2).to_string()}"
        plan = self.plan(task, data_info)
        code = self.execute(plan, data_info)
        result, error = None, None
        try:
            # Run the generated code in a namespace that exposes pd, np, and df.
            local_vars = {'pd': pd, 'np': np, 'df': df}
            exec(code, local_vars)
            result = local_vars.get('result')
        except Exception as e:
            error = str(e)
            print(f"\n⚠️ Execution Error: {error}")
        test_result = self.test(plan, result, error)
        return {'plan': plan,'code': code,'result': result,'test': test_result,'history': self.history}

We focus on the Testing and Verification phase of our workflow. We let the agent evaluate its own output against the validation criteria from the plan and summarize the outcome as structured JSON. We then integrate all three phases (planning, execution, and testing) into a single self-verifying pipeline in the run method, which executes the generated code and passes either the result or the error message on to the Tester.
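The execution step inside `run` relies on `exec` with a namespace dictionary and then reads a `result` variable back out. The sketch below isolates that pattern with hand-written code strings standing in for model output; the helper name `run_generated_code` is an assumption, and a plain list replaces the DataFrame so the example has no dependencies.

```python
def run_generated_code(code, namespace):
    """Execute code in a copy of namespace and return (result, error_message)."""
    scope = dict(namespace)  # copy so the caller's dictionary is left untouched
    try:
        exec(code, scope)
    except Exception as exc:
        return None, f"{type(exc).__name__}: {exc}"
    # Same contract as the Executor prompt: the answer lives in a variable named 'result'.
    return scope.get("result"), None


# Stand-ins for the data and for two Executor replies (one valid, one broken).
rows = [("A", 100), ("B", 150), ("A", 200)]
good_code = "result = sum(value for product, value in rows if product == 'A')"
bad_code = "result = undefined_name + 1"

ok_result, ok_error = run_generated_code(good_code, {"rows": rows})
bad_result, bad_error = run_generated_code(bad_code, {"rows": rows})

print(ok_result, ok_error)
print(bad_result, bad_error)
```

Note that `exec` provides no isolation: the generated code has the same permissions as the notebook itself, so this pattern is best kept to disposable environments such as a Colab session.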

def demo_basic(agent):
    print("\n" + "#"*60)
    print("# DEMO 1: Sales Data Aggregation")
    print("#"*60)
    df = pd.DataFrame({'product':['A','B','A','C','B','A','C'],
                       'sales':[100,150,200,80,130,90,110],
                       'region':['North','South','North','East','South','West','East']})
    task = "Calculate total sales by product"
    output = agent.run(task, df)
    if output['result'] is not None:
        print(f"\n📊 Final Result:\n{output['result']}")
    return output

def demo_advanced(agent):
    print("\n" + "#"*60)
    print("# DEMO 2: Customer Age Analysis")
    print("#"*60)
    df = pd.DataFrame({'customer_id':range(1,11),
                       'age':[25,34,45,23,56,38,29,41,52,31],
                       'purchases':[5,12,8,3,15,7,9,11,6,10],
                       'spend':[500,1200,800,300,1500,700,900,1100,600,1000]})
    task = "Calculate average spend by age group: young (under 35) and mature (35+)"
    output = agent.run(task, df)
    if output['result'] is not None:
        print(f"\n📊 Final Result:\n{output['result']}")
    return output

if __name__ == "__main__":
    print("🚀 Initializing Local LLM...")
    print("Using CPU mode for maximum compatibility\n")
    try:
        llm = LocalLLM(use_8bit=False)
        agent = DataOpsAgent(llm)
        demo_basic(agent)
        print("\n\n")
        demo_advanced(agent)
        print("\n" + "="*60)
        print("✅ Tutorial Complete!")
        print("="*60)
        print("\nKey Features:")
        print("  • 100% Local - No API calls required")
        print("  • Uses Phi-2 from Microsoft (2.7B params)")
        print("  • Self-verifying 3-phase workflow")
        print("  • Runs on free Google Colab CPU/GPU")
    except Exception as e:
        print(f"\n❌ Error: {e}")
        print("Troubleshooting:\n1. pip install -q transformers accelerate scipy\n2. Restart runtime\n3. Try a different model")

We build two demo examples to test the agent's capabilities using simple sales and customer datasets. We initialize the model, execute the DataOps workflow, and observe the full cycle from planning to validation. We conclude the tutorial by summarizing key benefits and encouraging further experimentation with local models.
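Since the agent's code is generated at run time, it helps to know what a correct answer looks like for each demo. The following hand-written pandas reference is a sketch for comparison only, not output from the agent; the variable names are assumptions, and the DataFrames repeat the demo data from above.

```python
import numpy as np
import pandas as pd

# Demo 1 data: total sales by product.
sales = pd.DataFrame({
    "product": ["A", "B", "A", "C", "B", "A", "C"],
    "sales": [100, 150, 200, 80, 130, 90, 110],
    "region": ["North", "South", "North", "East", "South", "West", "East"],
})
total_by_product = sales.groupby("product")["sales"].sum()

# Demo 2 data: average spend for young (under 35) and mature (35+) customers.
customers = pd.DataFrame({
    "customer_id": range(1, 11),
    "age": [25, 34, 45, 23, 56, 38, 29, 41, 52, 31],
    "purchases": [5, 12, 8, 3, 15, 7, 9, 11, 6, 10],
    "spend": [500, 1200, 800, 300, 1500, 700, 900, 1100, 600, 1000],
})
age_group = np.where(customers["age"] < 35, "young", "mature")
avg_spend_by_group = customers.groupby(age_group)["spend"].mean()

print(total_by_product)
print(avg_spend_by_group)
```

For this data the totals are 390 for A, 280 for B, and 190 for C, and the average spend is 780 for the young group and 940 for the mature group. The agent may return the same numbers in a different shape (for example a DataFrame instead of a Series), so a comparison should look at values, not exact formatting.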

In conclusion, we created a fully autonomous, self-verifying DataOps system powered by a local Hugging Face model. We saw how each stage (planning, execution, and testing) hands its output to the next to produce checked results without relying on any cloud APIs. This workflow highlights the strength of local LLMs such as Phi-2 for lightweight automation and encourages us to extend this architecture to more advanced data pipelines, validation frameworks, and multi-agent data systems in the future.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.