In this tutorial, we explore how an agent can internalize planning, memory, and tool use inside a single neural model rather than relying on external orchestration. We design a compact, model-native agent that learns to perform arithmetic reasoning tasks through reinforcement learning. By combining a stage-aware actor-critic network with a curriculum of increasingly complex environments, we enable the agent to discover how to use internalized “tools” and short-term memory to reach correct solutions end-to-end. We work step by step to observe how learning evolves from simple reasoning to multi-step compositional behavior. Check out the FULL CODES here.

    import math, random, torch, torch.nn as nn, torch.nn.functional as F

    device = "cuda" if torch.cuda.is_available() else "cpu"; torch.manual_seed(0); random.seed(0)

    # Vocabulary: digits 0-9, a context marker, and the internal "tool" tokens
    V = 18; CTX = 10; MUL, ADD, SUB, ANS, STO, RCL, EOS = 11, 12, 13, 14, 15, 16, 17
    tok2str = {**{i: str(i) for i in range(10)}, CTX:"[CTX]", MUL:"[MUL]", ADD:"[ADD]", SUB:"[SUB]", ANS:"[ANS]", STO:"[STO]", RCL:"[RCL]", EOS:"[EOS]"}

    class ToolEnv:
        def __init__(self, max_steps=7):
            self.max_steps = max_steps

        def sample(self, stage):
            # Draw five digits; later stages expose more operands and a deeper expression
            a,b,c,d,e = [random.randint(0,9) for _ in range(5)]
            if stage==0: ctx=[a,b,c]; target=a*b+c
            elif stage==1: ctx=[a,b,c,d]; target=(a*b+c)-d
            else: ctx=[a,b,c,d,e]; target=(a*b+c)-(d*e)
            return ctx, target, (a,b,c,d,e)

        def step_seq(self, actions, abc, stage):
            # Execute a sequence of tool tokens; `last` is the running value, `mem` the memory slot
            a,b,c,d,e = abc; last=None; mem=None; steps=0; shaped=0.0
            goal0=a*b; goal1=goal0+c; goal2=goal1-d; goal3=d*e; goal4=goal1-goal3
            for act in actions:
                steps+=1
                if act==MUL: last=(a*b if last is None else last*(d if stage>0 else 1))
                elif act==ADD and last is not None: last+=c
                elif act==SUB and last is not None:
                    last -= (e if stage==2 and mem=="use_d" else (d if stage>0 else 0))
                elif act==STO: mem="use_d" if stage>=1 else "ok"
                elif act==RCL and mem is not None:
                    last = (d*e) if (stage==2 and mem=="use_d") else (last if last else 0)
                elif act==ANS:
                    # Note: this indexing scores against goal0/goal1/goal2 for stages 0/1/2,
                    # whereas sample() reports a*b+c, (a*b+c)-d, and (a*b+c)-(d*e) as targets.
                    target=[goal0,goal1,goal2,goal4][stage] if stage==2 else [goal0,goal1,goal2][stage]
                    correct=(last==target)
                    # Shaped bonus for matching intermediate sub-goals
                    if stage==0: shaped += 0.25*(last==goal0)+0.5*(last==goal1)
                    if stage==1: shaped += 0.25*(last==goal0)+0.5*(last==goal1)+0.75*(last==goal2)
                    if stage==2: shaped += 0.2*(last==goal0)+0.4*(last==goal1)+0.6*(last==goal4)+0.6*(last==goal3)
                    return (1.0 if correct else 0.0)+0.2*shaped, steps
                if steps>=self.max_steps: break
            # No [ANS] token emitted within the step budget: zero reward
            return 0.0, steps

We start by setting up the environment and defining the symbolic tools our agent can use. We create a small synthetic world where each action, such as multiplication, addition, or subtraction, acts as an internal tool. This environment allows us to simulate reasoning tasks in which the agent must plan sequences of tool use to arrive at the correct answer.
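
To make the idea of tool tokens concrete, here is a minimal sketch of how a token sequence turns into a value. It is a simplified, stage-1-only stand-in for the environment's `step_seq`: the helper name `run_tools` is hypothetical, the token ids are assumed to match the tutorial's, and the reward shaping and memory tokens are left out.

```python
MUL, ADD, SUB, ANS = 11, 12, 13, 14  # assumed to match the tutorial's token ids

def run_tools(actions, a, b, c, d):
    """Simplified stage-1 interpreter (hypothetical helper).

    Returns the running value at the moment [ANS] is emitted,
    or None if the sequence never answers.
    """
    last = None
    for act in actions:
        if act == MUL:
            last = a * b if last is None else last * d
        elif act == ADD and last is not None:
            last += c
        elif act == SUB and last is not None:
            last -= d
        elif act == ANS:
            return last
    return None

print(run_tools([MUL, ADD, SUB, ANS], a=3, b=4, c=5, d=2))  # (3*4+5)-2 = 15
print(run_tools([ADD, MUL, ANS], a=3, b=4, c=5, d=2))       # ADD before MUL is ignored: 12
print(run_tools([MUL, ADD, SUB], a=3, b=4, c=5, d=2))       # never answers: None
```

The second call shows why ordering matters: a tool applied before any value exists is a wasted step, so the agent has to plan the sequence rather than just pick the right set of tokens.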

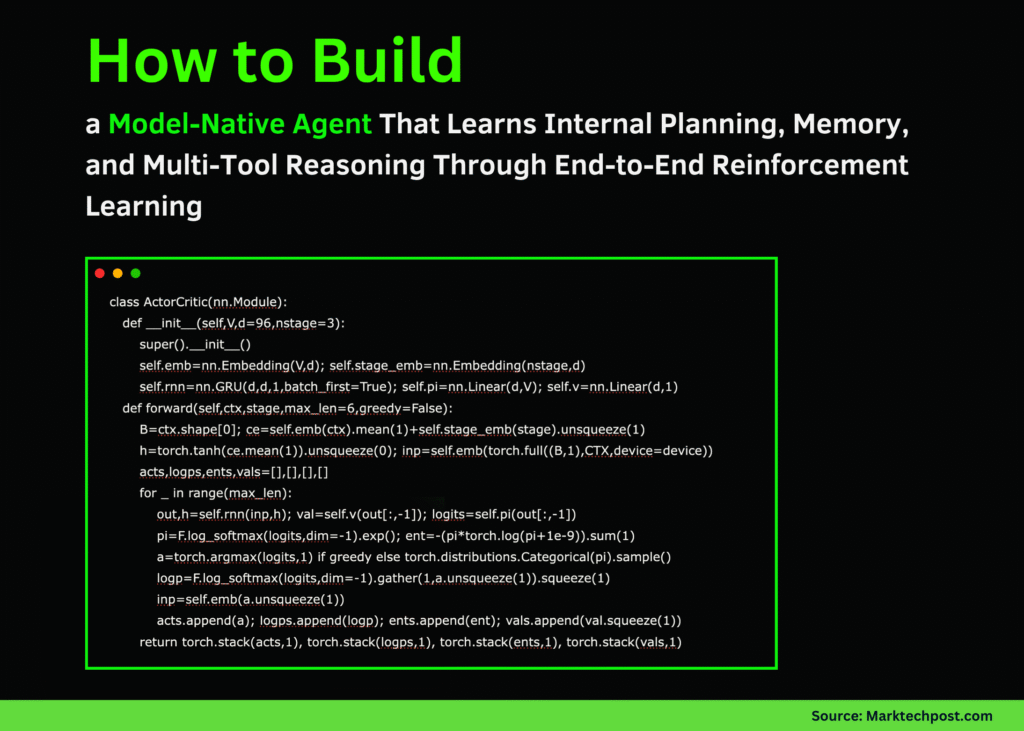

    class ActorCritic(nn.Module):
        def __init__(self,V,d=96,nstage=3):
            super().__init__()
            self.emb=nn.Embedding(V,d); self.stage_emb=nn.Embedding(nstage,d)
            self.rnn=nn.GRU(d,d,1,batch_first=True); self.pi=nn.Linear(d,V); self.v=nn.Linear(d,1)

        def forward(self,ctx,stage,max_len=6,greedy=False):
            B=ctx.shape[0]
            # Mean-pool the context embeddings and add the stage embedding. Both are (B, d),
            # so the initial hidden state stays per-example (no broadcasting across the batch).
            ce=self.emb(ctx).mean(1)+self.stage_emb(stage)
            h=torch.tanh(ce).unsqueeze(0)
            inp=self.emb(torch.full((B,1),CTX,dtype=torch.long,device=device))
            acts,logps,ents,vals=[],[],[],[]
            for _ in range(max_len):
                out,h=self.rnn(inp,h); val=self.v(out[:,-1]); logits=self.pi(out[:,-1])
                pi=F.log_softmax(logits,dim=-1).exp(); ent=-(pi*torch.log(pi+1e-9)).sum(1)
                # Greedy decoding for evaluation, sampling for exploration during training
                a=torch.argmax(logits,1) if greedy else torch.distributions.Categorical(pi).sample()
                logp=F.log_softmax(logits,dim=-1).gather(1,a.unsqueeze(1)).squeeze(1)
                inp=self.emb(a.unsqueeze(1))  # feed the chosen token back in as the next input
                acts.append(a); logps.append(logp); ents.append(ent); vals.append(val.squeeze(1))
            return torch.stack(acts,1), torch.stack(logps,1), torch.stack(ents,1), torch.stack(vals,1)

We then design our model-native policy using an actor-critic structure built around a GRU. We embed both tokens and task stages, allowing the network to adapt its reasoning depth according to task complexity. This setup enables the agent to learn contextually when and how to use internal tools within a single unified model.
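
The per-step choice inside the policy can be illustrated without a neural network. The sketch below uses plain Python and hypothetical helper names (`softmax`, `entropy`, `choose`) to show the same three quantities the forward pass works with: action probabilities from logits, their entropy, and greedy versus sampled selection. It is an illustration of the idea, not the model's actual code path.

```python
import math
import random

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs, eps=1e-9):
    # Same small epsilon inside the log as in the tutorial's entropy term
    return -sum(p * math.log(p + eps) for p in probs)

def choose(logits, greedy=False, rng=random):
    probs = softmax(logits)
    if greedy:
        # Evaluation: always take the most likely token
        return max(range(len(probs)), key=probs.__getitem__)
    # Training: sample in proportion to the probabilities to keep exploring
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

uniform = softmax([0.0] * 18)  # an untrained policy over the 18-token vocabulary
print(round(entropy(uniform), 3), round(math.log(18), 3))
print(choose([0.1, 2.0, -1.0], greedy=True))
```

A uniform distribution has the maximum possible entropy, `log(V)`; as training sharpens the policy, this value falls, which is what the entropy bonus in the loss pushes back against.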

    env=ToolEnv(); net=ActorCritic(V).to(device)
    opt=torch.optim.Adam(net.parameters(),lr=3e-4)

    def pad_batch(ctxs):
        # Append [CTX] to each context and right-pad with [EOS] to a common length
        L=max(len(c)+1 for c in ctxs)
        out=torch.full((len(ctxs),L),EOS,dtype=torch.long,device=device)
        for i,c in enumerate(ctxs): out[i,:len(c)+1]=torch.tensor(c+[CTX],device=device)
        return out

    def run_batch(stage,batch=128,train=True,greedy=False):
        ctxs=[]; metas=[]
        for _ in range(batch):
            c,t,abc=env.sample(stage); ctxs.append(c); metas.append((t,abc))
        ctx=pad_batch(ctxs); stage_t=torch.full((batch,),stage,device=device,dtype=torch.long)
        acts,logps,ents,vals=net(ctx,stage_t,max_len=6,greedy=greedy)
        # Score each sampled trajectory in the environment
        rewards=[]
        for i in range(batch):
            traj = acts[i].tolist()
            abc = metas[i][1]
            r,_ = env.step_seq(traj,abc,stage)
            rewards.append(r)
        R=torch.tensor(rewards,device=device).float()
        adv=(R-vals.sum(1)).detach()  # advantage: return minus the summed value estimates
        if not train: return R.mean().item(), 0.0
        # Policy-gradient loss, value regression loss, and entropy bonus
        pg=-(logps.sum(1)*adv).mean(); vloss=F.mse_loss(vals.sum(1),R); ent=-ents.mean()
        loss=pg+0.5*vloss+0.01*ent
        opt.zero_grad(); loss.backward(); nn.utils.clip_grad_norm_(net.parameters(),1.0); opt.step()
        return R.mean().item(), loss.item()

We implement the reinforcement learning training loop using an advantage actor-critic (A2C) update. We train the agent end-to-end across batches of synthetic problems, updating policy and value networks simultaneously. Here, we incorporate entropy regularization to promote exploration and prevent premature convergence.
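
As a worked example of the loss arithmetic, the sketch below recomputes the three terms in plain Python for a batch of two trajectories. The function name `a2c_loss` and the toy numbers are hypothetical; the inputs are assumed to be already summed per trajectory (returns, value estimates, log-probabilities), and the entropy is taken as one mean value per trajectory for simplicity. The coefficients 0.5 and 0.01 follow the tutorial.

```python
def a2c_loss(returns, values, logps, entropies, vf_coef=0.5, ent_coef=0.01):
    """Plain-Python sketch of the combined actor-critic loss (hypothetical helper)."""
    n = len(returns)
    # Advantage is treated as a constant, mirroring .detach() in the tutorial
    adv = [r - v for r, v in zip(returns, values)]
    pg = -sum(lp * a for lp, a in zip(logps, adv)) / n          # policy-gradient term
    vloss = sum((v - r) ** 2 for v, r in zip(values, returns)) / n  # value regression (MSE)
    ent = sum(entropies) / n                                     # mean policy entropy
    # Subtracting the entropy rewards more exploratory policies
    return pg + vf_coef * vloss - ent_coef * ent

loss = a2c_loss(
    returns=[1.0, 0.0],    # one solved trajectory, one failed
    values=[0.5, 0.5],     # critic predicts 0.5 for both
    logps=[-1.0, -2.0],
    entropies=[1.0, 1.0],
)
print(round(loss, 3))  # -0.25 + 0.5*0.25 - 0.01*1.0 = -0.135
```

The solved trajectory has a positive advantage, so minimizing the loss raises its log-probability, while the failed one has a negative advantage and is pushed down.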

    print("Training…")
    stages=[0,0,0,1,1,2]  # curriculum: one entry per block of 10 episodes
    for ep in range(1,61):
        stage=stages[min((ep-1)//10,len(stages)-1)]
        acc,loss=run_batch(stage,batch=192,train=True)
        if ep%5==0:
            # Periodically evaluate greedy decoding on every stage
            with torch.no_grad():
                evals=[run_batch(s,train=False,greedy=True)[0] for s in [0,1,2]]
            print(f"ep={ep:02d} stage={stage} acc={acc:.3f} | eval T0={evals[0]:.3f} "
                  f"T1={evals[1]:.3f} T2={evals[2]:.3f} loss={loss:.3f}")

We start the main training process using a curriculum strategy where tasks gradually increase in difficulty. As we train, we evaluate the agent on all stages to observe its ability to generalize from simpler to more complex reasoning steps. The printed metrics show how internal planning improves over time.
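
The curriculum schedule is easy to inspect in isolation. The sketch below wraps the same indexing expression in a hypothetical helper, `stage_for_epoch`, so we can see exactly which episodes train on which stage; the `block` parameter name is an assumption introduced for illustration.

```python
def stage_for_epoch(ep, stages=(0, 0, 0, 1, 1, 2), block=10):
    """Map a 1-based episode index to a curriculum stage (hypothetical helper)."""
    # Each schedule entry covers `block` episodes; past the end we stay on the last entry
    return stages[min((ep - 1) // block, len(stages) - 1)]

for ep in (1, 30, 31, 50, 51, 60):
    print(ep, stage_for_epoch(ep))
```

With the tutorial's schedule, episodes 1 to 30 use stage 0, episodes 31 to 50 use stage 1, and episodes 51 to 60 use stage 2, so the hardest task receives the fewest updates.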

    def explain(stage):
        # Sample one problem, decode greedily, and report the chosen tool sequence and reward
        c,t,abc=env.sample(stage)
        ctx=pad_batch([c]); stage_t=torch.tensor([stage],device=device)
        with torch.no_grad(): a,_,_,_=net(ctx,stage_t,greedy=True)
        seq=[tok2str[x] for x in a[0].tolist()]
        r,_=env.step_seq(a[0].tolist(),abc,stage)
        return dict(stage=stage,ctx=c,target=t,actions=" ".join(seq),reward=round(float(r),2))

    with torch.no_grad():
        for s in [0,1,2]:
            print(f"\nStage {s} samples:")
            for _ in range(5): print(explain(s))

    with torch.no_grad():
        finals=[run_batch(s,train=False,greedy=True,batch=1000)[0] for s in [0,1,2]]
    print(f"\nFinal greedy accuracies → T0={finals[0]:.3f}, T1={finals[1]:.3f}, T2={finals[2]:.3f}")

We finish by probing the trained agent and printing example reasoning trajectories. We visualize the sequence of tool tokens the model chooses and verify whether it reaches the correct result. Finally, we evaluate the overall performance, demonstrating that the model successfully integrates planning, memory, and reasoning into an internalized process.
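
When reading the printed trajectories, note that the policy always emits a fixed number of tokens, while the environment stops scoring at the first `[ANS]`. The sketch below shows one way to render only the part of a trajectory that actually counts. The helper `render` and the `TOK2STR` table are assumptions that mirror the tutorial's token ids.

```python
# Token table assumed to mirror the tutorial's tok2str mapping
TOK2STR = {**{i: str(i) for i in range(10)},
           10: "[CTX]", 11: "[MUL]", 12: "[ADD]", 13: "[SUB]",
           14: "[ANS]", 15: "[STO]", 16: "[RCL]", 17: "[EOS]"}
ANS = 14

def render(actions, stop=ANS):
    """Render action ids as tool names, truncated after the first stop token."""
    out = []
    for tok in actions:
        out.append(TOK2STR.get(tok, f"<{tok}>"))
        if tok == stop:
            break  # tokens after [ANS] never reach the environment's reward
    return " ".join(out)

print(render([11, 12, 14, 17, 17, 17]))  # [MUL] [ADD] [ANS]
print(render([11, 12, 13, 13, 13, 13]))  # no [ANS]: the full sequence is shown
```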

In conclusion, we see that even a neural network can learn internalized planning and tool-use behaviors when trained with reinforcement signals. We successfully move beyond traditional pipeline-style architectures, where memory, planning, and execution are separate, toward a model-native agent that integrates these components as part of its learned dynamics. This approach represents a shift in agentic AI, demonstrating how end-to-end learning can produce emergent reasoning and self-organized decision-making without the need for handcrafted control loops.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.