In this tutorial, we walk through an advanced end-to-end data science workflow where we combine traditional machine learning with the power of Gemini. We begin by preparing and modeling the diabetes dataset, then we dive into evaluation, feature importance, and partial dependence. Along the way, we bring in Gemini as our AI data scientist to explain results, answer exploratory questions, and highlight risks. By doing this, we build a predictive model while also enhancing our insights and decision-making through natural language interaction. Check out the FULL CODES here.

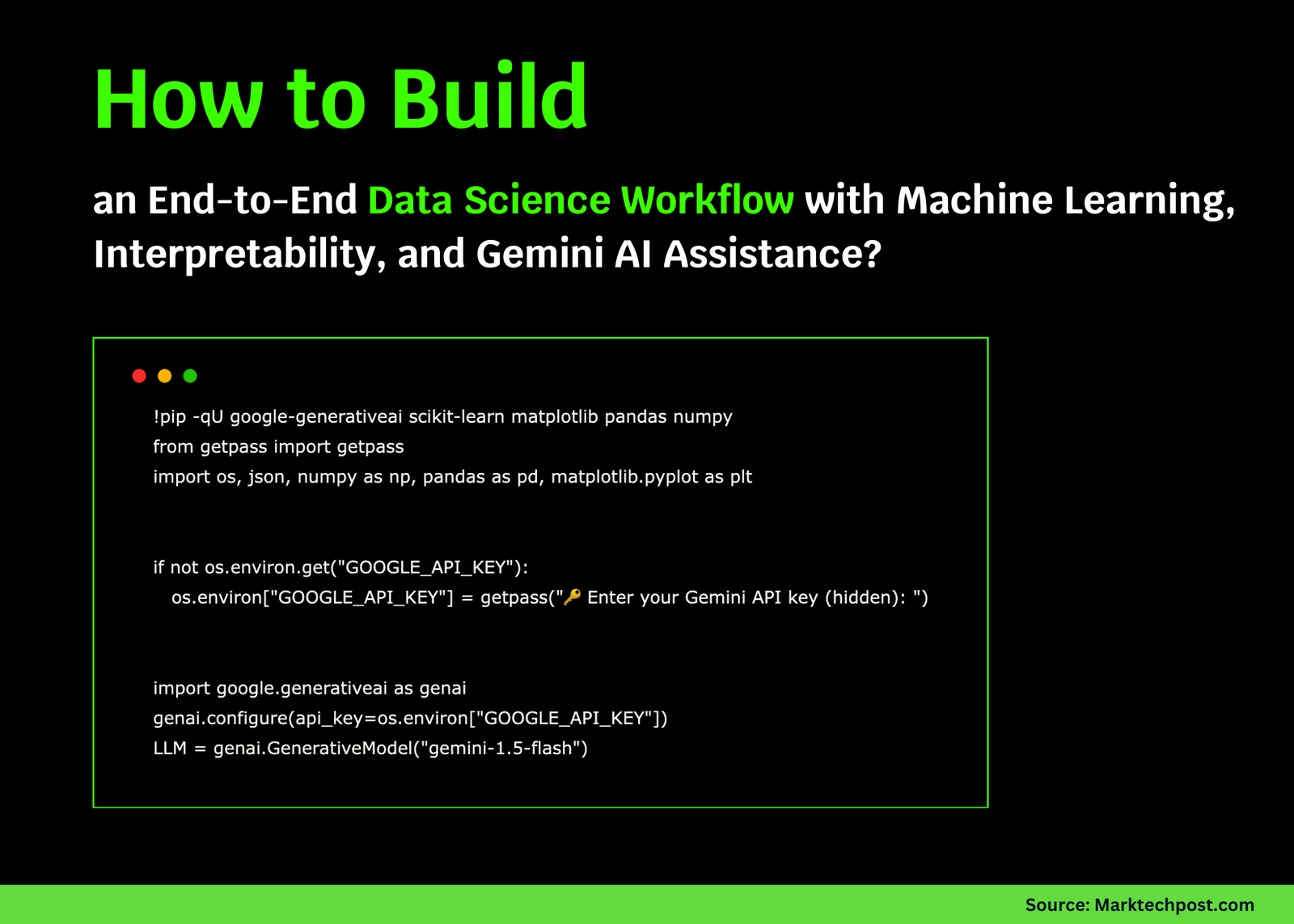

!pip install -qU google-generativeai scikit-learn matplotlib pandas numpy

from getpass import getpass
import os, json, numpy as np, pandas as pd, matplotlib.pyplot as plt

# Prompt for the API key only if it is not already set in the environment.
if not os.environ.get("GOOGLE_API_KEY"):
    os.environ["GOOGLE_API_KEY"] = getpass("🔑 Enter your Gemini API key (hidden): ")

import google.generativeai as genai
genai.configure(api_key=os.environ["GOOGLE_API_KEY"])
LLM = genai.GenerativeModel("gemini-1.5-flash")

def ask_llm(prompt, sys=None):
    # Prepend the optional system message to the user prompt as plain text.
    p = prompt if sys is None else f"System:\n{sys}\n\nUser:\n{prompt}"
    r = LLM.generate_content(p)
    return (getattr(r, "text", "") or "").strip()

from sklearn.datasets import load_diabetes

raw = load_diabetes(as_frame=True)
df = raw.frame.rename(columns={"target":"disease_progression"})
print("Shape:", df.shape); display(df.head())

from sklearn.model_selection import train_test_split, KFold, cross_val_score
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import StandardScaler, QuantileTransformer
from sklearn.ensemble import HistGradientBoostingRegressor
from sklearn.pipeline import Pipeline

X = df.drop(columns=["disease_progression"]); y = df["disease_progression"]
num_cols = X.columns.tolist()

# Each numeric column is emitted twice: once standardized, once rank-transformed to a normal shape.
pre = ColumnTransformer(
    [("scale", StandardScaler(), num_cols),
     ("rank", QuantileTransformer(n_quantiles=min(200, len(X)), output_distribution="normal"), num_cols)],
    remainder="drop", verbose_feature_names_out=False)

model = HistGradientBoostingRegressor(max_depth=3, learning_rate=0.07,
                                      l2_regularization=0.0, max_iter=500,
                                      early_stopping=True, validation_fraction=0.15)

pipe = Pipeline([("prep", pre), ("hgbt", model)])

Xtr, Xte, ytr, yte = train_test_split(X, y, test_size=0.20, random_state=42)
cv = KFold(n_splits=5, shuffle=True, random_state=42)
cv_mse = -cross_val_score(pipe, Xtr, ytr, scoring="neg_mean_squared_error", cv=cv).mean()
cv_rmse = float(cv_mse ** 0.5)
pipe.fit(Xtr, ytr)

We load the diabetes dataset, preprocess the features, and build a robust pipeline using scaling, quantile transformation, and gradient boosting. We split the data, perform cross-validation on the training split to estimate RMSE, and then fit the final model to see how well it generalizes.
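
One detail of the cross-validation step is worth spelling out: the code averages the per-fold MSE first and takes the square root afterwards, which is not the same as averaging per-fold RMSE values. The dependency-free sketch below illustrates the difference with made-up fold errors. The numbers and function names are illustrative assumptions, not outputs of the tutorial's pipeline.

```python
import math

def rmse_of_mean_mse(fold_mse):
    """Square root of the mean fold MSE (the form used for cv_rmse in the tutorial)."""
    return math.sqrt(sum(fold_mse) / len(fold_mse))

def mean_of_fold_rmse(fold_mse):
    """Mean of the per-fold RMSE values (a common alternative)."""
    return sum(math.sqrt(m) for m in fold_mse) / len(fold_mse)

# Hypothetical per-fold MSE values, chosen only to keep the arithmetic easy to follow.
fold_mse = [4.0, 16.0]

pooled = rmse_of_mean_mse(fold_mse)      # sqrt((4 + 16) / 2) = sqrt(10)
averaged = mean_of_fold_rmse(fold_mse)   # (2 + 4) / 2 = 3.0
print(f"sqrt(mean MSE) = {pooled:.3f}, mean(fold RMSE) = {averaged:.3f}")
```

Because the square root is concave, the first quantity is never smaller than the second. The gap widens when fold errors vary a lot, so it helps to state which of the two is being reported.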

from sklearn.metrics import mean_squared_error, mean_absolute_error, r2_score

pred_tr = pipe.predict(Xtr); pred_te = pipe.predict(Xte)
rmse_tr = mean_squared_error(ytr, pred_tr) ** 0.5
rmse_te = mean_squared_error(yte, pred_te) ** 0.5
mae_te = mean_absolute_error(yte, pred_te)
r2_te = r2_score(yte, pred_te)
print(f"CV RMSE={cv_rmse:.2f} | Train RMSE={rmse_tr:.2f} | Test RMSE={rmse_te:.2f} | Test MAE={mae_te:.2f} | R²={r2_te:.3f}")

plt.figure(figsize=(5,4))
plt.scatter(pred_te, yte - pred_te, s=12)
plt.axhline(0, lw=1); plt.xlabel("Predicted"); plt.ylabel("Residual"); plt.title("Residuals (Test)")
plt.show()

from sklearn.inspection import permutation_importance

# With a negated-MSE scorer, each importance is the mean increase in MSE after shuffling that column.
imp = permutation_importance(pipe, Xte, yte, scoring="neg_mean_squared_error", n_repeats=10, random_state=0)
imp_df = pd.DataFrame({"feature": X.columns, "importance": imp.importances_mean}).sort_values("importance", ascending=False)
display(imp_df.head(10))

plt.figure(figsize=(6,4))
top10 = imp_df.head(10).iloc[::-1]
plt.barh(top10["feature"], top10["importance"])
plt.title("Permutation Importance (Top 10)"); plt.xlabel("Δ(MSE)"); plt.tight_layout(); plt.show()

We evaluate our model by computing train, test, and cross-validation metrics, and visualize residuals to check prediction errors. We then calculate permutation importance to identify which features drive the model most, and display the top contributors using a clear bar plot.
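
To make the idea behind permutation importance concrete, here is a minimal from-scratch sketch in plain Python. It is not scikit-learn's implementation: the toy model, the data, and the helper names are all hypothetical, and the only point is to show that shuffling a column the model relies on raises the error, while shuffling an ignored column changes nothing.

```python
import random

def mse(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)

def toy_model(row):
    # Hypothetical "fitted" model: depends on feature 0 only and ignores feature 1.
    return 3.0 * row[0]

def permutation_importance_sketch(predict, X, y, n_repeats=5, seed=0):
    rng = random.Random(seed)
    baseline = mse(y, [predict(r) for r in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            col = [r[j] for r in X]
            rng.shuffle(col)  # break the link between column j and the target
            X_perm = [r[:j] + [v] + r[j + 1:] for r, v in zip(X, col)]
            increases.append(mse(y, [predict(r) for r in X_perm]) - baseline)
        importances.append(sum(increases) / n_repeats)  # mean increase in MSE
    return importances

# Toy data: 20 rows, two features; the target is produced by the toy model itself.
X_toy = [[float(i), float((7 * i) % 20)] for i in range(20)]
y_toy = [toy_model(r) for r in X_toy]

importances = permutation_importance_sketch(toy_model, X_toy, y_toy)
print(importances)
```

The first importance is positive and the second is exactly zero, which mirrors how the bar chart should be read: the bar length is the average increase in MSE caused by scrambling that feature on the evaluation set.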

def compute_pdp(pipe, Xref: pd.DataFrame, feat: str, grid=40):
    # Sweep one feature across its 5th to 95th percentile range and average the predictions.
    xs = np.linspace(np.percentile(Xref[feat], 5), np.percentile(Xref[feat], 95), grid)
    Xtmp = Xref.copy()
    ys = []
    for v in xs:
        Xtmp[feat] = v
        ys.append(pipe.predict(Xtmp).mean())
    return xs, np.array(ys)

top_feats = imp_df["feature"].head(3).tolist()
plt.figure(figsize=(6,4))
for f in top_feats:
    xs, ys = compute_pdp(pipe, Xte.copy(), f, grid=40)
    plt.plot(xs, ys, label=f)
plt.legend(); plt.xlabel("Feature value"); plt.ylabel("Predicted target"); plt.title("Manual PDP (Top 3)")
plt.tight_layout(); plt.show()

report_obj = {
    "dataset": {"rows": int(df.shape[0]), "cols": int(df.shape[1]-1), "target": "disease_progression"},
    "metrics": {"cv_rmse": float(cv_rmse), "train_rmse": float(rmse_tr),
                "test_rmse": float(rmse_te), "test_mae": float(mae_te), "r2": float(r2_te)},
    "top_importances": imp_df.head(10).to_dict(orient="records")
}
print(json.dumps(report_obj, indent=2))

sys_msg = ("You are a senior data scientist. Return: (1) ≤120-word executive summary, "
           "(2) key risks/assumptions bullets, (3) 5 prioritized next experiments w/ rationale, "
           "(4) quick-win feature engineering ideas as Python pseudocode.")
summary = ask_llm(f"Dataset + metrics + importances:\n{json.dumps(report_obj)}", sys=sys_msg)
print("\n📊 Gemini Executive Brief\n" + "-"*80 + f"\n{summary}\n")

We compute the manual partial dependence for the top three features and visualize how changing each one affects the predictions. We then assemble a compact JSON report of dataset statistics, metrics, and importances, and ask Gemini to generate an executive brief that includes risks, next experiments, and quick-win feature engineering ideas.
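
The manual partial dependence computation boils down to one loop: pin a feature to a grid value in every row, predict, and average. The sketch below reproduces that loop in plain Python with a hypothetical linear toy model, so the expected curve can be checked by hand. The model, rows, and function name are assumptions for illustration only.

```python
def toy_model(row):
    # Hypothetical model used only for illustration: 2 * x0 + x1.
    return 2.0 * row[0] + row[1]

def partial_dependence_sketch(predict, rows, feat_idx, grid):
    curve = []
    for v in grid:
        # Overwrite one feature with the grid value in every row; keep the others as observed.
        preds = [predict(r[:feat_idx] + [v] + r[feat_idx + 1:]) for r in rows]
        curve.append(sum(preds) / len(preds))
    return curve

rows = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0]]   # x1 has mean 3.0
grid = [0.0, 0.5, 1.0]

pdp = partial_dependence_sketch(toy_model, rows, feat_idx=0, grid=grid)
print(pdp)  # each point equals 2 * v + mean(x1)
```

For this toy model the curve is a straight line with slope 2, offset by the mean of the other feature. With a gradient-boosted model the same loop yields a step-like curve, and averaging over rows can hide interactions between features, which is worth keeping in mind when reading the plot.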

SAFE_GLOBALS = {"pd": pd, "np": np}

def run_generated_pandas(code: str, df_local: pd.DataFrame):
    # Reject snippets containing any blocklisted substring before executing them.
    banned = ["__", "import", "open(", "exec(", "eval(", "os.", "sys.", "pd.read", "to_csv", "to_pickle", "to_sql"]
    if any(b in code for b in banned): raise ValueError("Unsafe code rejected.")
    loc = {"df": df_local.copy()}
    exec(code, SAFE_GLOBALS, loc)
    return {k:v for k,v in loc.items() if k not in ("df",)}

def eda_qa(question: str):
    prompt = f"""You are a Python+Pandas analyst. DataFrame `df` columns:
{list(df.columns)}. Write a SHORT pandas snippet (no comments/prints) that computes the answer to:
"{question}". Use only pd/np/df; assign the final result to a variable named `answer`."""
    code = ask_llm(prompt, sys="Return only code. No prose.")
    try:
        out = run_generated_pandas(code, df)
        return code, out.get("answer", None)
    except Exception as e:
        return code, f"[Execution error: {e}]"

questions = [
    "What is the Pearson correlation between BMI and disease_progression?",
    "Show mean target by tertiles of BMI (low/med/high).",
    "Which single feature correlates most with the target (absolute value)?"
]

for q in questions:
    code, ans = eda_qa(q)
    print("\nQ:", q, "\nCode:\n", code, "\nAnswer:\n", ans)

We build a simple sandbox that screens Gemini-generated pandas code against a blocklist before executing it for exploratory data analysis. We then ask natural language questions about correlations and feature relationships, let Gemini write the pandas snippets, and automatically run them to get direct answers from the dataset.
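
One practical wrinkle with this pattern: chat models may wrap code in Markdown fences even when asked for code only, and a fenced reply fails inside `exec`, surfacing as an "[Execution error: ...]" answer. A small helper along the following lines could be applied to the model output before the blocklist check. This is a sketch under that assumption; `strip_code_fences` is a hypothetical name, not part of the tutorial's code or of any library.

```python
import re

# Build the fence marker programmatically so this example nests cleanly in Markdown.
FENCE = "`" * 3

def strip_code_fences(text: str) -> str:
    """Return the body of the first fenced block, or the text unchanged if none is found."""
    pattern = re.compile(FENCE + r"[a-zA-Z0-9_+-]*\s*\n(.*?)" + FENCE, re.DOTALL)
    match = pattern.search(text)
    return (match.group(1) if match else text).strip()

fenced_reply = FENCE + "python\nanswer = df['bmi'].mean()\n" + FENCE
plain_reply = "answer = df['bmi'].mean()"

cleaned_fenced = strip_code_fences(fenced_reply)
cleaned_plain = strip_code_fences(plain_reply)
print(cleaned_fenced)
```

Note that the substring blocklist is a convenience for a notebook demo rather than a security boundary: it can reject harmless code (any snippet containing the text "import") and it does not isolate the process, so generated code is best run only in a disposable environment.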

critique = ask_llm(
    f"""Metrics: {report_obj['metrics']}
Top importances: {report_obj['top_importances']}
Identify risks around leakage, overfitting, calibration, OOD robustness, and fairness (even proxy-only).
Propose quick checks (concise Python sketches)."""
)
print("\n🧪 Gemini Risk & Robustness Review\n" + "-"*80 + f"\n{critique}\n")

def what_if(pipe, Xref: pd.DataFrame, feat: str, delta: float = 0.05):
    # Compare predictions at the all-median row with and without a shift in one feature.
    x0 = Xref.median(numeric_only=True).to_dict()
    x1, x2 = x0.copy(), x0.copy()
    if feat not in x1: return np.nan
    x2[feat] = x1[feat] + delta
    X1 = pd.DataFrame([x1], columns=X.columns)
    X2 = pd.DataFrame([x2], columns=X.columns)
    return float(pipe.predict(X2)[0] - pipe.predict(X1)[0])

for f in top_feats:
    print(f"Estimated Δtarget if {f} increases by +0.05 ≈ {what_if(pipe, Xte, f, 0.05):.2f}")

print("\n✅ Done: Train → Explain → Query with Gemini → Review risks → What-if analysis. "
      "Swap the dataset or tweak model params to extend this notebook.")

We ask Gemini to review our model for risks like leakage, overfitting, and fairness, and get quick Python checks as suggestions. We then run simple "what-if" analyses to see how small changes in top features affect predictions, helping us interpret the model's behavior more clearly.

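The what-if step is a finite difference around a single reference point: the row of per-feature medians. The plain-Python sketch below shows the same calculation on a hypothetical nonlinear toy model; the model, data, and function name are assumptions for illustration. It also helps to remember that `load_diabetes` returns features that are already mean-centered and scaled by default, so a shift of +0.05 is in scaled units rather than raw clinical units.

```python
from statistics import median

def toy_model(row):
    # Hypothetical nonlinear model: the effect of x0 depends on the starting point.
    return row["x0"] ** 2 + row["x1"]

def what_if_sketch(predict, rows, feat, delta):
    # Reference point: the median of every feature across the rows.
    baseline = {k: median(r[k] for r in rows) for k in rows[0]}
    shifted = dict(baseline)
    shifted[feat] = baseline[feat] + delta
    return predict(shifted) - predict(baseline)

rows = [
    {"x0": 1.0, "x1": 10.0},
    {"x0": 2.0, "x1": 20.0},
    {"x0": 3.0, "x1": 30.0},
]

# Median row is x0 = 2.0, x1 = 20.0, so the effect is (3**2 + 20) - (2**2 + 20).
effect = what_if_sketch(toy_model, rows, "x0", delta=1.0)
print(effect)
```

Unlike a partial dependence curve, which averages over all rows, this estimate is local to the median row, so for a nonlinear model the same shift can produce a different change elsewhere in the feature space.
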
In conclusion, we see how seamlessly we can combine machine learning pipelines with Gemini's reasoning to make data science more interactive and insightful. We train, evaluate, and interpret a model, then ask Gemini to summarize findings, suggest improvements, and critique risks. Through this journey, we establish a workflow that allows us to achieve both predictive performance and interpretability, while also benefiting from having an AI collaborator in our data analysis process.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.