In this tutorial, we explore advanced applications of Stable-Baselines3 in reinforcement learning. We design a fully functional, custom trading environment, integrate multiple algorithms such as PPO and A2C, and develop our own training callbacks for performance monitoring. As we progress, we train, evaluate, and visualize agent performance to compare algorithmic efficiency, learning curves, and decision strategies, all within a streamlined workflow that runs entirely offline.

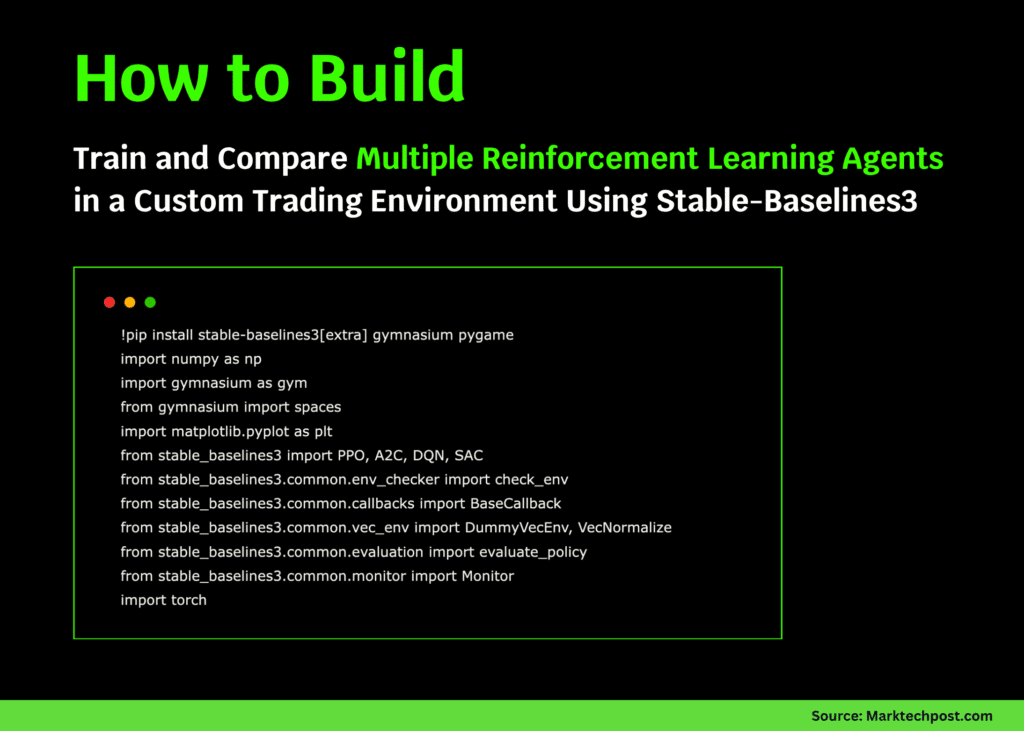

!pip install stable-baselines3[extra] gymnasium pygame

import numpy as np
import gymnasium as gym
from gymnasium import spaces
import matplotlib.pyplot as plt
from stable_baselines3 import PPO, A2C, DQN, SAC
from stable_baselines3.common.env_checker import check_env
from stable_baselines3.common.callbacks import BaseCallback
from stable_baselines3.common.vec_env import DummyVecEnv, VecNormalize
from stable_baselines3.common.evaluation import evaluate_policy
from stable_baselines3.common.monitor import Monitor
import torch

class TradingEnv(gym.Env):
    def __init__(self, max_steps=200):
        super().__init__()
        self.max_steps = max_steps
        self.action_space = spaces.Discrete(3)  # 0 = hold, 1 = buy, 2 = sell
        self.observation_space = spaces.Box(low=-np.inf, high=np.inf, shape=(5,), dtype=np.float32)
        self.reset()

    def reset(self, seed=None, options=None):
        super().reset(seed=seed)
        self.current_step = 0
        self.balance = 1000.0
        self.shares = 0
        self.price = 100.0
        self.price_history = [self.price]
        return self._get_obs(), {}

    def _get_obs(self):
        # Mean of the last five prices once enough history exists, else the current price
        price_trend = np.mean(self.price_history[-5:]) if len(self.price_history) >= 5 else self.price
        return np.array([
            self.balance / 1000.0,
            self.shares / 10.0,
            self.price / 100.0,
            price_trend / 100.0,
            self.current_step / self.max_steps
        ], dtype=np.float32)

    def step(self, action):
        self.current_step += 1
        # Slow sinusoidal drift plus Gaussian noise, clipped to the range [50, 200]
        trend = 0.001 * np.sin(self.current_step / 20)
        self.price *= (1 + trend + np.random.normal(0, 0.02))
        self.price = float(np.clip(self.price, 50, 200))
        self.price_history.append(self.price)
        reward = 0
        if action == 1 and self.balance >= self.price:
            # Buy as many whole shares as the balance allows
            shares_to_buy = int(self.balance / self.price)
            cost = shares_to_buy * self.price
            self.balance -= cost
            self.shares += shares_to_buy
            reward = -0.01
        elif action == 2 and self.shares > 0:
            # Sell the entire position
            revenue = self.shares * self.price
            self.balance += revenue
            self.shares = 0
            reward = 0.01
        portfolio_value = self.balance + self.shares * self.price
        reward += (portfolio_value - 1000) / 1000
        terminated = self.current_step >= self.max_steps
        truncated = False
        return self._get_obs(), reward, terminated, truncated, {"portfolio": portfolio_value}

    def render(self):
        print(f"Step: {self.current_step}, Balance: ${self.balance:.2f}, Shares: {self.shares}, Price: ${self.price:.2f}")

We define our custom TradingEnv, where an agent learns to make buy, sell, or hold decisions based on simulated price movements. We define the observation and action spaces, implement the reward structure, and ensure our environment reflects a realistic market scenario with fluctuating trends and noise.
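To make the reward structure concrete, here is a minimal pure-Python sketch of the bookkeeping performed in `step`, with the price update left out. The helper name `apply_action` is hypothetical and not part of the tutorial's code; the constants (the 0.01 trade adjustments and the 1000 starting balance) are taken from the environment above.

```python
def apply_action(balance, shares, price, action, initial_balance=1000.0):
    """Mirror the trade and reward logic of TradingEnv.step (hypothetical helper).

    Actions: 0 = hold, 1 = buy as many whole shares as possible, 2 = sell all.
    """
    reward = 0.0
    if action == 1 and balance >= price:
        shares_to_buy = int(balance / price)
        balance -= shares_to_buy * price
        shares += shares_to_buy
        reward = -0.01  # small penalty attached to buying
    elif action == 2 and shares > 0:
        balance += shares * price
        shares = 0
        reward = 0.01  # small bonus attached to selling
    portfolio_value = balance + shares * price
    # Profit relative to the starting balance is added on every step.
    reward += (portfolio_value - initial_balance) / initial_balance
    return balance, shares, reward


# Buy at a price of 100, then sell after the price has risen to 110.
balance, shares, buy_reward = apply_action(1000.0, 0, 100.0, action=1)
balance, shares, sell_reward = apply_action(balance, shares, 110.0, action=2)
print(balance, shares, round(buy_reward, 4), round(sell_reward, 4))
```

Because the profit term is measured against the initial balance on every step, an episode's total reward accumulates the running profit at each step rather than only the final one.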

class ProgressCallback(BaseCallback):
    def __init__(self, check_freq=1000, verbose=1):
        super().__init__(verbose)
        self.check_freq = check_freq
        self.rewards = []

    def _on_step(self):
        # Every check_freq calls, record the mean reward of recently finished episodes
        if self.n_calls % self.check_freq == 0 and len(self.model.ep_info_buffer) > 0:
            mean_reward = np.mean([ep_info["r"] for ep_info in self.model.ep_info_buffer])
            self.rewards.append(mean_reward)
            if self.verbose:
                print(f"Steps: {self.n_calls}, Mean Reward: {mean_reward:.2f}")
        return True

print("=" * 60)
print("Setting up custom trading environment...")
env = TradingEnv()
check_env(env, warn=True)
print("✓ Environment validation passed!")
env = Monitor(env)
vec_env = DummyVecEnv([lambda: env])
vec_env = VecNormalize(vec_env, norm_obs=True, norm_reward=True)

Here, we create a ProgressCallback to monitor training progress and record mean rewards at regular intervals. We then validate our custom environment using Stable-Baselines3's built-in checker, wrap it for monitoring and normalization, and prepare it for training across multiple algorithms.
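As a rough illustration of what observation normalization does, the sketch below keeps a running mean and variance with Welford's algorithm and rescales each new value. This is a simplified, scalar stand-in written for this article: the class name `RunningNormalizer` and its `epsilon` and `clip` defaults are assumptions, and it is not the code `VecNormalize` uses internally.

```python
import math


class RunningNormalizer:
    """Scalar running mean/variance normalizer (illustrative, hypothetical)."""

    def __init__(self, epsilon=1e-8, clip=10.0):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean
        self.epsilon = epsilon
        self.clip = clip

    def update(self, x):
        # Welford's online update for the mean and the sum of squared deviations.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def variance(self):
        # Population variance; fall back to 1.0 before any data has been seen.
        return self.m2 / self.count if self.count > 0 else 1.0

    def normalize(self, x):
        z = (x - self.mean) / math.sqrt(self.variance + self.epsilon)
        return max(-self.clip, min(self.clip, z))


normalizer = RunningNormalizer()
for price in [100.0, 102.0, 98.0, 101.0, 99.0]:
    normalizer.update(price)

print(normalizer.mean, normalizer.variance, normalizer.normalize(104.0))
```

Since statistics of this kind are learned during training, they need to be stored alongside the model, which is why the tutorial saves the wrapper with `vec_env.save("vec_normalize.pkl")`.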

print("\n" + "=" * 60)
print("Training multiple RL algorithms...")
algorithms = {
    "PPO": PPO("MlpPolicy", vec_env, verbose=0, learning_rate=3e-4, n_steps=2048),
    "A2C": A2C("MlpPolicy", vec_env, verbose=0, learning_rate=7e-4),
}
results = {}
for name, model in algorithms.items():
    print(f"\nTraining {name}...")
    callback = ProgressCallback(check_freq=2000, verbose=0)
    model.learn(total_timesteps=50000, callback=callback, progress_bar=True)
    results[name] = {"model": model, "rewards": callback.rewards}
    print(f"✓ {name} training complete!")

print("\n" + "=" * 60)
print("Evaluating trained models...")
eval_env = Monitor(TradingEnv())  # not wrapped in VecNormalize, so observations here are raw, unlike during training
for name, data in results.items():
    mean_reward, std_reward = evaluate_policy(data["model"], eval_env, n_eval_episodes=20, deterministic=True)
    results[name]["eval_mean"] = mean_reward
    results[name]["eval_std"] = std_reward
    print(f"{name}: Mean Reward = {mean_reward:.2f} +/- {std_reward:.2f}")

We train and evaluate two different reinforcement learning algorithms, PPO and A2C, on our trading environment. We log their performance metrics, capture mean rewards, and compare how efficiently each agent learns profitable trading strategies through consistent exploration and exploitation.
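The evaluation summary boils down to a mean and a standard deviation over per-episode returns. The sketch below reproduces that arithmetic with the standard library on made-up numbers. The function name `summarize_returns` and the sample returns are hypothetical, and the choice of the population standard deviation is an assumption made to match NumPy's default `np.std` behavior.

```python
import statistics


def summarize_returns(episode_returns):
    """Return (mean, population standard deviation) of a list of episode returns."""
    mean_return = statistics.fmean(episode_returns)
    std_return = statistics.pstdev(episode_returns)
    return mean_return, std_return


# Made-up episode returns, for illustration only.
sample_returns = [12.0, 8.0, 10.0, 14.0, 6.0]
mean_return, std_return = summarize_returns(sample_returns)
print(f"Mean Reward = {mean_return:.2f} +/- {std_return:.2f}")
```

When the spread is large relative to the gap between two agents' means, 20 episodes may not be enough to rank them reliably.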

print("\n" + "=" * 60)
print("Generating visualizations...")
fig, axes = plt.subplots(2, 2, figsize=(14, 10))

ax = axes[0, 0]
for name, data in results.items():
    ax.plot(data["rewards"], label=name, linewidth=2)
ax.set_xlabel("Training Checkpoints (x2000 steps)")
ax.set_ylabel("Mean Episode Reward")
ax.set_title("Training Progress Comparison")
ax.legend()
ax.grid(True, alpha=0.3)

ax = axes[0, 1]
names = list(results.keys())
means = [results[n]["eval_mean"] for n in names]
stds = [results[n]["eval_std"] for n in names]
ax.bar(names, means, yerr=stds, capsize=10, alpha=0.7, color=['#1f77b4', '#ff7f0e'])
ax.set_ylabel("Mean Reward")
ax.set_title("Evaluation Performance (20 episodes)")
ax.grid(True, alpha=0.3, axis="y")

ax = axes[1, 0]
best_model = max(results.items(), key=lambda x: x[1]["eval_mean"])[1]["model"]
obs = eval_env.reset()[0]
portfolio_values = [1000]
for _ in range(200):
    action, _ = best_model.predict(obs, deterministic=True)
    obs, reward, done, truncated, info = eval_env.step(action)
    portfolio_values.append(info.get("portfolio", portfolio_values[-1]))
    if done or truncated:
        break
ax.plot(portfolio_values, linewidth=2, color="green")
ax.axhline(y=1000, color="red", linestyle="--", label="Initial Value")
ax.set_xlabel("Steps")
ax.set_ylabel("Portfolio Value ($)")
ax.set_title(f"Best Model ({max(results.items(), key=lambda x: x[1]['eval_mean'])[0]}) Episode")
ax.legend()
ax.grid(True, alpha=0.3)

We visualize our training results by plotting learning curves, evaluation scores, and portfolio trajectories for the best-performing model. We also analyze how the agent's actions translate into portfolio growth, which helps us interpret model behavior and assess decision consistency across simulated trading sessions.
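The learning-curve panel plots one point per callback checkpoint, so its x-axis is an index rather than a step count. Below is a small sketch of how those indices map back to environment steps, assuming the `check_freq=2000` and `total_timesteps=50000` values used in training and a single environment, so that one callback call corresponds to one step. The helper name `checkpoint_steps` is hypothetical.

```python
def checkpoint_steps(total_timesteps, check_freq):
    """Step counts at which a callback firing every `check_freq` calls records a value."""
    return list(range(check_freq, total_timesteps + 1, check_freq))


steps = checkpoint_steps(total_timesteps=50_000, check_freq=2_000)
print(len(steps), steps[0], steps[-1])
```

Passing such a list as the x-values to `ax.plot` would label the curve in environment steps instead of checkpoint indices.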

ax = axes[1, 1]
obs = eval_env.reset()[0]
actions = []
for _ in range(200):
    action, _ = best_model.predict(obs, deterministic=True)
    actions.append(int(action))  # store plain ints so the counts below are reliable
    obs, _, done, truncated, _ = eval_env.step(action)
    if done or truncated:
        break
action_names = ['Hold', 'Buy', 'Sell']
action_counts = [actions.count(i) for i in range(3)]
ax.pie(action_counts, labels=action_names, autopct="%1.1f%%", startangle=90, colors=['#ff9999', '#66b3ff', '#99ff99'])
ax.set_title("Action Distribution (Best Model)")
plt.tight_layout()
plt.savefig('sb3_advanced_results.png', dpi=150, bbox_inches="tight")
print("✓ Visualizations saved as 'sb3_advanced_results.png'")
plt.show()

print("\n" + "=" * 60)
print("Saving and loading models...")
best_name = max(results.items(), key=lambda x: x[1]["eval_mean"])[0]
best_model = results[best_name]["model"]
best_model.save(f"best_trading_model_{best_name}")
vec_env.save("vec_normalize.pkl")
loaded_model = type(best_model).load(f"best_trading_model_{best_name}")  # load with the winning algorithm's class, which may be A2C rather than PPO
print(f"✓ Best model ({best_name}) saved and loaded successfully!")

print("\n" + "=" * 60)
print("TUTORIAL COMPLETE!")
print(f"Best performing algorithm: {best_name}")
print(f"Final evaluation score: {results[best_name]['eval_mean']:.2f}")
print("=" * 60)

Finally, we visualize the action distribution of the best agent to understand its trading tendencies and save the top-performing model for reuse. We demonstrate model loading, confirm the best algorithm, and complete the tutorial with a clear summary of performance results and insights gained.
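The pie chart is driven by a simple tally of how often each action was chosen. The sketch below shows that tally on a made-up action sequence using `collections.Counter`. The function name `action_shares` and the sample sequence are hypothetical.

```python
from collections import Counter

ACTION_NAMES = ["Hold", "Buy", "Sell"]  # list index = action id used by the environment


def action_shares(actions):
    """Map each action name to the fraction of steps on which it was chosen."""
    counts = Counter(int(a) for a in actions)
    total = len(actions)
    return {
        name: (counts.get(i, 0) / total if total else 0.0)
        for i, name in enumerate(ACTION_NAMES)
    }


# Made-up action sequence, for illustration only.
sample_actions = [0, 0, 1, 0, 2, 0, 1, 2, 0, 0]
shares_by_action = action_shares(sample_actions)
print(shares_by_action)
```

Converting with `int(a)` matters when the actions come from `model.predict`, which returns NumPy values rather than plain Python integers.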

In conclusion, we have created, trained, and compared multiple reinforcement learning agents in a realistic trading simulation using Stable-Baselines3. We observe how each algorithm adapts to market dynamics, visualize their learning trends, and identify the most profitable strategy. This hands-on implementation strengthens our understanding of RL pipelines and demonstrates how customizable, efficient, and scalable Stable-Baselines3 can be for complex, domain-specific tasks such as financial modeling.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.