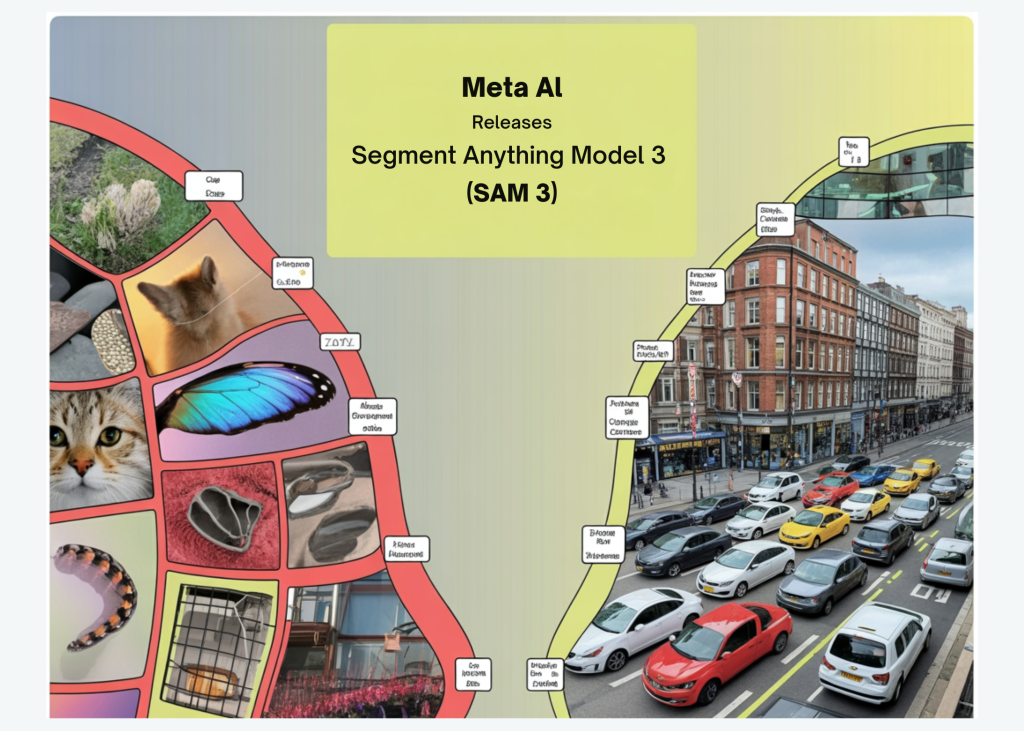

How do you reliably find, segment and track every instance of any concept across large image and video collections using simple prompts? The Meta AI team has just released Meta Segment Anything Model 3, or SAM 3, an open-sourced unified foundation model for promptable segmentation in images and videos that operates directly on visual concepts instead of only pixels. It detects, segments and tracks objects from both text prompts and visual prompts such as points, boxes and masks. Compared with SAM 2, SAM 3 can exhaustively find all instances of an open vocabulary concept, for example every ‘red baseball cap’ in a long video, using a single model.

From Visual Prompts to Promptable Concept Segmentation

Earlier SAM models focused on interactive segmentation. A user clicked or drew a box and the model produced a single mask. That workflow did not scale to tasks where a system must find all instances of a concept across large image or video collections. SAM 3 formalizes Promptable Concept Segmentation (PCS), which takes concept prompts and returns instance masks and stable identities for every matching object in images and videos.

Concept prompts combine short noun phrases with visual exemplars. The model supports detailed phrases such as ‘yellow school bus’ or ‘player in red’ and can also use exemplar crops as positive or negative examples. Text prompts describe the concept, while exemplar crops help disambiguate fine grained visual differences. SAM 3 can also be used as a vision tool inside multimodal large language models that generate longer referring expressions and then call SAM 3 with distilled concept prompts.
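To make the shape of a concept prompt concrete, here is a minimal sketch of how a noun phrase and its positive and negative exemplar boxes could be bundled together. The class and field names (`ConceptPrompt`, `Exemplar`, `noun_phrase`) are hypothetical and chosen for illustration only; they are not taken from the SAM 3 codebase.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical box format: (x0, y0, x1, y1) in pixel coordinates.
Box = Tuple[float, float, float, float]


@dataclass
class Exemplar:
    """An exemplar crop, marked as a positive or negative example."""
    box: Box
    positive: bool = True


@dataclass
class ConceptPrompt:
    """A short noun phrase plus optional visual exemplars (illustrative only)."""
    noun_phrase: str
    exemplars: List[Exemplar] = field(default_factory=list)

    def positives(self) -> List[Box]:
        return [e.box for e in self.exemplars if e.positive]

    def negatives(self) -> List[Box]:
        return [e.box for e in self.exemplars if not e.positive]


# Example: the phrase describes the concept, the crops disambiguate it.
prompt = ConceptPrompt(
    noun_phrase="yellow school bus",
    exemplars=[
        Exemplar(box=(10.0, 20.0, 200.0, 180.0)),                    # a matching bus
        Exemplar(box=(300.0, 40.0, 420.0, 160.0), positive=False),   # a look-alike to exclude
    ],
)
```

Keeping the phrase and the exemplars in one object mirrors the idea that text carries the concept while crops refine it.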

Architecture, Presence Token and Tracking Design

The SAM 3 model has 848M parameters and consists of a detector and a tracker that share a single vision encoder. The detector is a DETR based architecture that is conditioned on three inputs: text prompts, geometric prompts and image exemplars. This separates the core image representation from the prompting interfaces and lets the same backbone serve many segmentation tasks.

A key change in SAM 3 is the presence token. This component predicts whether each candidate box or mask actually corresponds to the requested concept. It is especially important when the text prompts describe related entities, such as ‘a player in white’ and ‘a player in red’. The presence token reduces confusion between such prompts and improves open vocabulary precision. Recognition, meaning classifying a candidate as the concept, is decoupled from localization, meaning predicting the box and mask shape.
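One way to picture the decoupling of recognition from localization is to combine a presence probability with per-candidate scores. The sketch below assumes a single presence logit per prompt and a multiplicative combination with each candidate's own logit; both the combination rule and the function name `score_candidates` are assumptions made for illustration, not a description of SAM 3's actual head.

```python
import math
from typing import List, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def score_candidates(
    presence_logit: float,
    candidate_logits: List[float],
    threshold: float = 0.5,
) -> Tuple[List[float], List[int]]:
    """Combine recognition (presence) with per-candidate localization scores.

    Assumption: final score = P(concept present) * P(candidate is a good match).
    Returns the final scores and the indices of candidates kept at `threshold`.
    """
    presence = sigmoid(presence_logit)
    scores = [presence * sigmoid(logit) for logit in candidate_logits]
    keep = [i for i, s in enumerate(scores) if s >= threshold]
    return scores, keep


# Concept likely present: the confident candidate survives, the weak one does not.
_, kept_present = score_candidates(4.0, [3.0, -2.0])

# Concept likely absent: even a well-localized candidate is suppressed.
_, kept_absent = score_candidates(-4.0, [3.0, -2.0])
```

Under this assumption, a low presence score suppresses every candidate at once, which is how a prompt like ‘a player in red’ could avoid firing on players in white even when their boxes are well localized.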

For video, SAM 3 reuses the transformer encoder decoder tracker from SAM 2, but connects it tightly to the new detector. The tracker propagates instance identities across frames and supports interactive refinement. The decoupled detector and tracker design minimizes task interference, scales cleanly with more data and concepts, and still exposes an interactive interface similar to earlier Segment Anything models for point based refinement.
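To illustrate what propagating instance identities across frames means, the sketch below uses a simple greedy IoU matcher between the previous frame's boxes and the current detections. This is a deliberately simplified stand-in, not the SAM 2 style tracker that SAM 3 uses; the function names and the `min_iou` threshold are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(
    tracks: Dict[int, Box],
    detections: List[Box],
    next_id: int,
    min_iou: float = 0.3,
) -> Tuple[Dict[int, Box], int]:
    """Give each detection the identity of its best-overlapping previous track.

    Detections that overlap no remaining track by at least `min_iou` start a new identity.
    """
    updated: Dict[int, Box] = {}
    unmatched = set(tracks)
    for det in detections:
        best_id, best_overlap = None, min_iou
        for track_id in sorted(unmatched):
            overlap = iou(tracks[track_id], det)
            if overlap >= best_overlap:
                best_id, best_overlap = track_id, overlap
        if best_id is None:
            best_id, next_id = next_id, next_id + 1
        else:
            unmatched.discard(best_id)
        updated[best_id] = det
    return updated, next_id


# Frame 1: two new objects receive identities 0 and 1.
tracks, next_id = associate({}, [(0, 0, 10, 10), (50, 50, 60, 60)], next_id=0)

# Frame 2: both objects moved slightly and a third one appeared.
tracks, next_id = associate(
    tracks, [(51, 50, 61, 60), (1, 0, 11, 10), (100, 100, 110, 110)], next_id
)
```

Because the detector and the association step are separate functions here, either one can be swapped without touching the other, which is the spirit of the decoupled design described above.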

SA-Co Dataset and Benchmark Suite

To train and evaluate Promptable Concept Segmentation (PCS), Meta introduces the SA-Co family of datasets and benchmarks. The SA-Co benchmark contains 270K unique concepts, which is more than 50 times the number of concepts in previous open vocabulary segmentation benchmarks. Every image or video is paired with noun phrases and dense instance masks for all objects that match each phrase, including negative prompts where no objects should match.
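As a rough illustration of what pairing noun phrases with instance masks and negative prompts could look like, the sketch below uses a plain dictionary per image. The record layout, the field names and the mask placeholders are all hypothetical and do not reflect the actual SA-Co file format.

```python
from typing import Dict, List

# Hypothetical record: each phrase maps to the masks of every matching instance.
# An empty list marks a negative prompt, where no object should match.
annotation = {
    "image_id": "example_0001",  # made-up identifier
    "phrases": {
        "yellow school bus": ["mask_a", "mask_b"],
        "player in red": ["mask_c"],
        "red baseball cap": [],  # negative prompt for this image
    },
}


def negative_phrases(record: dict) -> List[str]:
    """Phrases for which the ground truth contains no instances."""
    return sorted(p for p, masks in record["phrases"].items() if not masks)


def false_positives_on_negatives(
    record: dict, predictions: Dict[str, List[str]]
) -> Dict[str, int]:
    """Count predicted instances for phrases that should return nothing."""
    return {
        phrase: len(predictions[phrase])
        for phrase in negative_phrases(record)
        if predictions.get(phrase)
    }


# A model that hallucinates one cap on this image is penalized on the negative prompt.
predictions = {"red baseball cap": ["pred_1"], "player in red": ["pred_2"]}
errors = false_positives_on_negatives(annotation, predictions)
```

Negative prompts matter because a model that always returns something for every phrase would look fine on positive-only data and still be unusable in practice.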

The associated data engine has automatically annotated more than 4M unique concepts, which, according to Meta, makes SA-Co the largest high quality open vocabulary segmentation corpus. The engine combines large ontologies with automated checks and supports hard negative mining, for example phrases that are visually similar but semantically distinct. This scale is essential for learning a model that can respond robustly to diverse text prompts in real world scenes.

Image and Video Performance

On the SA-Co image benchmarks, SAM 3 reaches between 75 % and 80 % of human performance measured with the cgF1 metric. Competing systems such as OWLv2, DINO-X and Gemini 2.5 lag significantly behind. For example, on SA-Co Gold box detection, SAM 3 reports cgF1 of 55.7, while OWLv2 reaches 24.5, DINO-X reaches 22.5 and Gemini 2.5 reaches 14.4. This shows that a single unified model can outperform specialized detectors on open vocabulary segmentation.
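The size of the gap on SA-Co Gold box detection is easier to read as a ratio. The short snippet below only does arithmetic on the four cgF1 values quoted above; the variable names are ours.

```python
# cgF1 on SA-Co Gold box detection, as reported in the text above.
cgf1 = {"SAM 3": 55.7, "OWLv2": 24.5, "DINO-X": 22.5, "Gemini 2.5": 14.4}

# How many times higher SAM 3 scores than each competing system.
ratios = {
    name: round(cgf1["SAM 3"] / score, 2)
    for name, score in cgf1.items()
    if name != "SAM 3"
}

for name, ratio in ratios.items():
    print(f"SAM 3 vs {name}: {ratio}x")
# SAM 3 vs OWLv2: 2.27x
# SAM 3 vs DINO-X: 2.48x
# SAM 3 vs Gemini 2.5: 3.87x
```

On these numbers SAM 3 scores more than twice as high as OWLv2 and DINO-X, and close to four times as high as Gemini 2.5.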

In videos, SAM 3 is evaluated on SA-V, YT-Temporal 1B, SmartGlasses, LVVIS and BURST. On SA-V test it reaches 30.3 cgF1 and 58.0 pHOTA. On YT-Temporal 1B test it reaches 50.8 cgF1 and 69.9 pHOTA. On SmartGlasses test it reaches 36.4 cgF1 and 63.6 pHOTA, while on LVVIS and BURST it reaches 36.3 mAP and 44.5 HOTA respectively. These results confirm that a single architecture can handle both image PCS and long horizon video tracking.

SAM 3 as a Data-Centric Benchmarking Opportunity for Annotation Platforms

For data-centric platforms like Encord, SAM 3 is a natural next step after their existing integrations of SAM and SAM 2 for auto-labeling and video tracking, which already let customers auto-annotate more than 90 % of images with high mask accuracy using foundation models inside Encord’s QA driven workflows. Similar platforms such as CVAT, SuperAnnotate and Picsellia are standardizing on Segment Anything style models for zero shot labeling, model in the loop annotation and MLOps pipelines. SAM 3’s promptable concept segmentation and unified image video tracking create clear editorial and benchmarking opportunities here, for example, quantifying label cost reductions and quality gains when Encord like stacks move from SAM 2 to SAM 3 in dense video datasets or multimodal settings.

Key Takeaways

- SAM 3 unifies image and video segmentation into a single 848M parameter foundation model that supports text prompts, exemplars, points and boxes for Promptable Concept Segmentation.

- The SA-Co data engine and benchmark introduce about 270K evaluated concepts and over 4M automatically annotated concepts, making SAM 3’s training and evaluation stack one of the largest open vocabulary segmentation resources available.

- SAM 3 significantly outperforms prior open vocabulary systems, reaching around 75 to 80 % of human cgF1 on SA-Co and more than doubling OWLv2 and DINO-X on key SA-Co Gold detection metrics.

- The architecture decouples a DETR based detector from a SAM 2 style video tracker with a presence head, enabling stable instance tracking across long videos while keeping interactive SAM style refinement.

SAM 3 advances Segment Anything from Promptable Visual Segmentation to Promptable Concept Segmentation in a single 848M parameter model that unifies image and video. It leverages the SA-Co benchmark with about 270K evaluated concepts and over 4M automatically annotated concepts to approximate 75 to 80 % of human performance on cgF1. The decoupled DETR based detector and SAM 2 style tracker with a presence head make SAM 3 a practical vision foundation model for agents and products. Overall, SAM 3 is now a reference point for open vocabulary segmentation at production scale.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.