Instagram head Adam Mosseri said AI will change who can be creative, as the new tools and technology will give people who couldn’t be creators before the ability to produce content at a certain quality and scale. However, he also admitted that bad actors will use the technology for “nefarious purposes” and that kids growing up today will have to learn that you can’t believe something just because you saw a video of it.

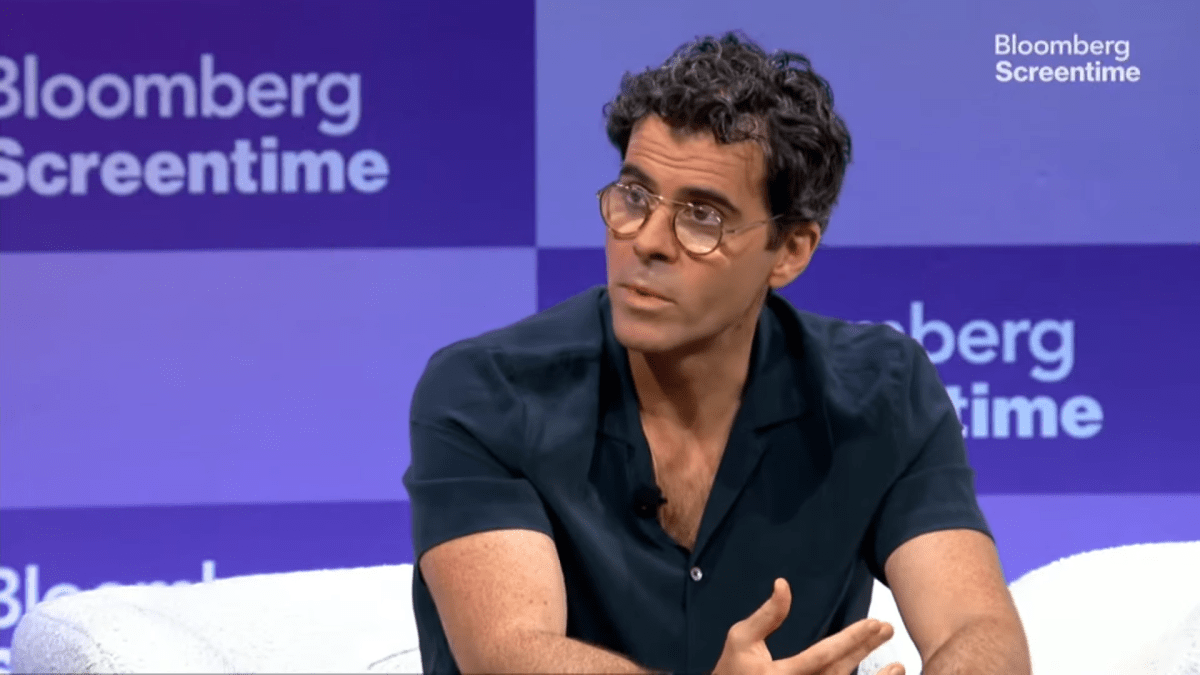

The Meta executive shared his thoughts on how AI is affecting the creator industry at the Bloomberg Screentime conference this week. At the start of the interview, Mosseri was asked to address recent comments from creator MrBeast (Jimmy Donaldson). On Threads, MrBeast had suggested that AI-generated videos could soon threaten creators’ livelihoods and said it was “scary times” for the industry.

Mosseri pushed back a bit on that idea, noting that most creators won’t be using AI technology to reproduce what MrBeast has historically done, with his huge sets and elaborate productions; instead, it will allow creators to do more and make better content.

“If you take a big step back, what the internet did, among other things, was allow almost anyone to become a publisher by reducing the cost of distributing content to essentially zero,” Mosseri explained. “And what some of these generative AI models look like they’re going to do is they’re going to reduce the cost of producing content to basically zero,” he said. (This, of course, doesn’t reflect the true financial, environmental, and human costs of using AI, which are substantial.)

In addition, the exec suggested that there’s already a lot of “hybrid” content on today’s big social platforms, where creators are using AI in their workflow but not producing fully synthetic content. For instance, they might be using AI tools for color corrections or filters. Going forward, Mosseri said, the line between what’s real and what’s AI generated will become even more blurred.

“It’s going to be a little bit less like, what’s organic content and what’s AI synthetic content, and what the percentages are. I think there’s gonna be actually more in the middle than pure synthetic content for a while,” he said.

As things change, Mosseri said Meta has some responsibility to do more in terms of identifying what content is AI generated. But he also noted that the way the company had gone about this wasn’t the “right focus” and was practically “a fool’s errand.” He was referring to how Meta had initially tried to label AI content automatically, which led to a situation where it was labeling real content as AI, because AI tools, including those from Adobe, were used as part of the process.

The executive said that the labeling system needs more work but that Meta should also provide more context that helps people make informed decisions.

While he didn’t elaborate on what that newly added context would be, he may have been thinking about Meta’s Community Notes feature, the crowdsourced fact-checking system launched in the U.S. this year and modeled on the one X uses. Instead of turning to third-party fact-checkers, Community Notes and similar systems mark content with corrections or additional context when users who often share opposing opinions agree that a fact-check or further explanation is needed. It’s likely that Meta could be weighing the use of such a system for flagging when something is AI generated but hasn’t been labeled as such.

Rather than saying it was fully the platform’s responsibility to label AI content, Mosseri suggested that society itself will have to change.

“My kids are young. They’re nine, seven, and five. I need them to understand, as they grow up and they get exposed to the internet, that just because they’re seeing a video of something doesn’t mean it actually happened,” he explained. “When I grew up, and I saw a video, I could assume that that was a capture of a moment that happened in the real world,” Mosseri continued.

“What they’re going to … need to think about who’s saying it, who’s sharing it, in this case, and what are their incentives, and why might they be saying it,” he concluded. (That seems like a heavy mental load for young children, but alas.)

In the discussion, Mosseri also touched on other topics about the future of Instagram beyond AI, including its plans for a dedicated TV app, its newer focus on Reels and DMs as its core features (which Mosseri said simply reflected user trends), and how TikTok’s changing ownership in the U.S. will affect the competitive landscape.

On the latter, he said that, ultimately, it’s better to have competition, as TikTok’s U.S. presence has forced Instagram to “do better work.” As for the TikTok deal itself, Mosseri said it’s hard to parse, but it seems like how the app has been built won’t meaningfully change.

“It’s the same app, the same ranking system, the same creators that you’re following, the same people. It’s all sort of seamless,” Mosseri said of the “new” TikTok U.S. operation. “It doesn’t seem like it’s a major change in terms of incentives,” he added.