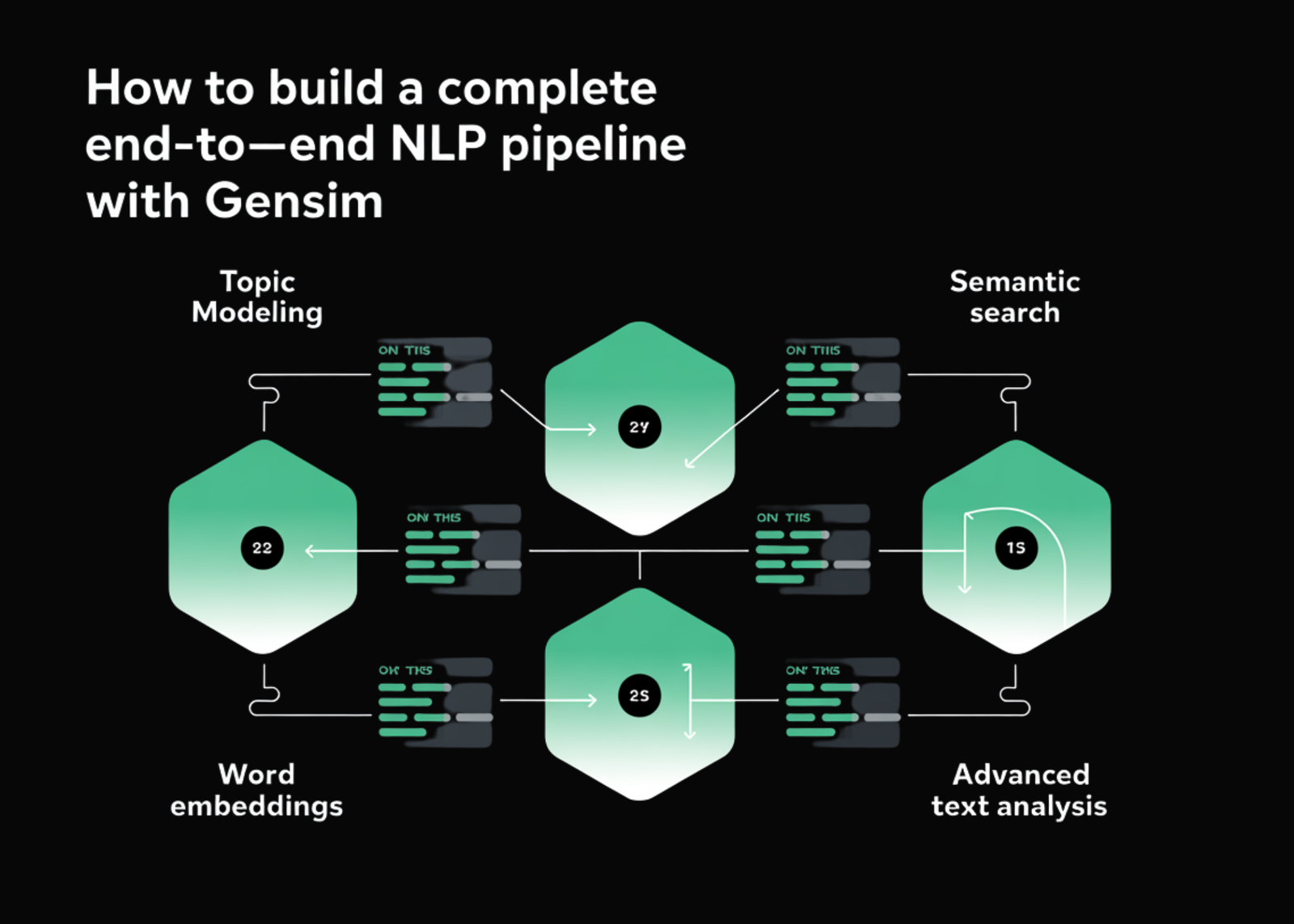

In this tutorial, we present a complete end-to-end Natural Language Processing (NLP) pipeline built with Gensim and supporting libraries, designed to run seamlessly in Google Colab. It integrates several core techniques in modern NLP, including preprocessing, topic modeling with Latent Dirichlet Allocation (LDA), word embeddings with Word2Vec, TF-IDF-based similarity analysis, and semantic search. The pipeline not only demonstrates how to train and evaluate these models but also showcases practical visualizations, advanced topic analysis, and document classification workflows. By combining statistical methods with machine learning approaches, the tutorial provides a comprehensive framework for understanding and experimenting with text data at scale.

!pip install --upgrade scipy==1.11.4
!pip install gensim==4.3.2 nltk wordcloud matplotlib seaborn pandas numpy scikit-learn
!pip install --upgrade setuptools

print("Please restart runtime after installation!")
print("Go to Runtime > Restart runtime, then run the next cell")

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from wordcloud import WordCloud
import warnings
warnings.filterwarnings('ignore')

from gensim import corpora, models, similarities
from gensim.models import Word2Vec, LdaModel, TfidfModel, CoherenceModel
from gensim.parsing.preprocessing import preprocess_string, strip_tags, strip_punctuation, strip_multiple_whitespaces, strip_numeric, remove_stopwords, strip_short

import nltk
nltk.download('punkt', quiet=True)
nltk.download('stopwords', quiet=True)
from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

We install and upgrade the necessary libraries, such as SciPy, Gensim, NLTK, and visualization tools, to ensure compatibility. We then import all required modules for preprocessing, modeling, and analysis. We also download NLTK resources to tokenize and handle stopwords efficiently, thereby setting up the environment for our NLP pipeline.
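
After restarting the runtime, it can help to confirm that the pinned versions are the ones actually loaded. The following is a minimal sketch using only the standard library; the `EXPECTED` mapping and the `check_versions` helper are illustrative names, not part of the original tutorial, and the pins simply mirror the install cell.

```python
from importlib import metadata

# Pins copied from the install cell; None means "any installed version is fine".
EXPECTED = {"scipy": "1.11.4", "gensim": "4.3.2", "nltk": None}


def check_versions(expected=EXPECTED):
    """Return {package: (installed_version_or_None, matches_pin)}."""
    report = {}
    for name, pin in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed in this environment
        ok = installed is not None and (pin is None or installed == pin)
        report[name] = (installed, ok)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_versions().items():
        status = "ok" if ok else "check this package"
        print(f"{name}: {installed or 'not installed'} ({status})")
```

If a package is reported as mismatched, rerunning the install cell and restarting the runtime again is usually the first thing to try.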

class AdvancedGensimPipeline:
    def __init__(self):
        self.dictionary = None
        self.corpus = None
        self.lda_model = None
        self.word2vec_model = None
        self.tfidf_model = None
        self.similarity_index = None
        self.processed_docs = None

    def create_sample_corpus(self):
        """Create a diverse sample corpus for demonstration"""
        documents = [
            "Data science combines statistics, programming, and domain expertise to extract insights",
            "Big data analytics helps organizations make data-driven decisions at scale",
            "Cloud computing provides scalable infrastructure for modern applications and services",
            "Cybersecurity protects digital systems from threats and unauthorized access attempts",
            "Software engineering practices ensure reliable and maintainable code development",
            "Database management systems store and organize large amounts of structured information",
            "Python programming language is widely used for data analysis and machine learning",
            "Statistical modeling helps identify patterns and relationships in complex datasets",
            "Cross-validation techniques ensure robust model performance evaluation and selection",
            "Recommendation systems suggest relevant items based on user preferences and behavior",
            "Text mining extracts valuable insights from unstructured textual data sources",
            "Image classification assigns predefined categories to visual content automatically",
            "Reinforcement learning trains agents through interaction with dynamic environments"
        ]
        return documents

    def preprocess_documents(self, documents):
        """Advanced document preprocessing using Gensim filters"""
        print("Preprocessing documents...")
        CUSTOM_FILTERS = [
            strip_tags, strip_punctuation, strip_multiple_whitespaces,
            strip_numeric, remove_stopwords, strip_short, lambda x: x.lower()
        ]
        # Build the NLTK stopword set once instead of once per document
        stop_words = set(stopwords.words('english'))
        processed_docs = []
        for doc in documents:
            processed = preprocess_string(doc, CUSTOM_FILTERS)
            # Second pass: drop NLTK stopwords and tokens shorter than 3 characters
            processed = [word for word in processed if word not in stop_words and len(word) > 2]
            processed_docs.append(processed)
        self.processed_docs = processed_docs
        print(f"Processed {len(processed_docs)} documents")
        return processed_docs

    def create_dictionary_and_corpus(self):
        """Create Gensim dictionary and corpus"""
        print("Creating dictionary and corpus...")
        self.dictionary = corpora.Dictionary(self.processed_docs)
        # Keep tokens that appear in at least 2 documents and in at most 80% of them
        self.dictionary.filter_extremes(no_below=2, no_above=0.8)
        self.corpus = [self.dictionary.doc2bow(doc) for doc in self.processed_docs]
        print(f"Dictionary size: {len(self.dictionary)}")
        print(f"Corpus size: {len(self.corpus)}")

    def train_word2vec_model(self):
        """Train Word2Vec model for word embeddings"""
        print("Training Word2Vec model...")
        self.word2vec_model = Word2Vec(
            sentences=self.processed_docs,
            vector_size=100,
            window=5,
            min_count=2,
            workers=4,
            epochs=50
        )
        print("Word2Vec model trained successfully")

    def analyze_word_similarities(self):
        """Analyze word similarities using Word2Vec"""
        print("\n=== Word2Vec Similarity Analysis ===")
        test_words = ['machine', 'data', 'learning', 'computer']
        for word in test_words:
            # Skip words that fell below min_count and are not in the vocabulary
            if word in self.word2vec_model.wv:
                similar_words = self.word2vec_model.wv.most_similar(word, topn=3)
                print(f"Words similar to '{word}': {similar_words}")
        try:
            if all(w in self.word2vec_model.wv for w in ['machine', 'computer', 'data']):
                analogy = self.word2vec_model.wv.most_similar(
                    positive=['computer', 'data'],
                    negative=['machine'],
                    topn=1
                )
                print(f"Analogy result: {analogy}")
        except Exception:
            print("Not enough vocabulary for complex analogies")

    def train_lda_model(self, num_topics=5):
        """Train LDA topic model"""
        print(f"Training LDA model with {num_topics} topics...")
        self.lda_model = LdaModel(
            corpus=self.corpus,
            id2word=self.dictionary,
            num_topics=num_topics,
            random_state=42,
            passes=10,
            alpha="auto",
            per_word_topics=True,
            eval_every=None
        )
        print("LDA model trained successfully")

    def evaluate_topic_coherence(self):
        """Evaluate topic model coherence"""
        print("Evaluating topic coherence...")
        coherence_model = CoherenceModel(
            model=self.lda_model,
            texts=self.processed_docs,
            dictionary=self.dictionary,
            coherence="c_v"
        )
        coherence_score = coherence_model.get_coherence()
        print(f"Topic Coherence Score: {coherence_score:.4f}")
        return coherence_score

    def display_topics(self):
        """Display discovered topics"""
        print("\n=== Discovered Topics ===")
        topics = self.lda_model.print_topics(num_words=8)
        for idx, topic in enumerate(topics):
            print(f"Topic {idx}: {topic[1]}")

    def create_tfidf_model(self):
        """Create TF-IDF model for document similarity"""
        print("Creating TF-IDF model...")
        self.tfidf_model = TfidfModel(self.corpus)
        corpus_tfidf = self.tfidf_model[self.corpus]
        self.similarity_index = similarities.MatrixSimilarity(corpus_tfidf)
        print("TF-IDF model and similarity index created")

    def find_similar_documents(self, query_doc_idx=0):
        """Find documents similar to a query document"""
        print("\n=== Document Similarity Analysis ===")
        query_doc_tfidf = self.tfidf_model[self.corpus[query_doc_idx]]
        similarities_scores = self.similarity_index[query_doc_tfidf]
        sorted_similarities = sorted(enumerate(similarities_scores), key=lambda x: x[1], reverse=True)
        print(f"Documents most similar to document {query_doc_idx}:")
        for doc_idx, similarity in sorted_similarities[:5]:
            print(f"Doc {doc_idx}: {similarity:.4f}")

    def visualize_topics(self):
        """Create visualizations for topic analysis"""
        print("Creating topic visualizations...")
        # Dense document-by-topic probability matrix for the heatmap
        doc_topic_matrix = []
        for doc_bow in self.corpus:
            doc_topics = dict(self.lda_model.get_document_topics(doc_bow, minimum_probability=0))
            topic_vec = [doc_topics.get(i, 0) for i in range(self.lda_model.num_topics)]
            doc_topic_matrix.append(topic_vec)
        doc_topic_df = pd.DataFrame(doc_topic_matrix, columns=[f'Topic_{i}' for i in range(self.lda_model.num_topics)])
        plt.figure(figsize=(12, 8))
        sns.heatmap(doc_topic_df.T, annot=True, cmap='Blues', fmt=".2f")
        plt.title('Document-Topic Distribution Heatmap')
        plt.xlabel('Documents')
        plt.ylabel('Topics')
        plt.tight_layout()
        plt.show()
        # One word cloud per topic, up to six panels
        fig, axes = plt.subplots(2, 3, figsize=(15, 10))
        axes = axes.flatten()
        for topic_id in range(min(6, self.lda_model.num_topics)):
            topic_words = dict(self.lda_model.show_topic(topic_id, topn=20))
            wordcloud = WordCloud(
                width=300, height=200,
                background_color="white",
                colormap='viridis'
            ).generate_from_frequencies(topic_words)
            axes[topic_id].imshow(wordcloud, interpolation='bilinear')
            axes[topic_id].set_title(f'Topic {topic_id}')
            axes[topic_id].axis('off')
        # Hide any unused panels
        for i in range(self.lda_model.num_topics, 6):
            axes[i].axis('off')
        plt.tight_layout()
        plt.show()

    def advanced_topic_analysis(self):
        """Perform advanced topic analysis"""
        print("\n=== Advanced Topic Analysis ===")
        topic_distributions = []
        for i, doc_bow in enumerate(self.corpus):
            doc_topics = self.lda_model.get_document_topics(doc_bow)
            # Dominant topic is the (topic_id, probability) pair with the highest probability
            dominant_topic = max(doc_topics, key=lambda x: x[1]) if doc_topics else (0, 0)
            topic_distributions.append({
                'doc_id': i,
                'dominant_topic': dominant_topic[0],
                'topic_probability': dominant_topic[1]
            })
        topic_df = pd.DataFrame(topic_distributions)
        plt.figure(figsize=(10, 6))
        topic_counts = topic_df['dominant_topic'].value_counts().sort_index()
        plt.bar(range(len(topic_counts)), topic_counts.values)
        plt.xlabel('Topic ID')
        plt.ylabel('Number of Documents')
        plt.title('Distribution of Dominant Topics Across Documents')
        plt.xticks(range(len(topic_counts)), [f'Topic {i}' for i in topic_counts.index])
        plt.show()
        return topic_df

    def document_classification_demo(self, new_document):
        """Classify a new document using trained models"""
        print("\n=== Document Classification Demo ===")
        print(f"Classifying: '{new_document[:50]}...'")
        processed_new = preprocess_string(new_document, [
            strip_tags, strip_punctuation, strip_multiple_whitespaces,
            strip_numeric, remove_stopwords, strip_short, lambda x: x.lower()
        ])
        new_doc_bow = self.dictionary.doc2bow(processed_new)
        doc_topics = self.lda_model.get_document_topics(new_doc_bow)
        print("Topic probabilities:")
        for topic_id, prob in doc_topics:
            print(f"  Topic {topic_id}: {prob:.4f}")
        new_doc_tfidf = self.tfidf_model[new_doc_bow]
        similarities_scores = self.similarity_index[new_doc_tfidf]
        most_similar = np.argmax(similarities_scores)
        print(f"Most similar document: {most_similar} (similarity: {similarities_scores[most_similar]:.4f})")
        return doc_topics, most_similar

    def run_complete_pipeline(self):
        """Execute the complete NLP pipeline"""
        print("=== Advanced Gensim NLP Pipeline ===\n")
        raw_documents = self.create_sample_corpus()
        self.preprocess_documents(raw_documents)
        self.create_dictionary_and_corpus()
        self.train_word2vec_model()
        self.train_lda_model(num_topics=5)
        self.create_tfidf_model()
        self.analyze_word_similarities()
        coherence_score = self.evaluate_topic_coherence()
        self.display_topics()
        self.visualize_topics()
        topic_df = self.advanced_topic_analysis()
        self.find_similar_documents(query_doc_idx=0)
        new_doc = "Deep neural networks are powerful machine learning models for pattern recognition"
        self.document_classification_demo(new_doc)
        return {
            'coherence_score': coherence_score,
            'topic_distributions': topic_df,
            'models': {
                'lda': self.lda_model,
                'word2vec': self.word2vec_model,
                'tfidf': self.tfidf_model
            }
        }

We define the AdvancedGensimPipeline class as a modular framework to handle every stage of text analysis in one place. It starts by creating a sample corpus, preprocessing it, and then building the dictionary and bag-of-words corpus. We train Word2Vec for embeddings, LDA for topic modeling, and TF-IDF for similarity, followed by visualization, coherence evaluation, and classification of new documents. This way, we bring together the complete NLP workflow, from raw text to insights, into a single reusable pipeline.
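
To make the dictionary step more concrete, here is a small pure-Python sketch of what document-frequency filtering followed by a bag-of-words conversion does. It is a simplified stand-in for `Dictionary.filter_extremes` and `doc2bow`, not Gensim's implementation: the `build_vocab` helper, the alphabetical id assignment, and the toy documents are assumptions made for illustration.

```python
from collections import Counter


def build_vocab(tokenized_docs, no_below=2, no_above=0.8):
    """Keep tokens found in at least `no_below` docs and in at most `no_above` of all docs."""
    doc_freq = Counter()
    for doc in tokenized_docs:
        doc_freq.update(set(doc))  # count each token once per document
    max_docs = no_above * len(tokenized_docs)
    kept = sorted(t for t, df in doc_freq.items() if no_below <= df <= max_docs)
    # Ids are assigned alphabetically here; Gensim assigns ids differently.
    return {token: idx for idx, token in enumerate(kept)}


def doc2bow(doc, vocab):
    """Convert one tokenized document to sorted (token_id, count) pairs."""
    counts = Counter(vocab[t] for t in doc if t in vocab)
    return sorted(counts.items())


# Toy documents, not the tutorial corpus
docs = [
    ["data", "science", "statistics"],
    ["data", "analytics", "scale"],
    ["cloud", "computing", "scale"],
    ["data", "cloud", "data"],
]
vocab = build_vocab(docs)
corpus = [doc2bow(d, vocab) for d in docs]
print(vocab)   # tokens that survived the frequency filter
print(corpus)  # one list of (token_id, count) pairs per document
```

On a corpus as small as the sample one, a `no_below=2` threshold can remove most tokens, so it is worth checking the printed dictionary size before interpreting topics or similarity scores.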

def compare_topic_models(pipeline, topic_range=(3, 5, 7, 10)):
    """Train one LDA model per topic count and compare coherence and perplexity"""
    print("\n=== Topic Model Comparison ===")
    coherence_scores = []
    perplexity_scores = []
    for num_topics in topic_range:
        lda_temp = LdaModel(
            corpus=pipeline.corpus,
            id2word=pipeline.dictionary,
            num_topics=num_topics,
            random_state=42,
            passes=10,
            alpha="auto"
        )
        coherence_model = CoherenceModel(
            model=lda_temp,
            texts=pipeline.processed_docs,
            dictionary=pipeline.dictionary,
            coherence="c_v"
        )
        coherence = coherence_model.get_coherence()
        coherence_scores.append(coherence)
        # log_perplexity returns a per-word likelihood bound (closer to zero is better)
        perplexity = lda_temp.log_perplexity(pipeline.corpus)
        perplexity_scores.append(perplexity)
        print(f"Topics: {num_topics}, Coherence: {coherence:.4f}, Perplexity: {perplexity:.4f}")
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 5))
    ax1.plot(topic_range, coherence_scores, 'bo-')
    ax1.set_xlabel('Number of Topics')
    ax1.set_ylabel('Coherence Score')
    ax1.set_title('Model Coherence vs Number of Topics')
    ax1.grid(True)
    ax2.plot(topic_range, perplexity_scores, 'ro-')
    ax2.set_xlabel('Number of Topics')
    ax2.set_ylabel('Perplexity')
    ax2.set_title('Model Perplexity vs Number of Topics')
    ax2.grid(True)
    plt.tight_layout()
    plt.show()
    return coherence_scores, perplexity_scores

The compare_topic_models function lets us systematically test different numbers of topics for the LDA model and compare their performance. We calculate coherence scores (to check topic interpretability) and perplexity scores (to check model fit) for each topic count in the given range. Note that Gensim's log_perplexity returns a per-word likelihood bound rather than the perplexity itself, so values closer to zero indicate a better fit. The results are displayed as line plots, helping us visually identify the most balanced number of topics for our dataset.
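
Once the two score lists are returned, a simple way to act on them is to pick the topic count with the highest coherence and to convert each bound into a conventional perplexity. The sketch below assumes the bound is in base 2, which matches how Gensim reports its perplexity estimate in its log output; the helper names and the example numbers are hypothetical and are not results from this tutorial.

```python
def bound_to_perplexity(per_word_bound):
    """Convert a per-word log2 likelihood bound to perplexity (lower is better)."""
    return 2.0 ** (-per_word_bound)


def pick_num_topics(topic_range, coherence_scores):
    """Return the topic count with the highest coherence (first one wins ties)."""
    best_idx = max(range(len(topic_range)), key=lambda i: coherence_scores[i])
    return topic_range[best_idx]


# Hypothetical scores for illustration only
topic_range = [3, 5, 7, 10]
coherence_scores = [0.41, 0.47, 0.44, 0.39]
bounds = [-6.2, -6.5, -6.9, -7.4]

best = pick_num_topics(topic_range, coherence_scores)
print(f"Best topic count by coherence: {best}")
for k, b in zip(topic_range, bounds):
    print(f"{k} topics: bound={b:.2f}, perplexity={bound_to_perplexity(b):.1f}")
```

Coherence alone is a heuristic; on a very small corpus the scores can vary noticeably between runs, so treating the chosen value as a starting point rather than a final answer seems prudent.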

def semantic_search_engine(pipeline, query, top_k=5):
    """Implement semantic search using trained models"""
    print(f"\n=== Semantic Search: '{query}' ===")
    processed_query = preprocess_string(query, [
        strip_tags, strip_punctuation, strip_multiple_whitespaces,
        strip_numeric, remove_stopwords, strip_short, lambda x: x.lower()
    ])
    query_bow = pipeline.dictionary.doc2bow(processed_query)
    query_tfidf = pipeline.tfidf_model[query_bow]
    similarities_scores = pipeline.similarity_index[query_tfidf]
    # Indices of the top_k highest-scoring documents, best first
    top_indices = np.argsort(similarities_scores)[::-1][:top_k]
    print("Top matching documents:")
    for i, idx in enumerate(top_indices):
        score = similarities_scores[idx]
        print(f"{i+1}. Document {idx} (Score: {score:.4f})")
        print(f"   Content: {' '.join(pipeline.processed_docs[idx][:10])}...")
    return top_indices, similarities_scores[top_indices]

The semantic_search_engine function adds a search layer to the pipeline by taking a query, preprocessing it, and converting it into bag-of-words and TF-IDF representations. It then compares the query against all documents using the similarity index and returns the top matches. This way, we can quickly retrieve the most relevant documents along with their similarity scores, making the pipeline useful for practical information retrieval and semantic search tasks.
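
The ranking step can be illustrated without Gensim. The sketch below is a simplified pure-Python version of TF-IDF weighting with cosine similarity, assuming a log2 inverse document frequency and unit-length vectors; the function names and the toy documents are made up for this example, and Gensim's `TfidfModel` and `MatrixSimilarity` may differ in detail.

```python
import math
from collections import Counter


def fit_idf(tokenized_docs):
    """Compute idf = log2(N / df) for every token in the corpus."""
    n_docs = len(tokenized_docs)
    doc_freq = Counter()
    for doc in tokenized_docs:
        doc_freq.update(set(doc))
    return {t: math.log2(n_docs / df) for t, df in doc_freq.items()}


def tfidf_vector(tokens, idf):
    """Unit-length TF-IDF vector as a dict; tokens outside the vocabulary are ignored."""
    counts = Counter(t for t in tokens if t in idf)
    weights = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()} if norm else {}


def search(query_tokens, doc_vectors, idf, top_k=3):
    """Rank documents by cosine similarity to the query, best first."""
    q = tfidf_vector(query_tokens, idf)
    scores = [sum(w * vec.get(t, 0.0) for t, w in q.items()) for vec in doc_vectors]
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:top_k]


# Toy documents, not the tutorial corpus
docs = [
    ["python", "data", "analysis"],
    ["cloud", "computing", "services"],
    ["data", "mining", "text"],
]
idf = fit_idf(docs)
doc_vectors = [tfidf_vector(d, idf) for d in docs]
print(search(["text", "mining"], doc_vectors, idf))
print(search(["quantum"], doc_vectors, idf))  # no known tokens: every score is 0
```

The second call shows a case worth guarding against in practice: when none of the query tokens are in the vocabulary, every score is zero and the returned order carries no information. Because this kind of search matches on shared tokens, it behaves as lexical retrieval rather than embedding-based semantic matching.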

if __name__ == "__main__":
    pipeline = AdvancedGensimPipeline()
    results = pipeline.run_complete_pipeline()

    print("\n" + "="*60)
    coherence_scores, perplexity_scores = compare_topic_models(pipeline)

    print("\n" + "="*60)
    search_results = semantic_search_engine(
        pipeline,
        "artificial intelligence neural networks deep learning"
    )

    print("\n" + "="*60)
    print("Pipeline completed successfully!")
    print(f"Final coherence score: {results['coherence_score']:.4f}")
    print(f"Vocabulary size: {len(pipeline.dictionary)}")
    print(f"Word2Vec model size: {pipeline.word2vec_model.wv.vector_size} dimensions")
    print("\nModels trained and ready for use!")
    print("Access models via: pipeline.lda_model, pipeline.word2vec_model, pipeline.tfidf_model")

This main block ties everything together into a complete, executable pipeline. We initialize the AdvancedGensimPipeline, run the full workflow, and then evaluate topic models with different numbers of topics. Next, we test the semantic search engine with a query about artificial intelligence and deep learning. Finally, it prints summary metrics, such as the coherence score, vocabulary size, and Word2Vec embedding dimensions, confirming that all models are trained and ready for further use.

In conclusion, we gain a powerful, modular workflow that covers the entire spectrum of text analysis, from cleaning and preprocessing raw documents to discovering hidden topics, visualizing results, comparing models, and performing semantic search. The inclusion of Word2Vec embeddings, TF-IDF similarity, and coherence evaluation makes the pipeline both flexible and robust, while the visualizations and classification demo make the results interpretable and actionable. This cohesive design allows learners, researchers, and practitioners to quickly adapt the framework for real-world applications, making it a useful foundation for advanced NLP experimentation and text analytics.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.