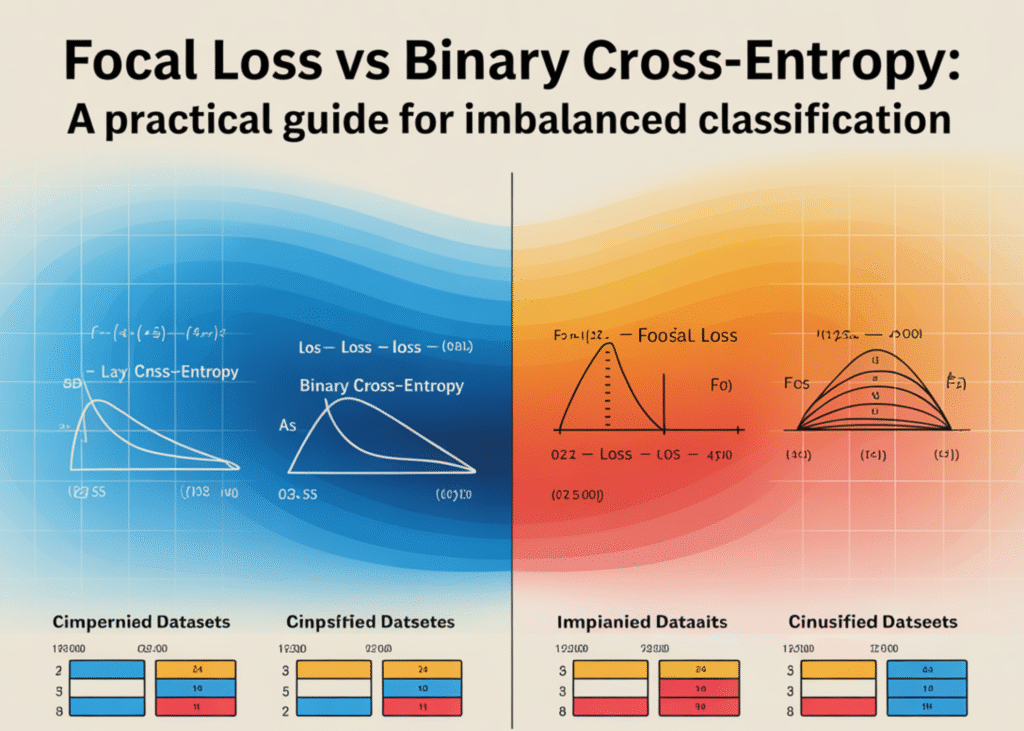

Binary cross-entropy (BCE) is the default loss function for binary classification, but it breaks down badly on imbalanced datasets. The reason is subtle but important: BCE weighs errors from both classes equally, even when one class is extremely rare.

Consider two predictions: a minority-class sample with true label 1 predicted at 0.3, and a majority-class sample with true label 0 predicted at 0.7. Both produce the same BCE value: −log(0.3). But should these two errors be treated equally? In an imbalanced dataset, definitely not: the error on the minority sample is far more costly.
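The equality between the two losses can be checked in a few lines. This is a minimal sketch using only the standard library; the helper name `bce` is introduced here for illustration and is not part of the tutorial's code.

```python
import math

def bce(p, y):
    """Binary cross-entropy for a single predicted probability p and true label y."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

# Minority sample: true label 1, predicted probability 0.3
minority_loss = bce(0.3, 1)
# Majority sample: true label 0, predicted probability 0.7
majority_loss = bce(0.7, 0)

# Both values equal -log(0.3) up to floating-point rounding
print(minority_loss, majority_loss)
```

BCE only looks at the probability assigned to the correct class, so it has no way of knowing which of the two mistakes comes from the rare class.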

This is exactly where Focal Loss comes in. It reduces the contribution of easy, confident predictions and amplifies the influence of difficult, minority-class examples. As a result, the model focuses less on the overwhelmingly easy majority class and more on the patterns that actually matter.

In this tutorial, we demonstrate this effect by training two neural networks with identical architectures on a dataset with a 99:1 imbalance ratio, one using BCE and the other using Focal Loss, and comparing their behavior, decision regions, and confusion matrices.

Installing the dependencies

    pip install numpy pandas matplotlib scikit-learn torch

Creating an Imbalanced Dataset

We create a synthetic binary classification dataset of 6,000 samples with a 99:1 imbalance using make_classification. This ensures that most samples belong to the majority class, making it an ideal setup to demonstrate why BCE struggles and how Focal Loss helps.

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Generate imbalanced dataset
    X, y = make_classification(
        n_samples=6000,
        n_features=2,
        n_redundant=0,
        n_clusters_per_class=1,
        weights=[0.99, 0.01],
        class_sep=1.5,
        random_state=42
    )

    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=42
    )

    # Convert to float32 tensors; labels get shape (N, 1) to match the model output
    X_train = torch.tensor(X_train, dtype=torch.float32)
    y_train = torch.tensor(y_train, dtype=torch.float32).unsqueeze(1)
    X_test = torch.tensor(X_test, dtype=torch.float32)
    y_test = torch.tensor(y_test, dtype=torch.float32).unsqueeze(1)

Creating the Neural Network

We define a simple neural network with two hidden layers to keep the experiment lightweight and focused on the loss functions. This small architecture is sufficient to learn the decision boundary in our 2D dataset while clearly highlighting the differences between BCE and Focal Loss.
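To get a sense of how small this network is, the sketch below counts its trainable parameters by hand. The layer widths (2, 16, 8, 1) are taken from the model definition in this section; the helper `count_linear_params` is a hypothetical name used only for this illustration.

```python
# Layer widths of the network in this section: 2 -> 16 -> 8 -> 1
layer_sizes = [2, 16, 8, 1]

def count_linear_params(sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))

total_params = count_linear_params(layer_sizes)
print(total_params)  # 193
```

With fewer than 200 parameters, differences between the two runs are more plausibly due to the loss function than to model capacity.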

    class SimpleNN(nn.Module):
        def __init__(self):
            super().__init__()
            self.layers = nn.Sequential(
                nn.Linear(2, 16),
                nn.ReLU(),
                nn.Linear(16, 8),
                nn.ReLU(),
                nn.Linear(8, 1),
                nn.Sigmoid()  # output is a probability in (0, 1)
            )

        def forward(self, x):
            return self.layers(x)

Focal Loss Implementation

This class implements the Focal Loss function, which modifies binary cross-entropy by down-weighting easy examples and focusing training on hard, misclassified samples. The gamma term controls how aggressively easy samples are suppressed. The alpha term scales the loss; in this implementation it is applied uniformly to every sample, whereas class-balanced variants use it to weight the two classes differently. Together, they help the model learn better on imbalanced datasets.
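To make the down-weighting concrete, the sketch below compares the per-sample BCE term with the focal term for a few values of pt, the probability assigned to the true class. It assumes the same defaults as this tutorial (alpha=0.25, gamma=2) and uses only the standard library; the function names `bce_term` and `focal_term` are illustrative, not part of the tutorial's code.

```python
import math

def bce_term(pt):
    """Per-sample BCE, written in terms of the true-class probability pt."""
    return -math.log(pt)

def focal_term(pt, alpha=0.25, gamma=2.0):
    """Per-sample focal loss: BCE scaled by alpha * (1 - pt) ** gamma."""
    return -alpha * (1.0 - pt) ** gamma * math.log(pt)

# Easy (pt=0.95), borderline (pt=0.6), and hard (pt=0.3) examples
for pt in (0.95, 0.6, 0.3):
    scale = focal_term(pt) / bce_term(pt)
    print(f"pt={pt:.2f}  BCE={bce_term(pt):.4f}  focal={focal_term(pt):.4f}  scale={scale:.6f}")
```

The scale factor is alpha * (1 - pt) ** gamma, so a confident correct prediction (pt=0.95) keeps about 0.000625 of its BCE loss, while a hard example (pt=0.3) keeps about 0.1225, roughly 196 times more.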

    class FocalLoss(nn.Module):
        def __init__(self, alpha=0.25, gamma=2):
            super().__init__()
            self.alpha = alpha
            self.gamma = gamma

        def forward(self, preds, targets):
            # Clamp probabilities to avoid log(0)
            eps = 1e-7
            preds = torch.clamp(preds, eps, 1 - eps)
            # pt is the probability the model assigns to the true class
            pt = torch.where(targets == 1, preds, 1 - preds)
            loss = -self.alpha * (1 - pt) ** self.gamma * torch.log(pt)
            return loss.mean()

Training the Model

We define a simple training loop that optimizes the model using the chosen loss function and evaluates accuracy on the test set. We then train two networks with identical architectures, one with standard BCE loss and the other with Focal Loss, allowing us to directly compare how each loss function performs on the same imbalanced dataset. The printed accuracies highlight the performance gap between BCE and Focal Loss.

Although BCE shows a very high accuracy (98%), this is misleading because the dataset is heavily imbalanced: predicting almost everything as the majority class still yields high accuracy. Focal Loss, on the other hand, improves minority-class detection, which is why its slightly higher accuracy (99%) is far more meaningful in this context.
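The following sketch shows why accuracy alone is misleading here. It uses a hypothetical 1,000-sample test set with an exact 99:1 split (not the tutorial's actual data) and a degenerate classifier that always predicts the majority class.

```python
# Hypothetical test set: 990 majority (0) labels and 10 minority (1) labels
y_true = [0] * 990 + [1] * 10
# A degenerate classifier that always predicts the majority class
y_pred = [0] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
true_pos = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
minority_recall = true_pos / sum(y_true)

print(accuracy, minority_recall)  # 0.99 0.0
```

A model that never detects a single minority sample still scores 99% accuracy, which is why the confusion matrix is the more informative view for this comparison.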

    def train(model, loss_fn, lr=0.01, epochs=30):
        opt = optim.Adam(model.parameters(), lr=lr)

        # Full-batch training: one gradient step per epoch
        for _ in range(epochs):
            preds = model(X_train)
            loss = loss_fn(preds, y_train)
            opt.zero_grad()
            loss.backward()
            opt.step()

        # Evaluate on the test set with a 0.5 threshold
        with torch.no_grad():
            test_preds = model(X_test)
            test_acc = ((test_preds > 0.5).float() == y_test).float().mean().item()

        return test_acc, test_preds.squeeze().detach().numpy()

    # Models
    model_bce = SimpleNN()
    model_focal = SimpleNN()

    acc_bce, preds_bce = train(model_bce, nn.BCELoss())
    acc_focal, preds_focal = train(model_focal, FocalLoss(alpha=0.25, gamma=2))

    print("Test Accuracy (BCE):", acc_bce)
    print("Test Accuracy (Focal Loss):", acc_focal)

Plotting the Decision Boundary

The BCE model produces an almost flat decision boundary that predicts only the majority class, completely ignoring the minority samples. This happens because, in an imbalanced dataset, BCE is dominated by the majority-class examples and learns to classify nearly everything as that class. In contrast, the Focal Loss model shows a much more refined and meaningful decision boundary, successfully identifying more minority-class regions and capturing patterns BCE fails to learn.

    def plot_decision_boundary(model, title):
        # Create a grid covering the feature space
        x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
        y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
        xx, yy = np.meshgrid(
            np.linspace(x_min, x_max, 300),
            np.linspace(y_min, y_max, 300)
        )
        grid = torch.tensor(np.c_[xx.ravel(), yy.ravel()], dtype=torch.float32)

        # Predict a probability for every grid point
        with torch.no_grad():
            Z = model(grid).reshape(xx.shape).numpy()

        # Plot the two predicted regions (below and above 0.5) with the data on top
        plt.contourf(xx, yy, Z, levels=[0, 0.5, 1], alpha=0.4)
        plt.scatter(X[:, 0], X[:, 1], c=y, cmap='coolwarm', s=10)
        plt.title(title)
        plt.show()

    plot_decision_boundary(model_bce, "Decision Boundary -- BCE Loss")
    plot_decision_boundary(model_focal, "Decision Boundary -- Focal Loss")

Plotting the Confusion Matrix

In the BCE model's confusion matrix, the network correctly identifies only 1 minority-class sample while misclassifying 27 of them as the majority class. This shows that BCE collapses toward predicting almost everything as the majority class because of the imbalance. In contrast, the Focal Loss model correctly predicts 14 minority samples and reduces misclassifications from 27 down to 14. This demonstrates how Focal Loss places more emphasis on hard, minority-class examples, enabling the model to learn a decision boundary that actually captures the rare class instead of ignoring it.
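The counts quoted above translate directly into minority-class recall. The sketch below uses only the numbers reported in this section (1 correct and 27 missed for BCE, 14 correct and 14 missed for Focal Loss); the `recall` helper is an illustrative name, not part of the tutorial's code.

```python
def recall(true_pos, false_neg):
    """Fraction of actual minority samples that the model detected."""
    return true_pos / (true_pos + false_neg)

bce_recall = recall(true_pos=1, false_neg=27)
focal_recall = recall(true_pos=14, false_neg=14)

print(f"BCE recall:   {bce_recall:.3f}")
print(f"Focal recall: {focal_recall:.3f}")
```

On these counts, minority recall rises from about 3.6% with BCE to 50% with Focal Loss, even though overall accuracy changes by only about one percentage point.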

    from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay

    def plot_conf_matrix(y_true, y_pred, title):
        cm = confusion_matrix(y_true, y_pred)
        disp = ConfusionMatrixDisplay(confusion_matrix=cm)
        disp.plot(cmap="Blues", values_format="d")
        plt.title(title)
        plt.show()

    # Convert torch tensors to flat numpy label arrays
    y_test_np = y_test.numpy().astype(int).ravel()
    preds_bce_label = (preds_bce > 0.5).astype(int)
    preds_focal_label = (preds_focal > 0.5).astype(int)

    plot_conf_matrix(y_test_np, preds_bce_label, "Confusion Matrix -- BCE Loss")
    plot_conf_matrix(y_test_np, preds_focal_label, "Confusion Matrix -- Focal Loss")

I am a Civil Engineering Graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.