On the earth of Massive Language Fashions (LLMs), velocity is the one function that issues as soon as accuracy is solved. For a human, ready 1 second for a search result’s positive. For an AI agent performing 10 sequential searches to resolve a posh activity, a 1-second delay per search creates a 10-second lag. This latency kills the person expertise.

Exa, the search engine startup previously generally known as Metaphor, simply launched Exa Immediate. It’s a search mannequin designed to supply the world’s internet information to AI brokers in beneath 200ms. For software program engineers and information scientists constructing Retrieval-Augmented Technology (RAG) pipelines, this removes the most important bottleneck in agentic workflows.

Why Latency is the Enemy of RAG

If you construct a RAG utility, your system follows a loop: the person asks a query, your system searches the net for context, and the LLM processes that context. If the search step takes 700ms to 1000ms, the full ‘time to first token’ turns into sluggish.

Exa Immediate delivers outcomes with a latency between 100ms and 200ms. In assessments performed from the us-west-1 (northern california) area, the community latency was roughly 50ms. This velocity permits brokers to carry out a number of searches in a single ‘thought’ course of with out the person feeling a delay.

No Extra ‘Wrapping’ Google

Most search APIs obtainable right now are ‘wrappers.’ They ship a question to a conventional search engine like Google or Bing, scrape the outcomes, and ship them again to you. This provides layers of overhead.

Exa Immediate is totally different. It’s constructed on a proprietary, end-to-end neural search and retrieval stack. As a substitute of matching key phrases, Exa makes use of embeddings and transformers to grasp the that means of a question. This neural method ensures the outcomes are related to the AI’s intent, not simply the precise phrases used. By proudly owning all the stack from the crawler to the inference engine, Exa can optimize for velocity in ways in which ‘wrapper’ APIs can’t.

Benchmarking the Pace

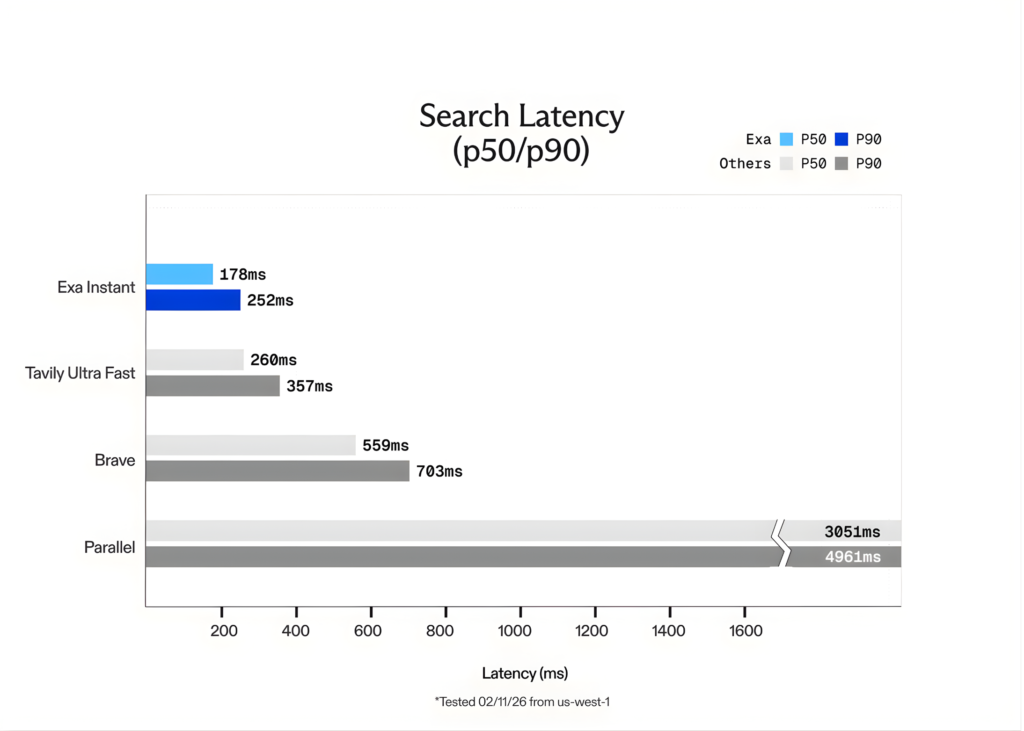

The Exa staff benchmarked Exa Immediate in opposition to different well-liked choices like Tavily Extremely Quick and Courageous. To make sure the assessments had been truthful and averted ‘cached’ outcomes, the staff used the SealQA question dataset. Additionally they added random phrases generated by GPT-5 to every question to drive the engine to carry out a contemporary search each time.

The outcomes confirmed that Exa Immediate is as much as 15x quicker than rivals. Whereas Exa presents different fashions like Exa Quick and Exa Auto for higher-quality reasoning, Exa Immediate is the clear selection for real-time purposes the place each millisecond counts.

Pricing and Developer Integration

The transition to Exa Immediate is straightforward. The API is accessible via the dashboard.exa.ai platform.

- Price: Exa Immediate is priced at $5 per 1,000 requests.

- Capability: It searches the identical huge index of the net as Exa’s extra highly effective fashions.

- Accuracy: Whereas designed for velocity, it maintains excessive relevance. For specialised entity searches, Exa’s Websets product stays the gold commonplace, proving to be 20x extra right than Google for advanced queries.

The API returns clear content material prepared for LLMs, eradicating the necessity for builders to jot down customized scraping or HTML cleansing code.

Key Takeaways

- Sub-200ms Latency for Actual-Time Brokers: Exa Immediate is optimized for ‘agentic’ workflows the place velocity is a bottleneck. By delivering ends in beneath 200ms (and community latency as little as 50ms), it permits AI brokers to carry out multi-step reasoning and parallel searches with out the lag related to conventional search engines like google and yahoo.

- Proprietary Neural Stack vs. ‘Wrappers‘: In contrast to many search APIs that merely ‘wrap’ Google or Bing (including 700ms+ of overhead), Exa Immediate is constructed on a proprietary, end-to-end neural search engine. It makes use of a customized transformer-based structure to index and retrieve internet information, providing as much as 15x quicker efficiency than present alternate options like Tavily or Courageous.

- Price-Environment friendly Scaling: The mannequin is designed to make search a ‘primitive’ quite than an costly luxurious. It’s priced at $5 per 1,000 requests, permitting builders to combine real-time internet lookups at each step of an agent’s thought course of with out breaking the price range.

- Semantic Intent over Key phrases: Exa Immediate leverages embeddings to prioritize the ‘that means’ of a question quite than precise phrase matches. That is significantly efficient for RAG (Retrieval-Augmented Technology) purposes, the place discovering ‘link-worthy’ content material that matches an LLM’s context is extra priceless than easy key phrase hits.

- Optimized for LLM Consumption: The API gives extra than simply URLs; it presents clear, parsed HTML, Markdown, and token-efficient highlights. This reduces the necessity for customized scraping scripts and minimizes the variety of tokens the LLM must course of, additional rushing up all the pipeline.

Take a look at the Technical details. Additionally, be at liberty to comply with us on Twitter and don’t neglect to affix our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.