In this tutorial, we present a complete, advanced implementation of the Notte AI Agent, integrating the Gemini API to power reasoning and automation. By combining Notte's browser automation capabilities with structured outputs via Pydantic models, it shows how an AI web agent can research products, monitor social media, analyze markets, scan job opportunities, and more. The tutorial is designed as a practical, hands-on guide, featuring modular functions, demos, and workflows that show how developers can use AI-driven automation for real-world tasks such as e-commerce research, competitive intelligence, and content strategy. Check out the FULL CODES here.

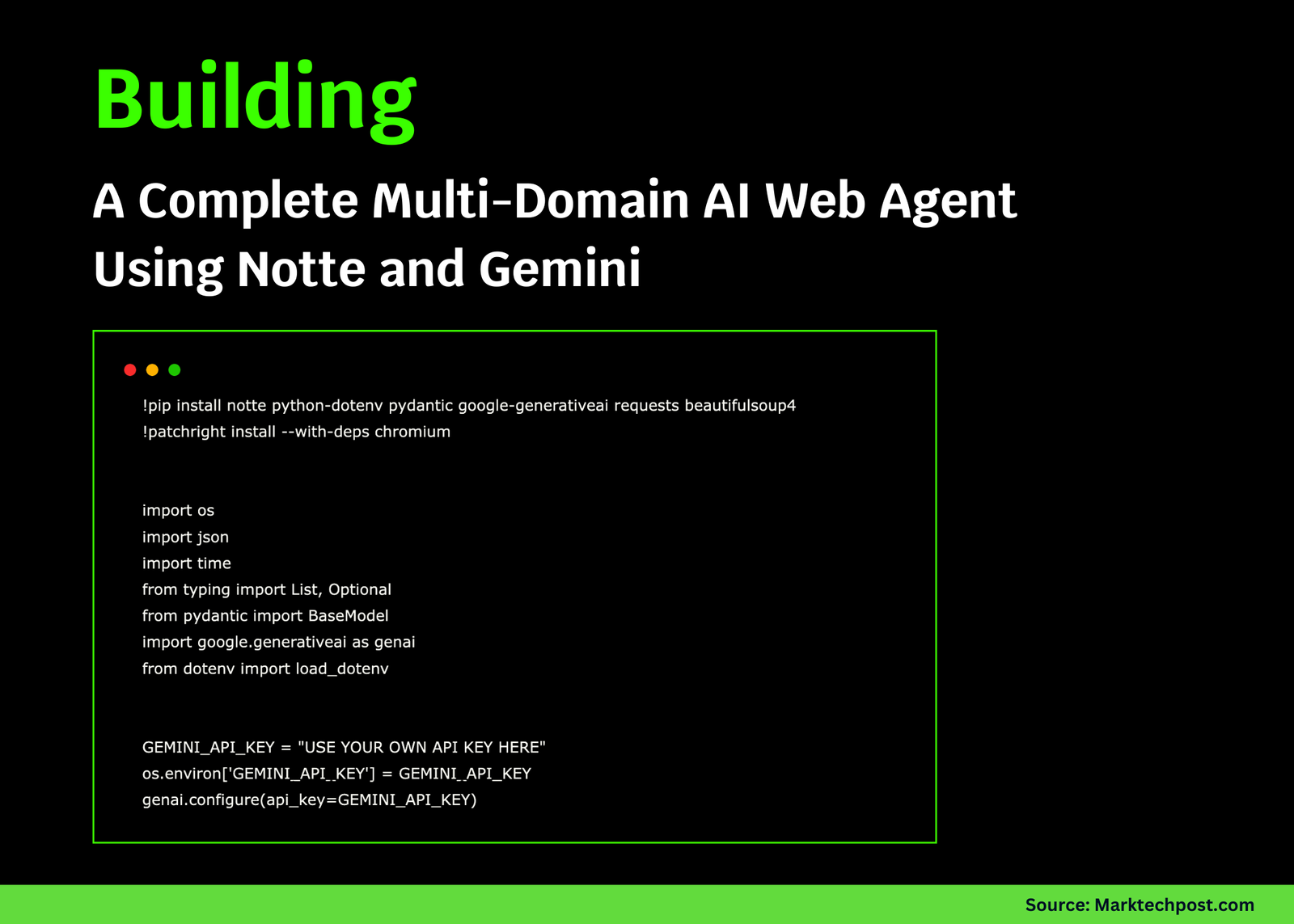

```python
# Colab/Jupyter shell commands: install dependencies and the Chromium build used by Notte
!pip install notte python-dotenv pydantic google-generativeai requests beautifulsoup4
!patchright install --with-deps chromium

import os
import json
import time
from typing import List, Optional

from pydantic import BaseModel
import google.generativeai as genai
from dotenv import load_dotenv

# Replace the placeholder with your own key (or load it from the environment)
GEMINI_API_KEY = "USE YOUR OWN API KEY HERE"
os.environ['GEMINI_API_KEY'] = GEMINI_API_KEY
genai.configure(api_key=GEMINI_API_KEY)

import notte
```

We start by installing all the required dependencies, including Notte, Gemini, and supporting libraries, and then configure our Gemini API key for authentication. With the environment set up, we import Notte so we can start building and running our AI web agent.
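Hardcoding the key works in a notebook, but it is easy to leak when the notebook is shared. The setup cell imports `load_dotenv` without ever calling it. The sketch below assumes you would rather keep the key in an environment variable, or in a `.env` file loaded with `load_dotenv()` before this runs, and shows one way to read and sanity-check it. The helper name `get_gemini_key` and the placeholder check are our own illustration. They are not part of Notte or the Gemini SDK.

```python
import os

# Placeholder string used in the setup cell above
PLACEHOLDER = "USE YOUR OWN API KEY HERE"


def get_gemini_key(env=None):
    """Hypothetical helper: return GEMINI_API_KEY from the environment.

    Raises RuntimeError if the key is missing, blank, or still the placeholder.
    """
    env = os.environ if env is None else env
    key = env.get("GEMINI_API_KEY", "").strip()
    if not key or key == PLACEHOLDER:
        raise RuntimeError("GEMINI_API_KEY is not set; export it or add it to a .env file")
    return key
```

In the notebook, you would call `load_dotenv()` first and then write `GEMINI_API_KEY = get_gemini_key()` in place of the hardcoded string.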

```python
class ProductInfo(BaseModel):
    name: str
    price: str
    rating: Optional[float]
    availability: str
    description: str


class NewsArticle(BaseModel):
    title: str
    summary: str
    url: str
    date: str
    source: str


class SocialMediaPost(BaseModel):
    content: str
    author: str
    likes: int
    timestamp: str
    platform: str


class SearchResult(BaseModel):
    query: str
    results: List[dict]
    total_found: int
```

We define structured Pydantic models that let us capture and validate data consistently. ProductInfo, NewsArticle, SocialMediaPost, and SearchResult give the agent a fixed schema for products, news articles, social media posts, and search results. Output that does not match the schema is rejected instead of passed along.
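Here is what that validation buys us in practice. The standalone check below assumes Pydantic is installed and redefines `SocialMediaPost` so the snippet runs on its own. Pydantic coerces a numeric string such as `"42"` into the `int` field `likes`, and it rejects a value like `"lots"` with a `ValidationError`. Malformed agent output therefore fails loudly instead of flowing silently into later steps. The `parse_post` helper is a hypothetical name used only for this illustration.

```python
from pydantic import BaseModel, ValidationError


class SocialMediaPost(BaseModel):
    content: str
    author: str
    likes: int
    timestamp: str
    platform: str


def parse_post(raw: dict):
    """Return a validated SocialMediaPost, or None if the raw data does not fit the schema."""
    try:
        return SocialMediaPost(**raw)
    except ValidationError as err:
        # err.errors() lists one entry per failing field
        print(f"Rejected post: {len(err.errors())} error(s)")
        return None
```

A record with `"likes": "42"` comes back with `likes == 42` as an integer. A record with `"likes": "lots"`, or one missing required fields, comes back as `None`.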

```python
class AdvancedNotteAgent:
    def __init__(self, headless=True, max_steps=20):
        self.headless = headless
        self.max_steps = max_steps
        self.session = None
        self.agent = None

    def __enter__(self):
        # Open a browser session and attach a Gemini-backed reasoning agent to it
        self.session = notte.Session(headless=self.headless)
        self.session.__enter__()
        self.agent = notte.Agent(
            session=self.session,
            reasoning_model="gemini/gemini-2.5-flash",
            max_steps=self.max_steps
        )
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        # Always close the browser session, even if a task raised
        if self.session:
            self.session.__exit__(exc_type, exc_val, exc_tb)

    def research_product(self, product_name: str, website: str = "amazon.com") -> ProductInfo:
        """Research a product and extract structured information"""
        task = f"Go to {website}, search for '{product_name}', click on the first relevant product, and extract detailed product information including name, price, rating, availability, and description."
        response = self.agent.run(
            task=task,
            response_format=ProductInfo,
            url=f"https://{website}"
        )
        return response.answer

    def news_aggregator(self, topic: str, num_articles: int = 3) -> List[NewsArticle]:
        """Aggregate news articles on a specific topic"""
        task = f"Search for recent news about '{topic}', find {num_articles} relevant articles, and extract title, summary, URL, date, and source for each."
        response = self.agent.run(
            task=task,
            url="https://news.google.com",
            response_format=List[NewsArticle]
        )
        return response.answer

    def social_media_monitor(self, hashtag: str, platform: str = "twitter") -> List[SocialMediaPost]:
        """Monitor social media for specific hashtags"""
        if platform.lower() == "twitter":
            url = "https://twitter.com"
        elif platform.lower() == "reddit":
            url = "https://reddit.com"
        else:
            url = f"https://{platform}.com"
        task = f"Go to {platform}, search for posts with hashtag '{hashtag}', and extract content, author, engagement metrics, and timestamps from the top 5 posts."
        response = self.agent.run(
            task=task,
            url=url,
            response_format=List[SocialMediaPost]
        )
        return response.answer

    def competitive_analysis(self, company: str, competitors: List[str]) -> dict:
        """Perform competitive analysis by gathering pricing and feature data"""
        results = {}
        for competitor in [company] + competitors:
            task = f"Go to {competitor}'s website, find their pricing page or main product page, and extract key features, pricing tiers, and unique selling points."
            try:
                response = self.agent.run(
                    task=task,
                    url=f"https://{competitor}.com"
                )
                results[competitor] = response.answer
                time.sleep(2)  # pause between sites
            except Exception as e:
                results[competitor] = f"Error: {str(e)}"
        return results

    def job_market_scanner(self, job_title: str, location: str = "remote") -> str:
        """Scan the job market for opportunities"""
        task = f"Search for '{job_title}' jobs in '{location}', extract job titles, companies, salary ranges, and application URLs from the first 10 results."
        # No response_format is passed, so the answer is the agent's free-text summary
        response = self.agent.run(
            task=task,
            url="https://indeed.com"
        )
        return response.answer

    def price_comparison(self, product: str, sites: List[str]) -> dict:
        """Compare prices across multiple websites"""
        price_data = {}
        for site in sites:
            task = f"Search for '{product}' on this website and find the best price, including any discounts or special offers."
            try:
                response = self.agent.run(
                    task=task,
                    url=f"https://{site}"
                )
                price_data[site] = response.answer
                time.sleep(1)  # pause between sites
            except Exception as e:
                price_data[site] = f"Error: {str(e)}"
        return price_data

    def content_research(self, topic: str, content_type: str = "blog") -> dict:
        """Research content ideas and trending topics"""
        if content_type == "blog":
            url = "https://medium.com"
            task = f"Search for '{topic}' articles, analyze trending content, and identify popular themes, engagement patterns, and content gaps."
        elif content_type == "video":
            url = "https://youtube.com"
            task = f"Search for '{topic}' videos, analyze view counts, titles, and descriptions to identify trending formats and popular angles."
        else:
            url = "https://google.com"
            task = f"Search for '{topic}' content across the web and analyze trending discussions and popular formats."
        response = self.agent.run(task=task, url=url)
        return {"topic": topic, "insights": response.answer, "platform": content_type}
```

We wrap Notte in a context-managed AdvancedNotteAgent. It sets up a headless browser session and a Gemini-powered reasoning model, so we can automate multi-step web tasks. We then add high-level methods for product research, news aggregation, social listening, competitive scans, job search, price comparison, and content research. Methods that pass a Pydantic `response_format` return structured objects; the others return the agent's free-text answer. This lets us script real-world web workflows while keeping the interface simple and consistent.
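`competitive_analysis` and `price_comparison` catch each failure once and move on. Live sites and model calls often fail intermittently, so a retry with exponential backoff is a common extension. The sketch below assumes you wrap a zero-argument callable such as `lambda: self.agent.run(task=task, url=url)`. `run_with_retry` and its parameters are hypothetical names and are not part of the Notte API. The `sleep` function can be swapped out so the logic can be checked without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retry(fn: Callable[[], T], attempts: int = 3, base_delay: float = 2.0,
                   sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on any exception with delays of base_delay, 2*base_delay, 4*base_delay, ..."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error to the caller
            sleep(base_delay * (2 ** attempt))
```

Inside `competitive_analysis`, for example, `response = run_with_retry(lambda: self.agent.run(task=task, url=f"https://{competitor}.com"))` could replace the single call. The existing `try/except` would still record a failure that persists after every retry.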

```python
def demo_ecommerce_research():
    """Demo: E-commerce product research and comparison"""
    print("🛍️ E-commerce Research Demo")
    print("=" * 50)
    with AdvancedNotteAgent(headless=True) as agent:
        product = agent.research_product("wireless earbuds", "amazon.com")
        print("Product Research Results:")
        print(f"Name: {product.name}")
        print(f"Price: {product.price}")
        print(f"Rating: {product.rating}")
        print(f"Availability: {product.availability}")
        print(f"Description: {product.description[:100]}...")

        print("\n💰 Price Comparison:")
        sites = ["amazon.com", "ebay.com", "walmart.com"]
        prices = agent.price_comparison("wireless earbuds", sites)
        for site, data in prices.items():
            print(f"{site}: {data}")


def demo_news_intelligence():
    """Demo: News aggregation and analysis"""
    print("📰 News Intelligence Demo")
    print("=" * 50)
    with AdvancedNotteAgent() as agent:
        articles = agent.news_aggregator("artificial intelligence", 3)
        for i, article in enumerate(articles, 1):
            print(f"\nArticle {i}:")
            print(f"Title: {article.title}")
            print(f"Source: {article.source}")
            print(f"Summary: {article.summary}")
            print(f"URL: {article.url}")


def demo_social_listening():
    """Demo: Social media monitoring and sentiment analysis"""
    print("👂 Social Media Listening Demo")
    print("=" * 50)
    with AdvancedNotteAgent() as agent:
        posts = agent.social_media_monitor("#AI", "reddit")
        for i, post in enumerate(posts, 1):
            print(f"\nPost {i}:")
            print(f"Author: {post.author}")
            print(f"Content: {post.content[:100]}...")
            print(f"Engagement: {post.likes} likes")
            print(f"Platform: {post.platform}")


def demo_market_intelligence():
    """Demo: Competitive analysis and market research"""
    print("📊 Market Intelligence Demo")
    print("=" * 50)
    with AdvancedNotteAgent() as agent:
        company = "openai"
        competitors = ["anthropic", "google"]
        analysis = agent.competitive_analysis(company, competitors)
        for comp, data in analysis.items():
            print(f"\n{comp.upper()}:")
            print(f"Analysis: {str(data)[:200]}...")


def demo_job_market_analysis():
    """Demo: Job market scanning and analysis"""
    print("💼 Job Market Analysis Demo")
    print("=" * 50)
    with AdvancedNotteAgent() as agent:
        jobs = agent.job_market_scanner("python developer", "san francisco")
        # No response_format is used for this task, so preview the answer instead of indexing into it
        print("Job opportunities found:")
        print(f"{str(jobs)[:500]}...")


def demo_content_strategy():
    """Demo: Content research and trend analysis"""
    print("✍️ Content Strategy Demo")
    print("=" * 50)
    with AdvancedNotteAgent() as agent:
        blog_research = agent.content_research("machine learning", "blog")
        video_research = agent.content_research("machine learning", "video")
        print("Blog Content Insights:")
        print(str(blog_research["insights"])[:300] + "...")
        print("\nVideo Content Insights:")
        print(str(video_research["insights"])[:300] + "...")
```

We run a series of demos that showcase real web automation end-to-end: researching products and comparing prices, aggregating fresh news, and monitoring social chatter. We also run competitive scans, analyze the job market, and track blog and video trends. Each task produces insights we can use right away, either as structured objects or as free-text summaries.
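Several demos preview results with patterns like `str(data)[:200] + "..."`. That pattern appends an ellipsis even when nothing was cut. The small helper below is a sketch of our own and is not part of the original code. It only adds the ellipsis when text was actually truncated, and it tolerates non-string answers such as `None` or a Pydantic model.

```python
def preview(value, limit: int = 200) -> str:
    """Return str(value), truncated to `limit` characters, with '...' appended only when text was cut."""
    text = str(value)
    if len(text) <= limit:
        return text
    # Trim trailing whitespace at the cut point so the ellipsis sits next to a word
    return text[:limit].rstrip() + "..."
```

In `demo_market_intelligence`, for instance, the print line would become `print(f"Analysis: {preview(data, 200)}")`.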

```python
class WorkflowManager:
    def __init__(self):
        self.agents = []
        self.results = {}

    def add_agent_task(self, name: str, task_func, *args, **kwargs):
        """Add an agent task to the workflow"""
        self.agents.append({
            'name': name,
            'func': task_func,
            'args': args,
            'kwargs': kwargs
        })

    def execute_workflow(self, parallel=False):
        """Execute all agent tasks in the workflow, one after another"""
        print("🚀 Executing Multi-Agent Workflow")
        print("=" * 50)
        for agent_task in self.agents:
            name = agent_task['name']
            func = agent_task['func']
            args = agent_task['args']
            kwargs = agent_task['kwargs']
            print(f"\n🤖 Executing {name}...")
            try:
                result = func(*args, **kwargs)
                self.results[name] = result
                print(f"✅ {name} completed successfully")
            except Exception as e:
                self.results[name] = f"Error: {str(e)}"
                print(f"❌ {name} failed: {str(e)}")
            # `parallel` only skips this pause; tasks still run sequentially
            if not parallel:
                time.sleep(2)
        return self.results


def market_research_workflow(company_name: str, product_category: str):
    """Complete market research workflow"""
    workflow = WorkflowManager()
    workflow.add_agent_task(
        "Product Research",
        lambda: research_trending_products(product_category)
    )
    workflow.add_agent_task(
        "Competitive Analysis",
        lambda: analyze_competitors(company_name, product_category)
    )
    workflow.add_agent_task(
        "Social Sentiment",
        lambda: monitor_brand_sentiment(company_name)
    )
    return workflow.execute_workflow()


def research_trending_products(category: str):
    """Research trending products in a category"""
    with AdvancedNotteAgent(headless=True) as agent:
        task = f"Research trending {category} products, find top 5 products with prices, ratings, and key features."
        response = agent.agent.run(
            task=task,
            url="https://amazon.com"
        )
        return response.answer


def analyze_competitors(company: str, category: str):
    """Analyze competitors in the market"""
    with AdvancedNotteAgent(headless=True) as agent:
        task = f"Research {company} competitors in {category}, compare pricing strategies, features, and market positioning."
        response = agent.agent.run(
            task=task,
            url="https://google.com"
        )
        return response.answer


def monitor_brand_sentiment(brand: str):
    """Monitor brand sentiment across platforms"""
    with AdvancedNotteAgent(headless=True) as agent:
        task = f"Search for recent mentions of {brand} on social media and news, analyze sentiment and key themes."
        response = agent.agent.run(
            task=task,
            url="https://reddit.com"
        )
        return response.answer
```

We design a WorkflowManager that chains multiple AI agent tasks into a single orchestrated pipeline. Adding modular tasks such as product research, competitor analysis, and sentiment monitoring lets us execute a complete market research workflow. The tasks run in sequence. The `parallel` flag only removes the two-second pause between tasks; it does not run them concurrently. This turns the individual demos into a coordinated multi-agent system whose combined outputs support informed, real-world decision-making.
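`execute_workflow(parallel=True)` still runs tasks one at a time, so truly concurrent execution needs something like a thread pool. The sketch below makes several assumptions:

- Each task is a zero-argument callable that opens its own `AdvancedNotteAgent` session, as the three workflow helpers do.
- Running several browser sessions at once fits your machine and API quota.
- Notte tolerates concurrent sessions in separate threads. We have not verified this.

`run_tasks_parallel` is a hypothetical helper. It mirrors `execute_workflow`'s result format, including the `"Error: ..."` strings.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Any, Callable, Dict


def run_tasks_parallel(tasks: Dict[str, Callable[[], Any]], max_workers: int = 3) -> Dict[str, Any]:
    """Run named zero-argument tasks on a thread pool; failures are stored as 'Error: ...' strings."""
    results: Dict[str, Any] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each future back to the task name that produced it
        futures = {pool.submit(fn): name for name, fn in tasks.items()}
        for future in as_completed(futures):
            name = futures[future]
            try:
                results[name] = future.result()
            except Exception as e:
                results[name] = f"Error: {e}"
    # Return results in the order the tasks were given, not in completion order
    return {name: results[name] for name in tasks}
```

For example, `run_tasks_parallel({"Product Research": lambda: research_trending_products("electric vehicles"), "Social Sentiment": lambda: monitor_brand_sentiment("Tesla")})` would start both browser sessions at once. A thread pool suits this case because each task spends most of its time waiting on the network and the browser, not on Python computation.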

```python
def main():
    """Main function to run all demos"""
    print("🚀 Advanced Notte AI Agent Tutorial")
    print("=" * 60)
    print("Note: Make sure to set your GEMINI_API_KEY above!")
    print("Get your free API key at: https://makersuite.google.com/app/apikey")
    print("=" * 60)

    # Compare against the same placeholder string used in the setup cell
    if GEMINI_API_KEY == "USE YOUR OWN API KEY HERE":
        print("❌ Please set your GEMINI_API_KEY in the code above!")
        return

    try:
        print("\n1. E-commerce Research Demo")
        demo_ecommerce_research()
        print("\n2. News Intelligence Demo")
        demo_news_intelligence()
        print("\n3. Social Media Listening Demo")
        demo_social_listening()
        print("\n4. Market Intelligence Demo")
        demo_market_intelligence()
        print("\n5. Job Market Analysis Demo")
        demo_job_market_analysis()
        print("\n6. Content Strategy Demo")
        demo_content_strategy()
        print("\n7. Multi-Agent Workflow Demo")
        results = market_research_workflow("Tesla", "electric vehicles")
        print("Workflow Results:")
        for task, result in results.items():
            print(f"{task}: {str(result)[:150]}...")
    except Exception as e:
        print(f"❌ Error during execution: {str(e)}")
        print("💡 Tip: Make sure your Gemini API key is valid and you have an internet connection")


def quick_scrape(url: str, instructions: str = "Extract main content"):
    """Quick scraping function for simple data extraction"""
    with AdvancedNotteAgent(headless=True, max_steps=5) as agent:
        response = agent.agent.run(
            task=f"{instructions} from this webpage",
            url=url
        )
        return response.answer


def quick_search(query: str, num_results: int = 5):
    """Quick search function with structured results"""
    with AdvancedNotteAgent(headless=True, max_steps=10) as agent:
        task = f"Search for '{query}' and return the top {num_results} results with titles, URLs, and brief descriptions."
        response = agent.agent.run(
            task=task,
            url="https://google.com",
            response_format=SearchResult
        )
        return response.answer


def quick_form_fill(form_url: str, form_data: dict):
    """Quick form filling function"""
    # headless=False opens a visible browser window, which likely needs a display (it may fail in a headless Colab runtime)
    with AdvancedNotteAgent(headless=False, max_steps=15) as agent:
        data_str = ", ".join([f"{k}: {v}" for k, v in form_data.items()])
        task = f"Fill out the form with this information: {data_str}, then submit it."
        response = agent.agent.run(
            task=task,
            url=form_url
        )
        return response.answer


if __name__ == "__main__":
    print("🧪 Quick Test Examples:")
    print("=" * 30)

    print("1. Quick Scrape Example:")
    try:
        result = quick_scrape("https://news.ycombinator.com", "Extract the top 3 post titles")
        print(f"Scraped: {result}")
    except Exception as e:
        print(f"Error: {e}")

    print("\n2. Quick Search Example:")
    try:
        search_results = quick_search("latest AI news", 3)
        print(f"Search Results: {search_results}")
    except Exception as e:
        print(f"Error: {e}")

    print("\n3. Custom Agent Task:")
    try:
        with AdvancedNotteAgent(headless=True) as agent:
            response = agent.agent.run(
                task="Go to Wikipedia, search for 'artificial intelligence', and summarize the main article in 2 sentences.",
                url="https://wikipedia.org"
            )
            print(f"Wikipedia Summary: {response.answer}")
    except Exception as e:
        print(f"Error: {e}")

    main()

    print("\n✨ Tutorial Complete!")
    print("💡 Tips for success:")
    print("- Start with simple tasks and gradually increase complexity")
    print("- Use structured outputs (Pydantic models) for reliable data extraction")
    print("- Implement rate limiting to respect API quotas")
    print("- Handle errors gracefully in production workflows")
    print("- Combine scripting with AI for cost-effective automation")

    print("\n🚀 Next Steps:")
    print("- Customize the agents for your specific use cases")
    print("- Add error handling and retry logic for production")
    print("- Implement logging and monitoring for agent actions")
    print("- Scale up with Notte's hosted API service for enterprise features")
```

We wrap everything in a `main()` function that runs all demos end-to-end. We then add quick helper utilities (quick_scrape, quick_search, and quick_form_fill) to perform focused tasks with minimal setup. Before invoking the full workflow, the script runs quick tests of quick_scrape and quick_search plus a custom Wikipedia task. This way we can iterate fast while still exercising the entire agent pipeline.
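The closing tips suggest rate limiting to respect API quotas. The class methods approximate this with fixed `time.sleep` calls between sites. Below is a minimal sketch of a minimum-interval limiter, assuming a single-threaded caller. `MinIntervalLimiter` is a hypothetical name. The clock and sleep functions can be replaced so the logic can be checked without actually waiting.

```python
import time
from typing import Callable, Optional


class MinIntervalLimiter:
    """Ensure at least `interval` seconds pass between consecutive calls to wait()."""

    def __init__(self, interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.interval = interval
        self.clock = clock
        self.sleep = sleep
        self._last: Optional[float] = None  # time of the previous call, if any

    def wait(self) -> float:
        """Block until the interval has elapsed since the previous call; return the seconds slept."""
        now = self.clock()
        slept = 0.0
        if self._last is not None:
            remaining = self.interval - (now - self._last)
            if remaining > 0:
                self.sleep(remaining)
                slept = remaining
                now = self.clock()  # re-read the clock after sleeping
        self._last = now
        return slept
```

In `price_comparison`, for example, calling `limiter.wait()` just before each `self.agent.run(...)` would replace the fixed `time.sleep(1)`. The limiter then only pauses when calls arrive faster than the chosen interval.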

In conclusion, the tutorial shows how Notte, combined with Gemini, can become a powerful, multi-purpose AI web agent for research, monitoring, and analysis. It covers individual demos for e-commerce, news, and social media, and it also scales into multi-agent workflows that combine insights across domains. By following this guide, developers can quickly prototype AI agents in Colab, extend them with custom tasks, and adapt the system for business intelligence, automation, and creative use cases.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.