How do you build a language model that grows in capacity but keeps the computation for each token almost unchanged? The Inclusion AI team at Ant Group is pushing sparse large models in a methodical way with the release of Ling 2.0. Ling 2.0 is a reasoning-based language model family built on the idea that every activation should translate directly into stronger reasoning behavior. It is one of the latest approaches showing how to keep activation small while moving from 16B to 1T without rewriting the recipe. The series has three versions: Ling mini 2.0 with 16B total parameters and 1.4B activated, Ling flash 2.0 in the 100B class with 6.1B activated, and Ling 1T with 1T total parameters and about 50B active per token.

Sparse MoE as the central design

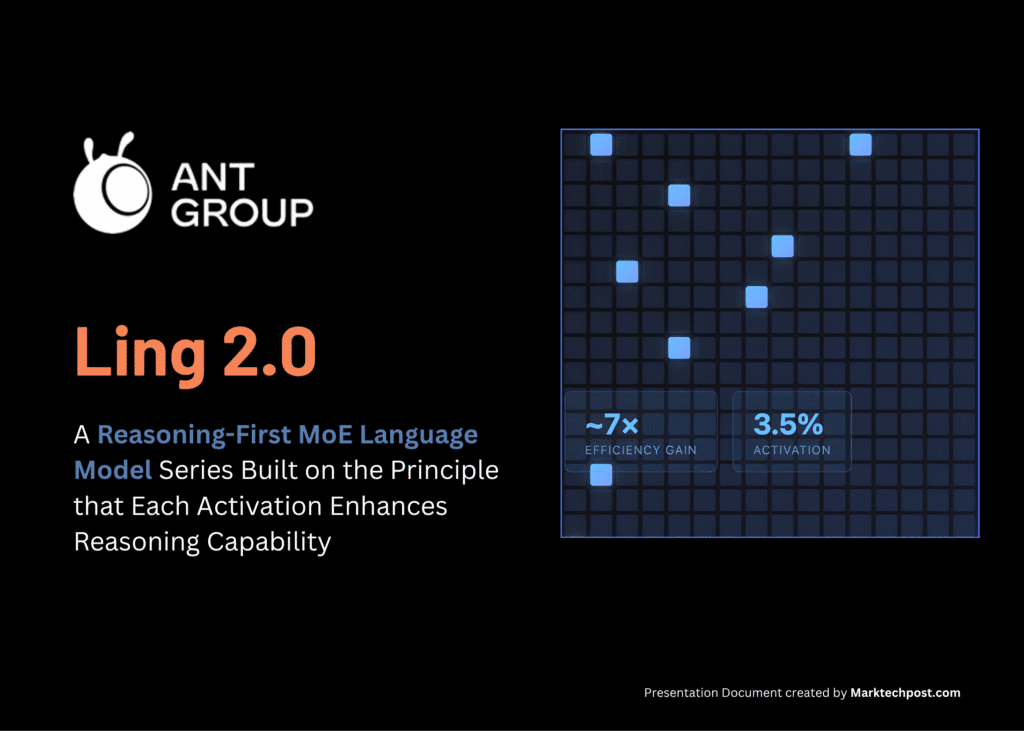

Every Ling 2.0 model uses the same sparse Mixture of Experts layer. Each layer has 256 routed experts and one shared expert. The router picks 8 routed experts for every token and the shared expert is always on, so about 9 of 257 experts are used per token. That is about 3.5 percent activation; the routed part alone, 8 of 256, is exactly the 1/32 activation ratio. The research team reports about 7 times efficiency compared with an equivalent dense model, because only a small part of the network is trained and served per token while the parameter pool stays very large.
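A minimal sketch of the per-token routing described above, in plain Python. It assumes sigmoid gate scores (the article mentions sigmoid scoring under the model architecture), top-8 selection over 256 routed experts, and an always-on shared expert. The gate logits are random stand-ins, and renormalizing the selected scores to sum to 1 is an assumption for this sketch, not a confirmed detail of Ling 2.0.

```python
import math
import random

NUM_ROUTED = 256   # routed experts per MoE layer (from the article)
TOP_K = 8          # routed experts selected per token (from the article)
NUM_SHARED = 1     # shared expert used by every token (from the article)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route_token(logits: list[float], top_k: int = TOP_K) -> list[tuple[int, float]]:
    """Pick the top-k routed experts by sigmoid score.

    Returns (expert_index, gate_weight) pairs, highest score first. The selected
    scores are renormalized to sum to 1 (an assumption for this sketch).
    """
    scores = [sigmoid(z) for z in logits]
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    total = sum(scores[i] for i in chosen)
    return [(i, scores[i] / total) for i in chosen]


def activation_ratios() -> tuple[float, float]:
    """Fraction of experts active per token: routed only, and including the shared one."""
    routed_only = TOP_K / NUM_ROUTED                                # 8/256 = 1/32
    with_shared = (TOP_K + NUM_SHARED) / (NUM_ROUTED + NUM_SHARED)  # 9/257
    return routed_only, with_shared


if __name__ == "__main__":
    rng = random.Random(0)
    token_logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_ROUTED)]
    picks = route_token(token_logits)
    print("routed experts:", [i for i, _ in picks], "+ shared expert")
    routed_only, with_shared = activation_ratios()
    print(f"routed activation: {routed_only:.4f} (1/32 = {1 / 32:.4f})")
    print(f"including shared expert: {with_shared:.4f}")
```

Only the 8 selected routed experts plus the shared expert would run their feed-forward computation for this token; the other 248 routed experts are skipped, which is where the per-token savings come from.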

Ling 2.0 brings coordinated advances across four layers of the stack: model architecture, pre-training, post-training, and the underlying FP8 infrastructure.

Model architecture: The architecture is chosen using the Ling Scaling Laws, not by trial and error. To support the Ling Scaling Laws, the team runs what they call the Ling Wind Tunnel, a fixed set of small MoE runs trained under the same data and routing rules, then fitted to power laws to predict loss, activation and expert balance at much larger sizes. This gives them a low-cost way to choose 1/32 activation, 256 routed experts and 1 shared expert before committing GPUs to 1T scale. Routing is aux-loss-free with sigmoid scoring, and the stack uses QK Norm, an MTP loss and partial RoPE to keep deep models stable. Because the same law picked the shape, Ling mini 2.0, Ling flash 2.0 and Ling 1T share a consistent design across sizes.
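The Wind Tunnel workflow, fitting small runs to a power law and extrapolating, can be sketched as an ordinary least-squares fit in log-log space. The functional form L(C) = a·C^(-b), the compute values, and the noise-free synthetic losses below are illustrative assumptions, not Ling's data or its actual fitted laws, which may include extra terms.

```python
import math


def fit_power_law(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = a * x**(-b) by least squares on log y = log a - b * log x."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(xs)
    mx, my = sum(lx) / n, sum(ly) / n
    cov = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    var = sum((u - mx) ** 2 for u in lx)
    slope = cov / var                  # slope in log-log space equals -b
    a = math.exp(my - slope * mx)      # intercept gives log a
    return a, -slope


def predict(a: float, b: float, x: float) -> float:
    """Evaluate the fitted power law at a (possibly much larger) scale x."""
    return a * x ** (-b)


if __name__ == "__main__":
    # Synthetic "small runs": losses generated from a known law (a=20, b=0.05)
    # without noise, purely to show the fit-then-extrapolate workflow.
    compute = [1e18, 3e18, 1e19, 3e19, 1e20]
    losses = [20.0 * c ** (-0.05) for c in compute]
    a, b = fit_power_law(compute, losses)
    print(f"fitted a={a:.3f}, b={b:.4f}")
    print(f"extrapolated loss at 1e24 FLOPs: {predict(a, b, 1e24):.3f}")
```

The same fit-and-extrapolate step could in principle be applied to any metric that follows a power law across scales, such as the expert-balance statistics the article mentions; with real runs, the fit would also need to account for noise.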

Pre-training: The series is trained on more than 20T tokens, starting with a 4K context and a data mix in which reasoning-heavy sources such as math and code gradually increase to almost half of the corpus. A later mid-training stage extends the context to about 32K on a selected 150B-token slice, then injects another 600B tokens of high-quality chain of thought, before finally stretching to 128K with YaRN while preserving short-context quality. This pipeline ensures that long context and reasoning are introduced early, not just added at the SFT step.
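YaRN extends RoPE by leaving high-frequency dimensions alone, dividing low-frequency ones by the context scale factor, and blending linearly in between. The sketch follows the "NTK-by-parts" interpolation and attention-temperature formula from the YaRN paper. The head dimension of 128, RoPE base of 10000, ramp bounds alpha=1 and beta=32, and the 32K to 128K factor of 4 are assumptions for illustration, not Ling's published configuration; it also ignores Ling's partial RoPE.

```python
import math


def rope_inv_freqs(head_dim: int, base: float = 10000.0) -> list[float]:
    """Standard RoPE angular frequencies theta_i = base^(-2i/d)."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def yarn_inv_freqs(head_dim: int, orig_ctx: int, scale: float,
                   base: float = 10000.0, alpha: float = 1.0,
                   beta: float = 32.0) -> list[float]:
    """YaRN 'NTK-by-parts' interpolation of RoPE frequencies."""
    out = []
    for theta in rope_inv_freqs(head_dim, base):
        wavelength = 2 * math.pi / theta
        r = orig_ctx / wavelength        # full rotations within the original window
        if r < alpha:
            gamma = 0.0                  # low frequency: fully interpolate (divide by scale)
        elif r > beta:
            gamma = 1.0                  # high frequency: leave unchanged
        else:
            gamma = (r - alpha) / (beta - alpha)  # linear ramp in between
        out.append((1 - gamma) * theta / scale + gamma * theta)
    return out


def yarn_attention_scale(scale: float) -> float:
    """YaRN's recommended sqrt(1/t) = 0.1 * ln(s) + 1 for the attention temperature."""
    return 0.1 * math.log(scale) + 1.0


if __name__ == "__main__":
    orig, target = 32_768, 131_072
    s = target / orig                    # 4.0
    base_f = rope_inv_freqs(128)
    yarn_f = yarn_inv_freqs(128, orig, s)
    print("highest-frequency dim unchanged:", yarn_f[0] == base_f[0])
    print("lowest-frequency dim ratio:", yarn_f[-1] / base_f[-1])
    print("attention scale:", round(yarn_attention_scale(s), 4))
```

Keeping the high-frequency dimensions untouched is what lets this kind of extension preserve short-context behavior, which matches the article's point about stretching to 128K without losing short-context quality.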

Post-training: Alignment is split into a capability pass and a preference pass. First, Decoupled Fine-Tuning teaches the model to switch between quick responses and deep reasoning through different system prompts. Then an evolutionary CoT stage expands and diversifies reasoning chains, and finally a sentence-level policy optimization with a Group Arena Reward aligns outputs to human judgments at fine granularity. This staged alignment is what lets a non-thinking base model reach strong math, code and instruction-following performance without inflating every answer.
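To make "sentence-level" concrete, here is a hedged sketch of a group-relative, clipped policy-gradient surrogate whose importance ratio and clipping are computed per sentence rather than per token. The group normalization follows GRPO-style practice; the naive sentence splitter, the length-normalized log-ratio, the 0.2 clip range, and the scalar stand-in rewards are all assumptions. This is not Ling's published objective, and the Group Arena Reward itself is not modeled.

```python
import math
import re
from statistics import mean, pstdev


def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize rewards within a group of responses to the same prompt."""
    mu, sd = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sd + eps) for r in rewards]


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter (hypothetical; real systems segment more carefully)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def sentence_surrogate(new_logps: list[float], old_logps: list[float],
                       advantage: float, clip_eps: float = 0.2) -> float:
    """Clipped surrogate for one sentence, using a length-normalized ratio."""
    # Average per-token log-ratio over the sentence, then exponentiate.
    log_ratio = sum(n - o for n, o in zip(new_logps, old_logps)) / len(new_logps)
    ratio = math.exp(log_ratio)
    clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
    # Pessimistic (minimum) of the unclipped and clipped objectives, as in PPO.
    return min(ratio * advantage, clipped * advantage)


if __name__ == "__main__":
    rewards = [0.9, 0.2, 0.6, 0.3]       # stand-in scores for 4 sampled responses
    advs = group_advantages(rewards)
    response = "First compute the sum. Then check the edge case!"
    for sentence in split_sentences(response):
        # In this sketch every sentence of response 0 shares that response's advantage.
        print(sentence, "->", round(advs[0], 3))
    print(sentence_surrogate([-1.0, -0.5], [-1.2, -0.7], advs[0]))
```

Clipping per sentence means one sentence that drifts far from the old policy is capped on its own, without the whole response or individual tokens being the unit of control.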

Infrastructure: Ling 2.0 trains natively in FP8 with safeguards, keeping the loss curve within a small gap of BF16 while gaining about 15% utilization on the reported hardware. The larger speedups, around 40 percent, come from heterogeneous pipeline parallelism, interleaved one-forward-one-backward (1F1B) execution and partitioning that is aware of the MTP block, not from precision alone. Together with Warmup Stable Merge, which replaces learning-rate decay with checkpoint merging, this systems stack makes 1T-scale runs practical on current clusters.
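Warmup Stable Merge can be pictured as two pieces: a learning-rate schedule that warms up and then stays flat with no decay phase, and a merge step that averages recent checkpoints in place of that decay. The linear warmup, uniform default weights, and plain-dict parameter format below are assumptions for illustration; the article does not specify Ling's merge weights or checkpoint spacing.

```python
from typing import Optional


def wsm_lr(step: int, peak_lr: float, warmup_steps: int) -> float:
    """Linear warmup to peak_lr, then constant: no decay phase."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    return peak_lr


def merge_checkpoints(checkpoints: list[dict[str, list[float]]],
                      weights: Optional[list[float]] = None) -> dict[str, list[float]]:
    """Weighted average of parameter dicts (name -> flat list of floats).

    Weights default to uniform and are normalized, so they need not sum to 1.
    """
    n = len(checkpoints)
    if weights is None:
        weights = [1.0] * n
    total = sum(weights)
    merged = {}
    for name, values in checkpoints[0].items():
        merged[name] = [
            sum(w * ckpt[name][i] for w, ckpt in zip(weights, checkpoints)) / total
            for i in range(len(values))
        ]
    return merged


if __name__ == "__main__":
    print([round(wsm_lr(s, 3e-4, 4), 6) for s in range(6)])
    # Three toy "checkpoints" saved during the constant-LR phase.
    ckpts = [{"w": [0.9, 2.1]}, {"w": [1.0, 2.0]}, {"w": [1.1, 1.9]}]
    print(merge_checkpoints(ckpts))
```

Because the learning rate never decays, training can be extended without restarting a schedule, and the merged weights can be produced from whichever recent checkpoints are available.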

Understanding the Results

The evaluations show a consistent pattern: small-activation MoE models deliver competitive quality while keeping per-token compute low. Ling mini 2.0 has 16B total parameters, activates 1.4B per token, and is reported to perform in the 7B to 8B dense band. (Reddit) Ling flash 2.0 keeps the same 1/32 activation recipe, has about 100B total parameters and activates 6.1B per token. Ling 1T is the flagship non-thinking model, with 1T total parameters and about 50B active per token, preserving the 1/32 sparsity and extending the same Ling Scaling Laws to trillion scale.
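The reported sizes can be checked with quick arithmetic. The sketch computes each model's overall active-parameter fraction and a rough per-token forward cost using the common rule of thumb of about 2 FLOPs per active parameter; that rule and the comparison with a 7B dense model are approximations, not measurements from the Ling release. The overall fractions come out above 1/32 largely because 1/32 describes routed-expert activation, while attention, embeddings and the shared expert run for every token.

```python
MODELS = {
    # name: (total parameters, active parameters per token), as reported
    "Ling mini 2.0": (16e9, 1.4e9),
    "Ling flash 2.0": (100e9, 6.1e9),   # "100B class" total
    "Ling 1T": (1e12, 50e9),            # "about 50B" active
}


def active_fraction(total: float, active: float) -> float:
    """Share of all parameters that participate in one token's forward pass."""
    return active / total


def forward_flops_per_token(active_params: float) -> float:
    """Rule-of-thumb forward cost: about 2 FLOPs per active parameter."""
    return 2.0 * active_params


if __name__ == "__main__":
    for name, (total, active) in MODELS.items():
        print(f"{name}: {active_fraction(total, active):.2%} of parameters active, "
              f"~{forward_flops_per_token(active) / 1e9:.1f} GFLOPs/token")
    dense_7b = forward_flops_per_token(7e9)
    mini = forward_flops_per_token(MODELS["Ling mini 2.0"][1])
    print(f"7B dense vs Ling mini 2.0 per-token compute: {dense_7b / mini:.1f}x")
```

By this estimate Ling mini 2.0 spends roughly a fifth of the per-token compute of a 7B dense model while being reported to match the 7B to 8B dense band.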

Key Takeaways

- Ling 2.0 is built around a 1/32 activation MoE architecture, chosen using the Ling Scaling Laws so that 256 routed experts plus 1 shared expert stay optimal from 16B up to 1T.

- Ling mini 2.0 has 16B total parameters with 1.4B activated per token and is reported to match 7B to 8B dense models while generating more than 300 tokens per second in simple QA on H20 GPUs.

- Ling flash 2.0 keeps the same recipe, has 6.1B active parameters and sits in the 100B range, giving a higher-capacity option while keeping per-token compute far below that of a dense model of the same size.

- Ling 1T exposes the full design: 1T total parameters with about 50B active per token, 128K context, and an Evo-CoT plus LPO style post-training stack to push efficient reasoning.

- Across all sizes, efficiency gains above 7 times over dense baselines come from the combination of sparse activation, FP8 training, and a shared training schedule, so quality scales predictably without retuning the recipe for each size.

This release demonstrates a complete sparse MoE stack. The Ling Scaling Laws identify 1/32 activation as optimal, the architecture locks in 256 routed experts plus 1 shared expert, and the same shape is used from 16B to 1T. Training, context extension and preference optimization are all aligned to that choice, so small activation does not hold back math, code or long context, and FP8 plus heterogeneous pipelines keep cost in a practical range. It is a clear signal that trillion-scale reasoning can be organized around fixed sparsity instead of growing dense compute.

Check out the Weights on HF, Repo and Paper.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.