In this tutorial, we combine the analytical power of XGBoost with the conversational intelligence of LangChain. We build an end-to-end pipeline that can generate synthetic datasets, train an XGBoost model, evaluate its performance, and visualize key insights, all orchestrated by modular LangChain tools. By doing this, we demonstrate how conversational AI can interact seamlessly with machine learning workflows, enabling an agent to manage the entire ML lifecycle in a structured and human-like manner. Through this process, we experience how the integration of reasoning-driven automation can make machine learning both interactive and explainable.

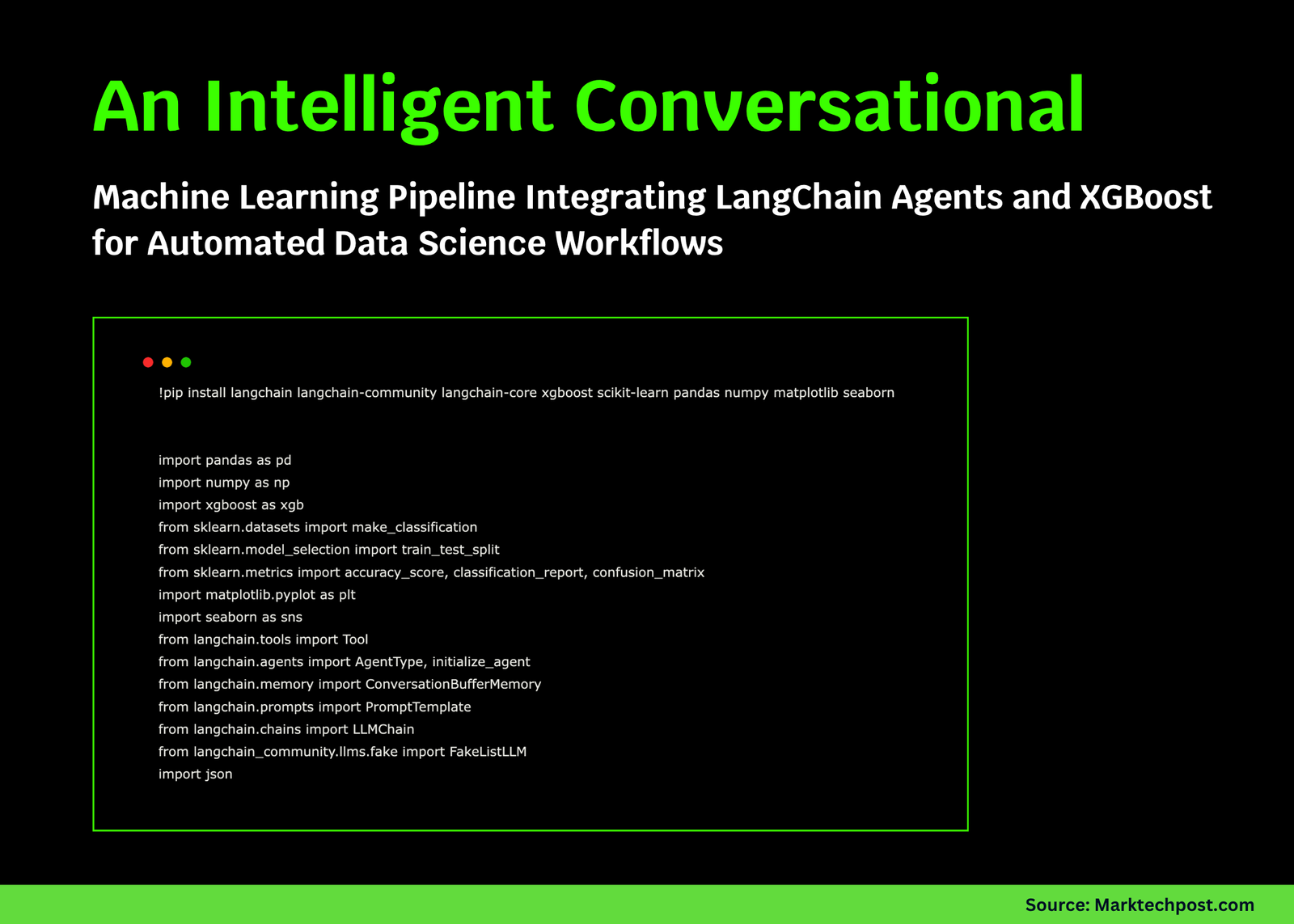

!pip install langchain langchain-community langchain-core xgboost scikit-learn pandas numpy matplotlib seaborn

import pandas as pd
import numpy as np
import xgboost as xgb
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
import matplotlib.pyplot as plt
import seaborn as sns

# Only Tool is used in the code below. These import paths follow the older
# LangChain package layout and may need adjusting on newer releases.
from langchain.tools import Tool
from langchain.agents import AgentType, initialize_agent
from langchain.memory import ConversationBufferMemory
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain
from langchain_community.llms.fake import FakeListLLM
import json

We start by installing and importing all of the essential libraries required for this tutorial. We use LangChain for agentic AI integration, XGBoost and scikit-learn for machine learning, and Pandas, NumPy, Matplotlib, and Seaborn for data handling and visualization.
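Since the import paths above depend on which releases are installed, it can help to confirm the package versions before running the rest of the notebook. The following is a minimal sketch using only the standard library; the helper name `installed_versions` is hypothetical and not part of the tutorial code.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # The distribution is not installed in this environment.
            found[name] = None
    return found


if __name__ == "__main__":
    # Distribution names as they are passed to pip in the install command.
    wanted = ["langchain", "langchain-community", "xgboost", "scikit-learn"]
    for name, ver in installed_versions(wanted).items():
        print(f"{name}: {ver or 'not installed'}")
```

If any entry prints as not installed, rerunning the pip command is the first thing to try.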

class DataManager:
    """Manages dataset generation and preprocessing"""

    def __init__(self, n_samples=1000, n_features=20, random_state=42):
        self.n_samples = n_samples
        self.n_features = n_features
        self.random_state = random_state
        self.X_train, self.X_test, self.y_train, self.y_test = None, None, None, None
        self.feature_names = [f'feature_{i}' for i in range(n_features)]

    def generate_data(self):
        """Generate synthetic classification dataset"""
        # n_informative + n_redundant must not exceed n_features (15 + 5 = 20 here)
        X, y = make_classification(
            n_samples=self.n_samples,
            n_features=self.n_features,
            n_informative=15,
            n_redundant=5,
            random_state=self.random_state
        )
        # Hold out 20% of the samples for testing
        self.X_train, self.X_test, self.y_train, self.y_test = train_test_split(
            X, y, test_size=0.2, random_state=self.random_state
        )
        return f"Dataset generated: {self.X_train.shape[0]} train samples, {self.X_test.shape[0]} test samples"

    def get_data_summary(self):
        """Return summary statistics of the dataset"""
        if self.X_train is None:
            return "No data generated yet. Please generate data first."
        summary = {
            "train_samples": self.X_train.shape[0],
            "test_samples": self.X_test.shape[0],
            "features": self.X_train.shape[1],
            "class_distribution": {
                "train": {0: int(np.sum(self.y_train == 0)), 1: int(np.sum(self.y_train == 1))},
                "test": {0: int(np.sum(self.y_test == 0)), 1: int(np.sum(self.y_test == 1))}
            }
        }
        return json.dumps(summary, indent=2)

We define the DataManager class to handle dataset generation and preprocessing tasks. Here, we create synthetic classification data using scikit-learn’s make_classification function, split it into training and testing sets (80/20), and generate a concise summary containing sample counts, feature dimensions, and class distributions.
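To see what such a summary contains without installing anything, here is a small standard-library sketch of the same idea: an 80/20 split followed by per-class counts serialized as JSON. It is only an illustration under stated assumptions: `split_labels` and `summarize` are hypothetical helpers, the labels are a hand-made list, and the shuffling does not reproduce scikit-learn’s train_test_split.

```python
import json
import random
from collections import Counter


def split_labels(labels, test_size=0.2, seed=42):
    """Shuffle a copy of the labels and split off a test_size fraction."""
    shuffled = list(labels)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_size))
    # First n_test shuffled items form the test set, the rest the training set.
    return shuffled[n_test:], shuffled[:n_test]


def summarize(train, test):
    """Build a summary dict with sample counts and per-class counts."""
    def counts(labels):
        c = Counter(labels)
        return {0: c.get(0, 0), 1: c.get(1, 0)}

    return {
        "train_samples": len(train),
        "test_samples": len(test),
        "class_distribution": {"train": counts(train), "test": counts(test)},
    }


labels = [0] * 50 + [1] * 50  # hand-made, balanced binary labels (assumption)
train, test = split_labels(labels)
print(json.dumps(summarize(train, test), indent=2))
```

Note that json.dumps turns the integer class keys into strings in the printed output.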

class XGBoostManager:
    """Manages XGBoost model training and evaluation"""

    def __init__(self):
        self.model = None
        self.predictions = None
        self.accuracy = None
        self.feature_importance = None

    def train_model(self, X_train, y_train, params=None):
        """Train XGBoost classifier"""
        if params is None:
            params = {
                'max_depth': 6,
                'learning_rate': 0.1,
                'n_estimators': 100,
                'objective': 'binary:logistic',
                'random_state': 42
            }
        self.model = xgb.XGBClassifier(**params)
        self.model.fit(X_train, y_train)
        return f"Model trained successfully with {params['n_estimators']} estimators"

    def evaluate_model(self, X_test, y_test):
        """Evaluate model performance"""
        if self.model is None:
            return "No model trained yet. Please train model first."
        self.predictions = self.model.predict(X_test)
        self.accuracy = accuracy_score(y_test, self.predictions)
        report = classification_report(y_test, self.predictions, output_dict=True)
        # Precision, recall, and F1 are reported for the positive class (label 1)
        result = {
            "accuracy": float(self.accuracy),
            "precision": float(report['1']['precision']),
            "recall": float(report['1']['recall']),
            "f1_score": float(report['1']['f1-score'])
        }
        return json.dumps(result, indent=2)

    def get_feature_importance(self, feature_names, top_n=10):
        """Get top N most important features"""
        if self.model is None:
            return "No model trained yet."
        importance = self.model.feature_importances_
        feature_imp_df = pd.DataFrame({
            'feature': feature_names,
            'importance': importance
        }).sort_values('importance', ascending=False)
        return feature_imp_df.head(top_n).to_string()

    def visualize_results(self, X_test, y_test, feature_names):
        """Create visualizations for model results"""
        if self.model is None:
            print("No model trained yet.")
            return
        if self.predictions is None:
            # Make sure predictions exist even if evaluate_model was not called first
            self.predictions = self.model.predict(X_test)
        fig, axes = plt.subplots(2, 2, figsize=(15, 12))

        # Panel 1: confusion matrix
        cm = confusion_matrix(y_test, self.predictions)
        sns.heatmap(cm, annot=True, fmt="d", cmap='Blues', ax=axes[0, 0])
        axes[0, 0].set_title('Confusion Matrix')
        axes[0, 0].set_ylabel('True Label')
        axes[0, 0].set_xlabel('Predicted Label')

        # Panel 2: ten largest feature importances
        importance = self.model.feature_importances_
        indices = np.argsort(importance)[-10:]
        axes[0, 1].barh(range(len(indices)), importance[indices])
        axes[0, 1].set_yticks(range(len(indices)))
        axes[0, 1].set_yticklabels([feature_names[i] for i in indices])
        axes[0, 1].set_title('Top 10 Feature Importances')
        axes[0, 1].set_xlabel('Importance')

        # Panel 3: true vs predicted class counts
        axes[1, 0].hist([y_test, self.predictions], label=['True', 'Predicted'], bins=2)
        axes[1, 0].set_title('True vs Predicted Distribution')
        axes[1, 0].legend()
        axes[1, 0].set_xticks([0, 1])

        # Panel 4: hard-coded illustrative values, not measured from the model
        train_sizes = [0.2, 0.4, 0.6, 0.8, 1.0]
        train_scores = [0.7, 0.8, 0.85, 0.88, 0.9]
        axes[1, 1].plot(train_sizes, train_scores, marker="o")
        axes[1, 1].set_title('Learning Curve (Simulated)')
        axes[1, 1].set_xlabel('Training Set Size')
        axes[1, 1].set_ylabel('Accuracy')
        axes[1, 1].grid(True)

        plt.tight_layout()
        plt.show()

We implement XGBoostManager to train, evaluate, and interpret our classifier end-to-end. We fit an XGBClassifier, compute accuracy along with precision, recall, and F1 for the positive class, extract the top feature importances, and visualize the results using a confusion matrix, an importance chart, a distribution comparison, and a learning curve panel. Note that the learning curve is simulated: its values are hard-coded for illustration rather than measured from the model.
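The precision, recall, and F1 values reported for class 1 follow directly from the confusion-matrix counts. As a minimal sketch, here is that arithmetic in plain Python; `binary_metrics` is a hypothetical helper written for illustration, the labels are made up, and the tutorial itself relies on scikit-learn’s classification_report.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    # Guard against empty denominators (no predicted or no actual positives).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1_score": f1,
    }


# Made-up labels: 3 true positives, 1 false positive, 1 false negative, 3 true negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

With these counts every metric works out to 0.75, which makes the example easy to check by hand.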

def create_ml_agent(data_manager, xgb_manager):
    """Create LangChain agent with ML tools"""
    # Each tool receives a single string input; the lambdas accept and ignore it
    tools = [
        Tool(
            name="GenerateData",
            func=lambda x: data_manager.generate_data(),
            description="Generate synthetic dataset for training. No input needed."
        ),
        Tool(
            name="DataSummary",
            func=lambda x: data_manager.get_data_summary(),
            description="Get summary statistics of the dataset. No input needed."
        ),
        Tool(
            name="TrainModel",
            func=lambda x: xgb_manager.train_model(
                data_manager.X_train, data_manager.y_train
            ),
            description="Train XGBoost model on the dataset. No input needed."
        ),
        Tool(
            name="EvaluateModel",
            func=lambda x: xgb_manager.evaluate_model(
                data_manager.X_test, data_manager.y_test
            ),
            description="Evaluate trained model performance. No input needed."
        ),
        Tool(
            name="FeatureImportance",
            func=lambda x: xgb_manager.get_feature_importance(
                data_manager.feature_names, top_n=10
            ),
            description="Get top 10 most important features. No input needed."
        )
    ]
    return tools

We define the create_ml_agent function to integrate machine learning tasks into the LangChain ecosystem. Here, we wrap the key operations (data generation, summarization, model training, evaluation, and feature analysis) into LangChain tools, so that a conversational agent can carry out the end-to-end ML workflow through natural language instructions. Despite its name, the function returns the list of tools rather than a constructed agent.
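Because each tool is essentially a named callable with a description, the wrapping pattern can be sketched without LangChain at all. The example below is a simplified stand-in under stated assumptions: `SimpleTool`, `build_registry`, and `call_tool` are hypothetical names, not LangChain APIs, and the functions accept and ignore a single string input in the same way as the tools in this tutorial.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimpleTool:
    """Hypothetical stand-in holding a name, a callable, and a description."""
    name: str
    func: Callable[[str], str]
    description: str


def build_registry(tools: List[SimpleTool]) -> Dict[str, SimpleTool]:
    """Index tools by name so a caller can select one from a text instruction."""
    return {tool.name: tool for tool in tools}


def call_tool(registry: Dict[str, SimpleTool], name: str, tool_input: str = "") -> str:
    """Run the named tool, or report which names are available."""
    if name not in registry:
        return f"Unknown tool '{name}'. Available: {sorted(registry)}"
    return registry[name].func(tool_input)


# Shared state plays the role of the manager objects captured by the lambdas.
state = {"rows": 0}


def generate(_: str) -> str:
    state["rows"] = 1000
    return "Dataset generated: 1000 rows"


registry = build_registry([
    SimpleTool("GenerateData", generate, "Generate a dataset. No input needed."),
    SimpleTool("DataSummary", lambda _: f"rows={state['rows']}", "Summarize the dataset."),
])

print(call_tool(registry, "GenerateData"))
print(call_tool(registry, "DataSummary"))
print(call_tool(registry, "TrainModel"))  # not registered in this sketch
```

Looking tools up by name, rather than by list position, is closer to how an agent would choose among them based on their descriptions.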

def run_tutorial():
    """Execute the complete tutorial"""
    print("=" * 80)
    print("ADVANCED LANGCHAIN + XGBOOST TUTORIAL")
    print("=" * 80)

    data_mgr = DataManager(n_samples=1000, n_features=20)
    xgb_mgr = XGBoostManager()
    tools = create_ml_agent(data_mgr, xgb_mgr)

    # The tools are called directly, in the order they appear in the list
    print("\n1. Generating Dataset...")
    result = tools[0].func("")
    print(result)

    print("\n2. Dataset Summary:")
    summary = tools[1].func("")
    print(summary)

    print("\n3. Training XGBoost Model...")
    train_result = tools[2].func("")
    print(train_result)

    print("\n4. Evaluating Model:")
    eval_result = tools[3].func("")
    print(eval_result)

    print("\n5. Top Feature Importances:")
    importance = tools[4].func("")
    print(importance)

    print("\n6. Generating Visualizations...")
    xgb_mgr.visualize_results(
        data_mgr.X_test,
        data_mgr.y_test,
        data_mgr.feature_names
    )

    print("\n" + "=" * 80)
    print("TUTORIAL COMPLETE!")
    print("=" * 80)
    print("\nKey Takeaways:")
    print("- LangChain tools can wrap ML operations")
    print("- XGBoost provides powerful gradient boosting")
    print("- Agent-based approach enables conversational ML pipelines")
    print("- Easy integration with existing ML workflows")

if __name__ == "__main__":
    run_tutorial()

We orchestrate the full workflow with run_tutorial(), where we generate data, train and evaluate the XGBoost model, and surface feature importances. We then visualize the results and print key takeaways. In this script the tools are invoked directly in a fixed order rather than selected by an LLM, which lets us step through the pipeline that a conversational agent would drive.
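The call order in run_tutorial() matters because later steps depend on state created by earlier ones, and the managers return a message instead of raising when that state is missing. The sketch below illustrates that behaviour with a stub; `StubManager`, `run_plan`, and `fresh_actions` are hypothetical and stand in for the real managers and for an agent’s sequence of tool calls.

```python
class StubManager:
    """Hypothetical stand-in that returns guard messages instead of raising."""

    def __init__(self):
        self.trained = False

    def train(self, _=""):
        self.trained = True
        return "Model trained successfully"

    def evaluate(self, _=""):
        if not self.trained:
            return "No model trained yet. Please train model first."
        return "Evaluation complete"


def run_plan(steps, actions):
    """Run named steps in order and collect (step, output) pairs."""
    return [(name, actions[name]("")) for name in steps]


def fresh_actions():
    """Create a new manager so each plan starts from an untrained state."""
    manager = StubManager()
    return {"TrainModel": manager.train, "EvaluateModel": manager.evaluate}


good = run_plan(["TrainModel", "EvaluateModel"], fresh_actions())
bad = run_plan(["EvaluateModel", "TrainModel"], fresh_actions())

for name, output in good + bad:
    print(f"{name}: {output}")
```

Returning a readable message for an out-of-order step gives a calling agent something it can act on, for example by running the missing step and retrying.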

In conclusion, we created a fully functional ML pipeline that blends LangChain’s tool-based agentic framework with the XGBoost classifier’s predictive strength. We see how LangChain can serve as a conversational interface for performing complex ML operations such as data generation, model training, and evaluation, all in a logical and guided manner. This hands-on walkthrough helps us appreciate how combining LLM-powered orchestration with machine learning can simplify experimentation, enhance interpretability, and pave the way for more intelligent, dialogue-driven data science workflows.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.