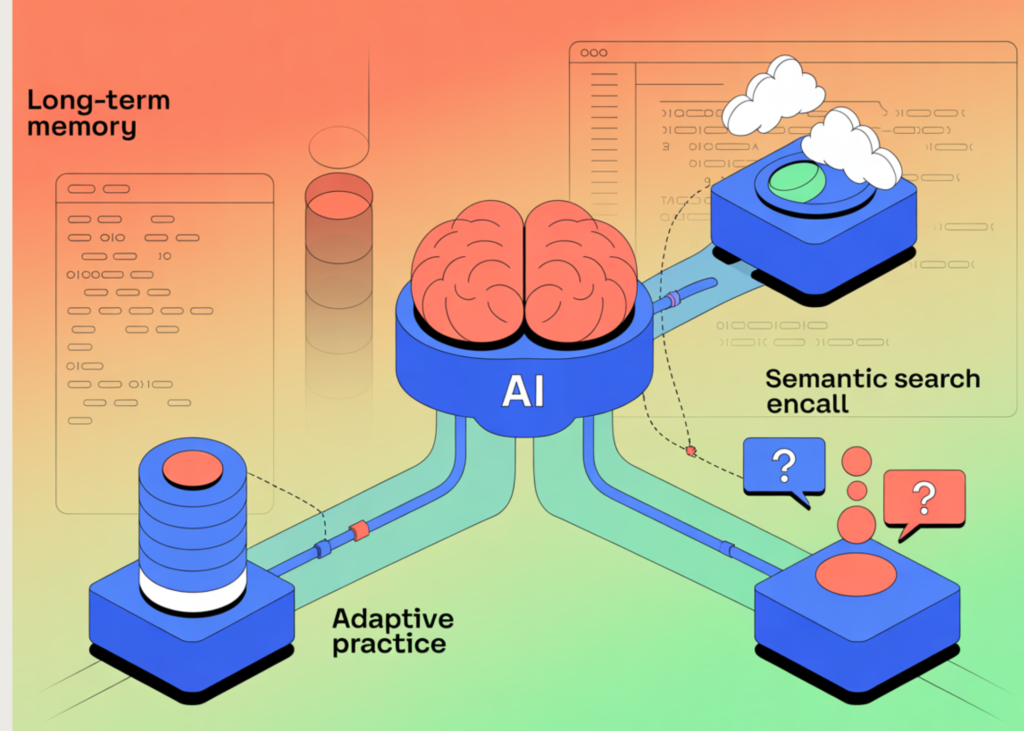

In this tutorial, we build a fully stateful personal tutor agent that moves beyond short-lived chat interactions and learns continuously over time. We design the system to persist user preferences, track weak learning areas, and selectively recall only relevant past context when responding. By combining durable storage, semantic retrieval, and adaptive prompting, we show how an agent can behave more like a long-term tutor than a stateless chatbot. We also focus on keeping the agent self-managed, context-aware, and able to improve its guidance without requiring the user to repeat information.

!pip -q install "langchain>=0.2.12" "langchain-openai>=0.1.20" "sentence-transformers>=3.0.1" "faiss-cpu>=1.8.0.post1" "pydantic>=2.7.0"

import os, json, sqlite3, uuid
from datetime import datetime, timezone
from typing import List, Dict, Any
import numpy as np
import faiss
from pydantic import BaseModel, Field
from sentence_transformers import SentenceTransformer
from langchain_core.messages import SystemMessage, HumanMessage, AIMessage
from langchain_core.language_models.chat_models import BaseChatModel
from langchain_core.outputs import ChatGeneration, ChatResult

# Storage locations: SQLite database, FAISS index, and index metadata
DB_PATH="/content/tutor_memory.db"
STORE_DIR="/content/tutor_store"
INDEX_PATH=f"{STORE_DIR}/mem.faiss"
META_PATH=f"{STORE_DIR}/mem_meta.json"
os.makedirs(STORE_DIR, exist_ok=True)

# UTC timestamp in ISO 8601 format
def now(): return datetime.now(timezone.utc).isoformat()

# Open a new SQLite connection
def db(): return sqlite3.connect(DB_PATH)

# Create the three tables if they do not exist yet
def init_db():
    c=db(); cur=c.cursor()
    cur.execute("""CREATE TABLE IF NOT EXISTS events(
        id TEXT PRIMARY KEY,user_id TEXT,session_id TEXT,role TEXT,content TEXT,ts TEXT)""")
    cur.execute("""CREATE TABLE IF NOT EXISTS memories(
        id TEXT PRIMARY KEY,user_id TEXT,kind TEXT,content TEXT,tags TEXT,importance REAL,ts TEXT)""")
    cur.execute("""CREATE TABLE IF NOT EXISTS weak_topics(
        user_id TEXT,topic TEXT,mastery REAL,last_seen TEXT,notes TEXT,PRIMARY KEY(user_id,topic))""")
    c.commit(); c.close()

We set up the execution environment and import all the libraries required to build a stateful agent. We also define core paths, utility functions for timestamps and database connections, and the SQLite schema for events, memories, and weak topics. This establishes the foundational infrastructure that the rest of the system depends on.
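To make the event log concrete, here is a minimal sketch that exercises the same `events` table layout against an in-memory SQLite database. The `make_demo_db` and `history` helpers, and the `log_event` variant that takes a connection argument, are illustrative assumptions rather than part of the tutorial code; only the standard library is used.

```python
import sqlite3
import uuid
from contextlib import closing
from datetime import datetime, timezone


def now() -> str:
    return datetime.now(timezone.utc).isoformat()


def make_demo_db() -> sqlite3.Connection:
    # ":memory:" keeps the demo self-contained; the tutorial uses a file path.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE IF NOT EXISTS events(
               id TEXT PRIMARY KEY, user_id TEXT, session_id TEXT,
               role TEXT, content TEXT, ts TEXT)"""
    )
    return conn


def log_event(conn, user_id, session_id, role, content):
    # "with conn" commits on success and rolls back if the insert raises.
    with conn:
        conn.execute(
            "INSERT INTO events VALUES (?,?,?,?,?,?)",
            (str(uuid.uuid4()), user_id, session_id, role, content, now()),
        )


def history(conn, user_id):
    # Hypothetical helper: return one user's messages in insertion order.
    with closing(conn.cursor()) as cur:
        cur.execute(
            "SELECT role, content FROM events WHERE user_id=? ORDER BY rowid",
            (user_id,),
        )
        return cur.fetchall()


conn = make_demo_db()
log_event(conn, "user_demo", "s1", "user", "I struggled with recursion.")
log_event(conn, "user_demo", "s2", "assistant", "Let's practice base cases.")
log_event(conn, "other_user", "s3", "user", "Unrelated message.")
rows = history(conn, "user_demo")
print(rows)
```

Filtering by `user_id` across different session IDs is what lets the log span sessions: the two `user_demo` rows come back together even though they were written under `s1` and `s2`.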

# A durable fact or preference extracted from the conversation
class MemoryItem(BaseModel):
    kind:str
    content:str
    tags:List[str]=Field(default_factory=list)
    importance:float=Field(0.5,ge=0,le=1)

# Evidence that the user struggled with or improved on a topic
class WeakTopicSignal(BaseModel):
    topic:str
    signal:str
    evidence:str
    confidence:float=Field(0.5,ge=0,le=1)

class Extracted(BaseModel):
    memories:List[MemoryItem]=Field(default_factory=list)
    weak_topics:List[WeakTopicSignal]=Field(default_factory=list)

# Rule-based stand-in used when no OpenAI API key is set
class FallbackTutorLLM(BaseChatModel):
    @property
    def _llm_type(self)->str: return "fallback_tutor"

    def _generate(self, messages, stop=None, run_manager=None, **kwargs)->ChatResult:
        last=messages[-1].content if messages else ""
        content=self._respond(last)
        return ChatResult(generations=[ChatGeneration(message=AIMessage(content=content))])

    def _respond(self, text:str)->str:
        t=text.lower()
        if "extract_memories" in t:
            out={"memories":[],"weak_topics":[]}
            if "recursion" in t:
                out["weak_topics"].append({"topic":"recursion","signal":"struggled",
                    "evidence":"User indicates difficulty with recursion.","confidence":0.85})
            if "prefer" in t or "i like" in t:
                out["memories"].append({"kind":"preference","content":"User prefers concise explanations with examples.",
                    "tags":["style","preference"],"importance":0.55})
            return json.dumps(out)
        if "generate_practice" in t:
            return "\n".join([
                "Targeted Practice (Recursion):",
                "1) Implement factorial(n) recursively, then iteratively.",
                "2) Recursively sum a list; state the base case explicitly.",
                "3) Recursive binary search; return index or -1.",
                "4) Trace fibonacci(6) call tree; count repeated subcalls.",
                "5) Recursively reverse a string; discuss time/space.",
                "Mini-quiz: Why does missing a base case cause infinite recursion?"
            ])
        return "Tell me what you're studying and what felt hard; I'll remember and adapt practice next time."

# Use OpenAI when a key is available, otherwise the fallback model
def get_llm():
    key=os.environ.get("OPENAI_API_KEY","").strip()
    if key:
        from langchain_openai import ChatOpenAI
        return ChatOpenAI(model="gpt-4o-mini",temperature=0.2)
    return FallbackTutorLLM()

We define the data models and the fallback language model used when no external API key is available. The Pydantic models formalize how memories and weak-topic signals are represented and extracted, and the fallback returns deterministic responses so the pipeline still runs without an OpenAI key. This lets the agent consistently convert raw conversations into structured, actionable memory.
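A hosted model does not always return bare JSON, so the payload that feeds `Extracted` may arrive wrapped in extra prose. The sketch below shows one tolerant way to recover the two expected keys before validation. `parse_extraction` is a hypothetical helper and not part of the tutorial code; it assumes the payload is the outermost `{...}` span in the reply.

```python
import json


def parse_extraction(raw: str) -> dict:
    """Return {'memories': [...], 'weak_topics': [...]} from raw model text."""
    empty = {"memories": [], "weak_topics": []}
    # Assumption: the JSON object is the outermost {...} span in the reply.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        return empty
    try:
        data = json.loads(raw[start:end + 1])
    except json.JSONDecodeError:
        return empty
    if not isinstance(data, dict):
        return empty
    result = {}
    for key in empty:
        value = data.get(key, [])
        # Keep only lists of dicts; any other shape becomes an empty list.
        if isinstance(value, list):
            result[key] = [x for x in value if isinstance(x, dict)]
        else:
            result[key] = []
    return result


wrapped = (
    "Sure! Here is the JSON:\n"
    '{"memories": [], "weak_topics": [{"topic": "recursion"}]}\n'
    "Hope that helps."
)
print(parse_extraction(wrapped))
print(parse_extraction("not json at all"))
```

The result can then be passed to the Pydantic models for field-level validation, which still rejects items that lack required fields.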

EMBED_MODEL="sentence-transformers/all-MiniLM-L6-v2"
embedder=SentenceTransformer(EMBED_MODEL)

# Metadata list is kept in the same order as vectors in the FAISS index
def load_meta():
    if os.path.exists(META_PATH):
        with open(META_PATH,"r") as f: return json.load(f)
    return []

def save_meta(meta):
    with open(META_PATH,"w") as f: json.dump(meta,f)

# L2-normalize rows so inner product equals cosine similarity
def normalize(x):
    n=np.linalg.norm(x,axis=1,keepdims=True)+1e-12
    return x/n

# Reload a saved index, or start a new inner-product index
def load_index(dim):
    if os.path.exists(INDEX_PATH): return faiss.read_index(INDEX_PATH)
    return faiss.IndexFlatIP(dim)

def save_index(ix): faiss.write_index(ix, INDEX_PATH)

EXTRACTOR_SYSTEM = (
    "You are a memory extractor for a stateful personal tutor.\n"
    "Return ONLY JSON with keys: memories (list of {kind,content,tags,importance}) "
    "and weak_topics (list of {topic,signal,evidence,confidence}).\n"
    "Store durable information only; do not store secrets."
)

llm=get_llm()
init_db()
# Embedding dimension is read from a probe encoding
dim=embedder.encode(["x"],convert_to_numpy=True).shape[1]
ix=load_index(dim)
meta=load_meta()

We set up embedding-based semantic memory using vector representations and similarity search. We load the sentence-embedding model, create or reload a FAISS inner-product index together with its metadata file, and define the extractor prompt. Because vectors are L2-normalized before they are indexed or queried, inner-product scores equal cosine similarity, which enables relevance-based recall rather than blindly loading all past context.
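The pairing of `normalize` with `faiss.IndexFlatIP` works because the inner product of two unit-length vectors is their cosine similarity. The following standard-library sketch checks that identity on a small example; the helper names `l2_normalize`, `dot`, and `cosine` are illustrative, and the `eps` term mirrors the `1e-12` guard in `normalize`.

```python
import math


def l2_normalize(v, eps=1e-12):
    # Same idea as normalize(): divide by the vector length plus a tiny guard.
    norm = math.sqrt(sum(x * x for x in v)) + eps
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


a = [3.0, 4.0]
b = [4.0, 3.0]
ip_of_normalized = dot(l2_normalize(a), l2_normalize(b))
print(round(ip_of_normalized, 6), round(cosine(a, b), 6))  # 0.96 0.96
```

Since both vectors have length 5, the cosine is 24/25 = 0.96, and the inner product of the normalized vectors matches it up to the negligible `eps` term.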

# Append one message to the events log
def log_event(user_id, session_id, role, content):
    c=db(); cur=c.cursor()
    cur.execute("INSERT INTO events VALUES (?,?,?,?,?,?)",
                (str(uuid.uuid4()),user_id,session_id,role,content,now()))
    c.commit(); c.close()

# Insert or update a topic's mastery score; new topics start at 0.5
def upsert_weak(user_id, sig:WeakTopicSignal):
    c=db(); cur=c.cursor()
    cur.execute("SELECT mastery,notes FROM weak_topics WHERE user_id=? AND topic=?",(user_id,sig.topic))
    row=cur.fetchone()
    delta=(-0.10 if sig.signal=="struggled" else 0.10 if sig.signal=="improved" else 0.0)*sig.confidence
    if row is None:
        mastery=float(np.clip(0.5+delta,0,1)); notes=sig.evidence
        cur.execute("INSERT INTO weak_topics VALUES (?,?,?,?,?)",(user_id,sig.topic,mastery,now(),notes))
    else:
        # Keep only the last 2000 characters of accumulated notes
        mastery=float(np.clip(row[0]+delta,0,1)); notes=(row[1]+" | "+sig.evidence)[-2000:]
        cur.execute("UPDATE weak_topics SET mastery=?,last_seen=?,notes=? WHERE user_id=? AND topic=?",
                    (mastery,now(),notes,user_id,sig.topic))
    c.commit(); c.close()

# Save a memory to SQLite, then add its embedding to the vector index
def store_memory(user_id, m:MemoryItem):
    mem_id=str(uuid.uuid4())
    c=db(); cur=c.cursor()
    cur.execute("INSERT INTO memories VALUES (?,?,?,?,?,?,?)",
                (mem_id,user_id,m.kind,m.content,json.dumps(m.tags),float(m.importance),now()))
    c.commit(); c.close()
    v=embedder.encode([m.content],convert_to_numpy=True).astype("float32")
    v=normalize(v); ix.add(v)
    meta.append({"mem_id":mem_id,"user_id":user_id,"kind":m.kind,"content":m.content,
                 "tags":m.tags,"importance":m.importance,"ts":now()})
    save_index(ix); save_meta(meta)

We implement the write path for persistent storage of events, memories, and weak topics. Each message is logged, mastery scores are nudged down or up according to the extracted signal and its confidence, and every memory is saved to SQLite and added to the vector index. This ensures that user interactions and long-term learning signals are stored reliably across sessions, so agent memory stays durable beyond a single run.
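The mastery rule inside `upsert_weak` is easier to follow as a worked example: each signal moves the score by 0.10 times its confidence, downward for `"struggled"` and upward for `"improved"`, and the result is clipped to the range [0, 1]. The sketch below restates that arithmetic in plain Python; `update_mastery` is a hypothetical standalone helper, and the sequence of signals is made up for illustration.

```python
def update_mastery(current, signal, confidence, step=0.10):
    # Unknown signals leave the score unchanged, as in upsert_weak.
    direction = {"struggled": -1.0, "improved": 1.0}.get(signal, 0.0)
    delta = direction * step * confidence
    return min(1.0, max(0.0, current + delta))


mastery = 0.5  # starting value for a topic that has not been seen before
trace = []
for signal, confidence in [("struggled", 0.85), ("struggled", 0.60), ("improved", 1.0)]:
    mastery = update_mastery(mastery, signal, confidence)
    trace.append(round(mastery, 3))
print(trace)  # [0.415, 0.355, 0.455]
```

Scaling the step by confidence means a weakly supported signal barely moves the score, while clipping keeps repeated signals from pushing mastery outside the valid range.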

# Ask the LLM for structured memories; fall back to an empty result on bad output
def extract(user_text)->Extracted:
    msg="extract_memories\n\nUser message:\n"+user_text
    r=llm.invoke([SystemMessage(content=EXTRACTOR_SYSTEM),HumanMessage(content=msg)]).content
    try:
        d=json.loads(r)
        return Extracted(
            memories=[MemoryItem(**x) for x in d.get("memories",[])],
            weak_topics=[WeakTopicSignal(**x) for x in d.get("weak_topics",[])]
        )
    except Exception:
        return Extracted()

# Retrieve this user's memories that are similar to the query
def recall(user_id, query, k=6):
    if ix.ntotal==0: return []
    q=embedder.encode([query],convert_to_numpy=True).astype("float32")
    q=normalize(q)
    scores, idxs = ix.search(q,k)
    out=[]
    for s,i in zip(scores[0].tolist(), idxs[0].tolist()):
        if i<0 or i>=len(meta): continue
        m=meta[i]
        # Skip other users' memories and weak matches
        if m["user_id"]!=user_id or s<0.25: continue
        out.append({**m,"score":float(s)})
    # Re-rank by similarity weighted by importance
    out.sort(key=lambda r: r["score"]*(0.6+0.4*r["importance"]), reverse=True)
    return out

# The five topics with the lowest mastery for this user
def weak_snapshot(user_id):
    c=db(); cur=c.cursor()
    cur.execute("SELECT topic,mastery,last_seen FROM weak_topics WHERE user_id=? ORDER BY mastery ASC LIMIT 5",(user_id,))
    rows=cur.fetchall(); c.close()
    return [{"topic":t,"mastery":float(m),"last_seen":ls} for t,m,ls in rows]

# One full turn: log, extract, store, recall, generate, log
def tutor_turn(user_id, session_id, user_text):
    log_event(user_id,session_id,"user",user_text)
    ex=extract(user_text)
    for w in ex.weak_topics: upsert_weak(user_id,w)
    for m in ex.memories: store_memory(user_id,m)
    rel=recall(user_id,user_text,k=6)
    weak=weak_snapshot(user_id)
    prompt={
        "recalled_memories":[{"kind":x["kind"],"content":x["content"],"score":x["score"]} for x in rel],
        "weak_topics":weak,
        "user_message":user_text
    }
    gen = llm.invoke([SystemMessage(content="You are a personal tutor. Use recalled_memories only if relevant."),
                      HumanMessage(content="generate_practice\n\n"+json.dumps(prompt))]).content
    log_event(user_id,session_id,"assistant",gen)
    return gen, rel, weak

USER_ID="user_demo"
SESSION_ID=str(uuid.uuid4())

print("✅ Ready. Example run:\n")
ans, rel, weak = tutor_turn(USER_ID, SESSION_ID, "Last week I struggled with recursion. I prefer concise explanations.")
print(ans)
print("\nRecalled:", [r["content"] for r in rel])
print("Weak topics:", weak)

We orchestrate the full tutor interaction loop, combining extraction, storage, recall, and response generation. We update mastery scores, retrieve relevant memories, and dynamically generate targeted practice. This completes the transformation from a stateless chatbot into a long-term, adaptive tutor.
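The filtering and ordering that `recall` applies after the vector search can be shown on its own, without FAISS or an embedding model. In this sketch the `hits` list and its scores are made-up illustrative data, and `rerank` is a hypothetical helper that reuses the same threshold (0.25) and the same weighting, `score * (0.6 + 0.4 * importance)`.

```python
def rerank(hits, user_id, min_score=0.25):
    # Drop other users' memories and anything below the similarity threshold.
    kept = [h for h in hits if h["user_id"] == user_id and h["score"] >= min_score]
    # Order by similarity weighted by importance, highest first.
    return sorted(
        kept,
        key=lambda h: h["score"] * (0.6 + 0.4 * h["importance"]),
        reverse=True,
    )


hits = [
    {"user_id": "u1", "content": "Prefers concise explanations", "score": 0.70, "importance": 0.20},
    {"user_id": "u1", "content": "Struggles with base cases", "score": 0.62, "importance": 0.90},
    {"user_id": "u2", "content": "Another user's memory", "score": 0.95, "importance": 1.00},
    {"user_id": "u1", "content": "Weakly related note", "score": 0.10, "importance": 1.00},
]
ranked = rerank(hits, "u1")
print([h["content"] for h in ranked])
```

Here the memory with the lower raw similarity (0.62) ranks first because its weighted value is 0.62 × 0.96 = 0.5952, compared with 0.70 × 0.68 = 0.476 for the other. One consequence of filtering after the search is that a query can return fewer than `k` results when other users' memories occupy the top slots.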

In conclusion, we implemented a tutor agent that remembers, reasons, and adapts across sessions. We showed how structured memory extraction, long-term persistence, and relevance-based recall work together to overcome the “goldfish memory” limitation common in most agents. The resulting system continually refines its understanding of a user’s weaknesses and proactively generates targeted practice, demonstrating a practical foundation for building stateful, long-horizon AI agents that improve with sustained interaction.
