In this tutorial, we build our own custom GPT-style chat system from scratch using a local Hugging Face model. We start by loading a lightweight instruction-tuned model that understands conversational prompts, then wrap it inside a structured chat framework that includes a system role, user memory, and assistant responses. We define how the agent interprets context, constructs messages, and optionally uses small built-in tools to fetch local data or simulated search results. By the end, we have a fully functional conversational model that behaves like a custom GPT running locally.

!pip install transformers accelerate sentencepiece --quiet

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM
from typing import List, Tuple, Optional
import textwrap, json, os

We begin by installing the essential libraries and importing the required modules. We make sure the environment has all necessary dependencies, such as transformers, torch, and sentencepiece, ready for use. This setup allows us to work seamlessly with Hugging Face models inside Google Colab.
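Before loading a multi-gigabyte model, it can help to confirm that the packages from the install step are actually present. The following is a minimal sketch using only the standard library; the helper name `check_dependencies` and the `REQUIRED` list are illustrative assumptions, not part of the tutorial's code.

```python
import importlib.util
from importlib import metadata

# Packages installed by the setup cell, plus torch (preinstalled on Colab).
# "accelerate" is what transformers relies on for device_map="auto".
REQUIRED = ["torch", "transformers", "accelerate", "sentencepiece"]

def check_dependencies(packages):
    """Return {package: version string, or None if it cannot be imported}."""
    report = {}
    for name in packages:
        # find_spec locates a top-level package without importing it.
        if importlib.util.find_spec(name) is None:
            report[name] = None
            continue
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Importable, but no distribution metadata under this name.
            report[name] = "unknown"
    return report

for pkg, version in check_dependencies(REQUIRED).items():
    status = version if version is not None else "MISSING"
    print(f"{pkg:15s} {status}")
```

Any package reported as `MISSING` should be installed before continuing to the model-loading step.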

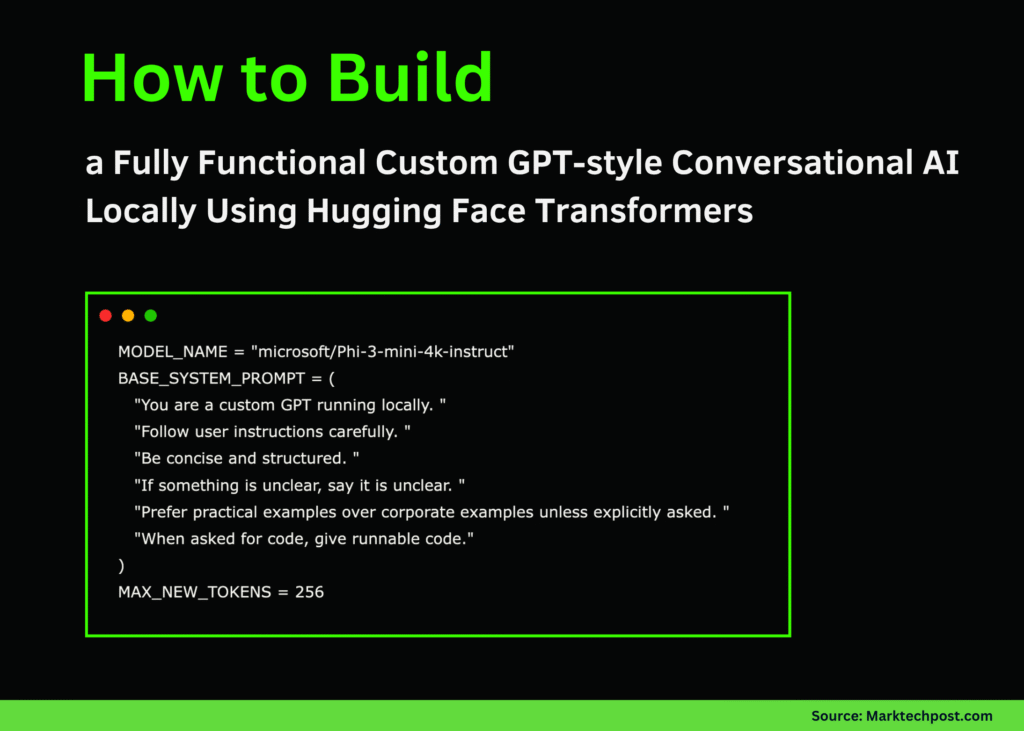

MODEL_NAME = "microsoft/Phi-3-mini-4k-instruct"

BASE_SYSTEM_PROMPT = (
    "You are a custom GPT running locally. "
    "Follow user instructions carefully. "
    "Be concise and structured. "
    "If something is unclear, say it's unclear. "
    "Prefer practical examples over corporate examples unless explicitly asked. "
    "When asked for code, give runnable code."
)

MAX_NEW_TOKENS = 256

We configure our model name, define the system prompt that governs the assistant’s behavior, and set the token limit for each reply. We establish how our custom GPT should respond: concise, structured, and practical. This section defines the foundation of our model’s identity and instruction style.
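Because the system prompt is just a concatenation of short rules, it can be convenient to assemble it from a list when experimenting with different personas. The sketch below assumes a hypothetical helper, `build_system_prompt`, that is not part of the tutorial; it only illustrates one way to keep the rules editable.

```python
from typing import List

def build_system_prompt(persona: str, rules: List[str]) -> str:
    """Join a persona sentence and a list of rules into one system prompt.

    Hypothetical helper for illustration; whitespace is normalized so that
    each rule is separated by exactly one space, and empty rules are skipped.
    """
    cleaned = [r.strip() for r in rules if r.strip()]
    return " ".join([persona.strip(), *cleaned])

RULES = [
    "Follow user instructions carefully.",
    "Be concise and structured.",
    "When asked for code, give runnable code.",
]

prompt = build_system_prompt("You are a custom GPT running locally.", RULES)
print(prompt)
```

Swapping the persona sentence or reordering `RULES` changes the assistant's instructions without touching the rest of the pipeline.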

print("Loading model...")
tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
if tokenizer.pad_token_id is None:
    # Fall back to the end-of-sequence token when no pad token is defined.
    tokenizer.pad_token_id = tokenizer.eos_token_id

model = AutoModelForCausalLM.from_pretrained(
    MODEL_NAME,
    # Half precision on GPU saves memory; CPU inference stays in float32.
    torch_dtype=torch.float16 if torch.cuda.is_available() else torch.float32,
    device_map="auto"
)
model.eval()
print("Model loaded.")

We load the tokenizer and model from Hugging Face into memory and prepare them for inference. We automatically adjust the device mapping based on the available hardware, using GPU acceleration when possible. Once loaded, our model is ready to generate responses.
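The dtype choice in the loading step has a direct effect on memory. As a rough back-of-the-envelope sketch, the weights alone take about (number of parameters) × (bytes per parameter). The figure of roughly 3.8 billion parameters for Phi-3-mini is taken from its model card and is treated here as an assumption; activations and the key-value cache need additional memory on top.

```python
# Bytes needed to store one parameter in each dtype used by the loader.
BYTES_PER_PARAM = {"float16": 2, "float32": 4}

def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

# Assumption: about 3.8 billion parameters, per the model card.
ASSUMED_PARAMS = 3_800_000_000

for dtype in ("float16", "float32"):
    gb = weight_memory_gb(ASSUMED_PARAMS, dtype)
    print(f"{dtype}: ~{gb:.1f} GB for weights")
```

This is why the loader picks `float16` whenever a GPU is available: it halves the weight footprint compared with `float32`.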

ConversationHistory = List[Tuple[str, str]]
history: ConversationHistory = [("system", BASE_SYSTEM_PROMPT)]

def wrap_text(s: str, w: int = 100) -> str:
    return "\n".join(textwrap.wrap(s, width=w))

def build_chat_prompt(history: ConversationHistory, user_msg: str) -> str:
    prompt_parts = []
    for role, content in history:
        if role == "system":
            prompt_parts.append(f"<|system|>\n{content}\n")
        elif role == "user":
            prompt_parts.append(f"<|user|>\n{content}\n")
        elif role == "assistant":
            prompt_parts.append(f"<|assistant|>\n{content}\n")
    # Append the new user turn, then an open assistant tag for the model to complete.
    prompt_parts.append(f"<|user|>\n{user_msg}\n")
    prompt_parts.append("<|assistant|>\n")
    return "".join(prompt_parts)

We initialize the conversation history, starting with a system role, and create a prompt builder to format messages. We define how user and assistant turns are arranged in a consistent conversational structure, so the model always receives the dialogue context in the same format.
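To make the prompt layout concrete, here is a small self-contained sketch that re-implements the same role-tag formatting without loading any model and prints the resulting string. The name `render_prompt` and the `ROLE_TAGS` table are illustrative; the tags themselves are the ones used by `build_chat_prompt`.

```python
from typing import List, Tuple

ConversationHistory = List[Tuple[str, str]]

# Role tags as used by the tutorial's prompt builder.
ROLE_TAGS = {
    "system": "<|system|>",
    "user": "<|user|>",
    "assistant": "<|assistant|>",
}

def render_prompt(history: ConversationHistory, user_msg: str) -> str:
    """Format past turns plus a new user message, ending with an open assistant tag."""
    parts = [
        f"{ROLE_TAGS[role]}\n{content}\n"
        for role, content in history
        if role in ROLE_TAGS  # unknown roles are skipped, as in the original
    ]
    parts.append(f"{ROLE_TAGS['user']}\n{user_msg}\n")
    parts.append(f"{ROLE_TAGS['assistant']}\n")
    return "".join(parts)

demo_history = [
    ("system", "Be concise."),
    ("user", "Hi"),
    ("assistant", "Hello!"),
]
demo_prompt = render_prompt(demo_history, "What can you do?")
print(demo_prompt)
```

The prompt always ends with an open `<|assistant|>` tag, which is what cues the model to write the next assistant turn. As an alternative to hand-built tags, Transformers tokenizers also provide `apply_chat_template`, which formats a list of role/content messages using the template shipped with the model.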

def local_tool_router(user_msg: str) -> Optional[str]:
    msg = user_msg.strip().lower()
    if msg.startswith("search:"):
        query = user_msg.split(":", 1)[-1].strip()
        return f"Search results about '{query}':\n- Key point 1\n- Key point 2\n- Key point 3"
    if msg.startswith("docs:"):
        topic = user_msg.split(":", 1)[-1].strip()
        return f"Documentation extract on '{topic}':\n1. The agent orchestrates tools.\n2. The model consumes output.\n3. Responses become memory."
    return None

We add a lightweight tool router that extends our GPT’s capabilities by simulating tasks such as search or documentation retrieval. We define logic to detect special prefixes such as “search:” or “docs:” in user queries and return canned context for them. This simple agentic design gives our assistant additional context to draw on.
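As the number of prefixes grows, the chain of `if` statements can be replaced by a lookup table. The sketch below shows one possible variant of the prefix routing described above; the `TOOLS` registry, the `route` function, and the stub handlers are hypothetical names introduced for illustration, and the handlers return placeholder text just like the simulated tools in the tutorial.

```python
from typing import Callable, Dict, Optional

def fake_search(query: str) -> str:
    """Stub handler: returns placeholder search results."""
    return f"Search results about '{query}':\n- Key point 1\n- Key point 2"

def fake_docs(topic: str) -> str:
    """Stub handler: returns a placeholder documentation extract."""
    return f"Documentation extract on '{topic}':\n1. The agent orchestrates tools."

# Registry mapping a prefix (without the colon) to its handler.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": fake_search,
    "docs": fake_docs,
}

def route(user_msg: str) -> Optional[str]:
    """Return tool output if the message starts with a known 'prefix:', else None."""
    prefix, sep, rest = user_msg.strip().partition(":")
    if not sep:
        return None  # no colon, so this is an ordinary chat message
    handler = TOOLS.get(prefix.strip().lower())
    return handler(rest.strip()) if handler else None

print(route("Search: agentic ai with local models"))
print(route("What is a tokenizer?"))
```

Adding a new tool then only requires writing a handler and registering it in `TOOLS`; messages with an unknown prefix fall through to normal chat.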

def generate_reply(history: ConversationHistory, user_msg: str) -> str:
    # If the message triggers a tool, append its output as extra context.
    tool_context = local_tool_router(user_msg)
    if tool_context:
        user_msg = user_msg + "\n\nUseful context:\n" + tool_context
    prompt = build_chat_prompt(history, user_msg)
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=MAX_NEW_TOKENS,
            do_sample=True,
            top_p=0.9,
            temperature=0.6,
            pad_token_id=tokenizer.eos_token_id
        )
    # Decode only the newly generated tokens. Splitting the decoded text on
    # "<|assistant|>" is unreliable because skip_special_tokens=True can strip
    # the role tags from the output.
    prompt_len = inputs["input_ids"].shape[-1]
    reply = tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True).strip()
    # Store both turns so the next prompt includes them.
    history.append(("user", user_msg))
    history.append(("assistant", reply))
    return reply

def save_history(history: ConversationHistory, path: str = "chat_history.json") -> None:
    data = [{"role": r, "content": c} for (r, c) in history]
    with open(path, "w") as f:
        json.dump(data, f, indent=2)

def load_history(path: str = "chat_history.json") -> ConversationHistory:
    # Start a fresh conversation when no saved history exists.
    if not os.path.exists(path):
        return [("system", BASE_SYSTEM_PROMPT)]
    with open(path, "r") as f:
        data = json.load(f)
    return [(item["role"], item["content"]) for item in data]

We define the primary reply generation function, which combines history, tool context, and model inference to produce coherent outputs. We also add functions to save and load past conversations for persistence. This snippet forms the operational core of our custom GPT.
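The persistence helpers can be exercised without a model. The following self-contained sketch repeats the same save and load logic against a temporary directory to show that a history survives the JSON round trip; the `default_system` parameter is an assumption added here so the example does not depend on `BASE_SYSTEM_PROMPT`.

```python
import json
import os
import tempfile
from typing import List, Tuple

ConversationHistory = List[Tuple[str, str]]

def save_history(history: ConversationHistory, path: str) -> None:
    """Write the history as a JSON list of {"role", "content"} objects."""
    data = [{"role": r, "content": c} for (r, c) in history]
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=2)

def load_history(path: str, default_system: str = "Be concise.") -> ConversationHistory:
    """Read a saved history, or start fresh with only a system message."""
    if not os.path.exists(path):
        return [("system", default_system)]
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)
    # JSON has no tuple type, so rebuild the (role, content) tuples explicitly.
    return [(item["role"], item["content"]) for item in data]

original = [("system", "Be concise."), ("user", "Hi"), ("assistant", "Hello!")]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "chat_history.json")
    save_history(original, path)
    restored = load_history(path)
    fresh = load_history(os.path.join(tmp, "does_not_exist.json"))

print("Round trip preserved history:", restored == original)
print("Missing file starts fresh:", fresh)
```

Storing each turn as a role/content object keeps the file readable and compatible with the message format that most chat tooling expects.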

print("\n--- Demo turn 1 ---")
demo_reply_1 = generate_reply(history, "Explain what this custom GPT setup is doing in 5 bullet points.")
print(wrap_text(demo_reply_1))

print("\n--- Demo turn 2 ---")
demo_reply_2 = generate_reply(history, "search: agentic ai with local models")
print(wrap_text(demo_reply_2))

def interactive_chat():
    print("\nChat ready. Type 'exit' to stop.")
    while True:
        try:
            user_msg = input("\nUser: ").strip()
        except EOFError:
            break
        if user_msg.lower() in ("exit", "quit", "q"):
            break
        reply = generate_reply(history, user_msg)
        print("\nAssistant:\n" + wrap_text(reply))

# Uncomment to start an interactive session.
# interactive_chat()
print("\nCustom GPT initialized successfully.")

We test the entire setup by running demo prompts and displaying the generated responses. We also create an optional interactive chat loop to converse directly with the assistant. By the end, we confirm that our custom GPT runs locally and responds in real time.
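The control flow of the chat loop can be checked without a model by injecting the reply function and feeding scripted inputs. This is a minimal sketch under that assumption; `run_chat` and `echo_model` are hypothetical stand-ins for `interactive_chat` and `generate_reply`, and skipping empty lines is an addition not present in the original loop.

```python
from typing import Callable, Iterable, List

EXIT_WORDS = ("exit", "quit", "q")

def run_chat(inputs: Iterable[str], reply_fn: Callable[[str], str]) -> List[str]:
    """Feed messages to reply_fn until an exit word appears; return the replies."""
    replies = []
    for raw in inputs:
        user_msg = raw.strip()
        if user_msg.lower() in EXIT_WORDS:
            break
        if not user_msg:
            continue  # skip empty lines instead of sending them to the model
        replies.append(reply_fn(user_msg))
    return replies

def echo_model(msg: str) -> str:
    """Stub in place of the real model call."""
    return f"(stub) you said: {msg}"

scripted = ["hello", "   ", "search: local models", "EXIT", "never reached"]
replies = run_chat(scripted, echo_model)
for r in replies:
    print(r)
```

Passing the reply function as an argument makes it easy to swap the stub for the real generation function once the model is loaded.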

In conclusion, we designed and ran a custom conversational agent that mirrors GPT-style reasoning without relying on any external services. We saw how local models can be made interactive through prompt orchestration, lightweight tool routing, and conversational memory management. This approach helps us understand the internal logic behind commercial GPT systems, and it lets us experiment with our own rules, behaviors, and integrations in a transparent and fully offline manner.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.