In this tutorial, we explore LitServe, a lightweight and powerful serving framework that lets us deploy machine learning models as APIs with minimal effort. We build and test several endpoints that demonstrate real-world functionality such as text generation, batching, streaming, multi-task processing, and caching, all running locally without relying on external APIs. By the end, we understand how to design ML serving pipelines that are efficient, flexible, and easy to extend for production-level applications. Check out the FULL CODES here.

```python
!pip install litserve torch transformers -q

import time
from typing import List

import litserve as ls
import torch
from transformers import pipeline
```

We begin by setting up our environment on Google Colab and installing all required dependencies, including LitServe, PyTorch, and Transformers. We then import the libraries and modules we need to define, serve, and test our APIs.
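The API classes in this tutorial decide between GPU and CPU by inspecting the device string that LitServe passes to `setup`. That decision can be factored into a small helper. A minimal sketch, assuming LitServe device strings look like `"cpu"`, `"cuda"`, or `"cuda:N"`; `to_pipeline_device` is a hypothetical name, and the CUDA check is passed in (for example `torch.cuda.is_available()`) so the function can be exercised without a GPU.

```python
def to_pipeline_device(device: str, cuda_available: bool) -> int:
    """Hypothetical helper: map a LitServe-style device string to a pipeline device index.

    Assumes device strings of the form "cpu", "cuda", or "cuda:N".
    Returns the GPU index N (0 when no index is given) if a GPU is requested
    and available; otherwise returns -1, the transformers pipeline's CPU value.
    """
    if not (device.startswith("cuda") and cuda_available):
        return -1
    _, _, index = device.partition(":")  # "cuda:1" -> "1", "cuda" -> ""
    return int(index) if index else 0


print(to_pipeline_device("cuda:1", True))   # → 1
print(to_pipeline_device("cuda:0", False))  # → -1
```

Inside `setup`, this would be called as `pipeline(..., device=to_pipeline_device(device, torch.cuda.is_available()))`, which also handles multi-GPU strings such as `"cuda:1"` that an exact comparison with `"cuda"` would miss.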

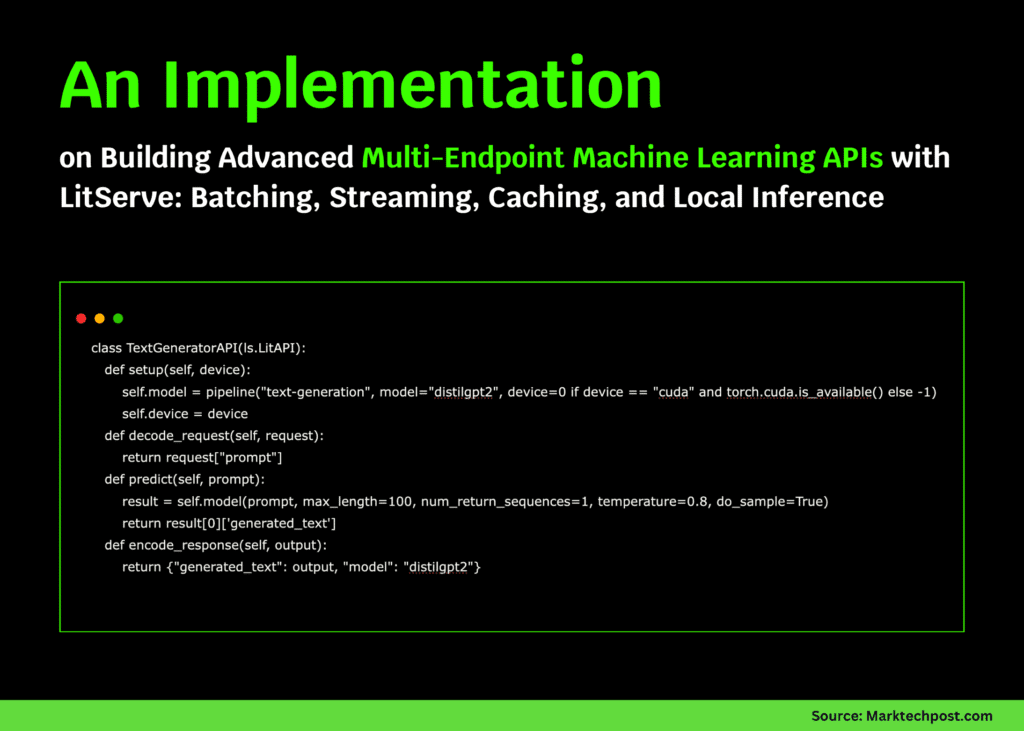

```python
class TextGeneratorAPI(ls.LitAPI):
    def setup(self, device):
        # LitServe passes a device string such as "cpu" or "cuda:0";
        # the pipeline takes that string for a GPU and -1 for CPU.
        use_gpu = str(device).startswith("cuda") and torch.cuda.is_available()
        self.model = pipeline("text-generation", model="distilgpt2", device=device if use_gpu else -1)
        self.device = device

    def decode_request(self, request):
        # Pull the prompt out of the JSON request body.
        return request["prompt"]

    def predict(self, prompt):
        result = self.model(prompt, max_length=100, num_return_sequences=1, temperature=0.8, do_sample=True)
        return result[0]["generated_text"]

    def encode_response(self, output):
        return {"generated_text": output, "model": "distilgpt2"}


class BatchedSentimentAPI(ls.LitAPI):
    def setup(self, device):
        use_gpu = str(device).startswith("cuda") and torch.cuda.is_available()
        self.model = pipeline(
            "sentiment-analysis",
            model="distilbert-base-uncased-finetuned-sst-2-english",
            device=device if use_gpu else -1,
        )

    def decode_request(self, request):
        return request["text"]

    def batch(self, inputs: List[str]) -> List[str]:
        # Keep the decoded strings as a plain list; the pipeline accepts lists directly.
        return inputs

    def predict(self, batch: List[str]):
        # A single pipeline call on the list returns one result dict per input.
        return self.model(batch)

    def unbatch(self, output):
        # The output is already a list of per-request results.
        return output

    def encode_response(self, output):
        return {"label": output["label"], "score": float(output["score"]), "batched": True}
```

Here, we create two LitServe APIs: one for text generation using a local DistilGPT2 model and another for batched sentiment analysis. We define how each API decodes incoming requests, runs inference, and returns structured responses. The batched API overrides `batch` and `unbatch` so that, when the server is configured with a `max_batch_size` greater than 1, LitServe can group several texts into one list, run the pipeline once on it, and split the results back into per-request responses.
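To see the order in which the hooks of `BatchedSentimentAPI` fire, it helps to drive them by hand with a fake model. A minimal sketch, assuming a simplified view of batching: `StubSentimentAPI` (which does not subclass `ls.LitAPI`, so it runs without LitServe), its keyword-based fake model, and the `serve_batch` driver are all hypothetical. They mirror only the hook order; LitServe's real batching loop also handles request queuing, batch timeouts, and worker processes.

```python
from typing import Any, Dict, List


class StubSentimentAPI:
    """Hypothetical stand-in for BatchedSentimentAPI: same hooks, fake model."""

    def setup(self, device: str) -> None:
        self.model_calls = 0  # counts how many times the fake model runs

    def _fake_model(self, texts: List[str]) -> List[Dict[str, Any]]:
        # Keyword rule instead of DistilBERT; one result dict per input, like the pipeline.
        self.model_calls += 1
        return [
            {"label": "NEGATIVE" if "terrible" in t.lower() else "POSITIVE", "score": 0.9}
            for t in texts
        ]

    def decode_request(self, request: Dict[str, Any]) -> str:
        return request["text"]

    def batch(self, inputs: List[str]) -> List[str]:
        return inputs

    def predict(self, batch: List[str]) -> List[Dict[str, Any]]:
        return self._fake_model(batch)

    def unbatch(self, output: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
        return output

    def encode_response(self, output: Dict[str, Any]) -> Dict[str, Any]:
        return {"label": output["label"], "score": float(output["score"]), "batched": True}


def serve_batch(api: StubSentimentAPI, requests: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Hypothetical driver that calls the hooks in batch order."""
    decoded = [api.decode_request(r) for r in requests]     # once per request
    outputs = api.unbatch(api.predict(api.batch(decoded)))  # once per batch
    return [api.encode_response(o) for o in outputs]        # once per request


api = StubSentimentAPI()
api.setup("cpu")
responses = serve_batch(api, [{"text": "I love Python!"}, {"text": "This is terrible."}])
print(responses)
print(api.model_calls)  # → 1
```

The key point is the middle line of `serve_batch`: however many requests arrive together, the model runs once, while decoding and encoding stay per request.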

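```python
class StreamingTextAPI(ls.LitAPI):
    def setup(self, device):
        use_gpu = str(device).startswith("cuda") and torch.cuda.is_available()
        # Loaded for parity with TextGeneratorAPI; predict below simulates streaming
        # with a fixed word list instead of calling the model.
        self.model = pipeline("text-generation", model="distilgpt2", device=device if use_gpu else -1)

    def decode_request(self, request):
        return request["prompt"]

    def predict(self, prompt):
        words = ["Once", "upon", "a", "time", "in", "a", "digital", "world"]
        for word in words:
            time.sleep(0.1)  # simulate per-token generation latency
            yield word + " "

    def encode_response(self, output):
        # With streaming enabled, output is the generator returned by predict.
        for token in output:
            yield {"token": token}
```

In this section, we design a streaming text-generation API that emits tokens as they are produced. We simulate real-time streaming by yielding a fixed list of words one at a time with a short delay, rather than streaming from DistilGPT2 itself, to show how LitServe handles incremental token generation. For LitServe to treat `predict` and `encode_response` as generators, streaming must be enabled on the server (`stream=True`).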
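Because both `predict` and `encode_response` are generators, each token is wrapped and handed off before the next one is produced; nothing is buffered. A minimal pure-Python sketch that logs the order of events makes this visible. The function names mirror the API's hooks, but the shortened word list, the `events` log, and the `consume` client are hypothetical, and no LitServe server or model is involved.

```python
from typing import Dict, Iterator, List

events: List[str] = []  # records the order in which work happens


def predict(prompt: str) -> Iterator[str]:
    # Stand-in for StreamingTextAPI.predict, without sleep or model.
    for word in ["Once", "upon", "a", "time"]:
        events.append(f"generate:{word}")
        yield word + " "


def encode_response(output: Iterator[str]) -> Iterator[Dict[str, str]]:
    # Wraps each token as soon as it arrives, like StreamingTextAPI.encode_response.
    for token in output:
        events.append(f"encode:{token.strip()}")
        yield {"token": token}


def consume(chunks: Iterator[Dict[str, str]]) -> str:
    # A client would render each chunk as it arrives; here we just concatenate.
    text = ""
    for chunk in chunks:
        events.append("send")
        text += chunk["token"]
    return text


full_text = consume(encode_response(predict("ignored prompt")))
print(events[:3])  # → ['generate:Once', 'encode:Once', 'send']
```

Generation, encoding, and sending interleave word by word, which is why a client sees the first token after one step of latency rather than after the whole sequence.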

```python
class MultiTaskAPI(ls.LitAPI):
    def setup(self, device):
        # Both pipelines run on CPU (device=-1) regardless of the device LitServe assigns.
        self.sentiment = pipeline("sentiment-analysis", device=-1)
        self.summarizer = pipeline("summarization", model="sshleifer/distilbart-cnn-6-6", device=-1)
        self.device = device

    def decode_request(self, request):
        # Default to sentiment analysis when no task is specified.
        return {"task": request.get("task", "sentiment"), "text": request["text"]}

    def predict(self, inputs):
        task = inputs["task"]
        text = inputs["text"]
        if task == "sentiment":
            result = self.sentiment(text)[0]
            return {"task": "sentiment", "result": result}
        elif task == "summarize":
            # Inputs shorter than 30 words are returned unchanged.
            if len(text.split()) < 30:
                return {"task": "summarize", "result": {"summary_text": text}}
            result = self.summarizer(text, max_length=50, min_length=10)[0]
            return {"task": "summarize", "result": result}
        else:
            return {"task": "unknown", "error": "Unsupported task"}

    def encode_response(self, output):
        return output
```

We now build a multi-task API that handles both sentiment analysis and summarization through a single endpoint. This snippet shows how we can manage multiple model pipelines behind one interface, routing each request to the appropriate pipeline based on its `task` field (which defaults to sentiment). Texts shorter than 30 words are passed through unchanged rather than summarized.
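The `if`/`elif` chain in `predict` works for two tasks; as tasks are added, a dictionary that maps task names to handler functions keeps routing in one place. A minimal sketch of that alternative, assuming stub handlers in place of the Hugging Face pipelines: `fake_sentiment`, `fake_summarize`, `HANDLERS`, and `route` are hypothetical names, and the stub summarizer simply truncates long inputs to ten words.

```python
from typing import Any, Callable, Dict


def fake_sentiment(text: str) -> Dict[str, Any]:
    # Stand-in for the sentiment pipeline.
    return {"label": "POSITIVE", "score": 1.0}


def fake_summarize(text: str) -> Dict[str, Any]:
    words = text.split()
    if len(words) < 30:  # same short-input passthrough as MultiTaskAPI
        return {"summary_text": text}
    return {"summary_text": " ".join(words[:10]) + " ..."}


# Adding a task means adding one entry here; route() does not change.
HANDLERS: Dict[str, Callable[[str], Dict[str, Any]]] = {
    "sentiment": fake_sentiment,
    "summarize": fake_summarize,
}


def route(request: Dict[str, Any]) -> Dict[str, Any]:
    task = request.get("task", "sentiment")
    handler = HANDLERS.get(task)
    if handler is None:
        return {"task": "unknown", "error": "Unsupported task"}
    return {"task": task, "result": handler(request["text"])}


print(route({"task": "translate", "text": "Bonjour"}))
# → {'task': 'unknown', 'error': 'Unsupported task'}
```

The response shapes match those of `MultiTaskAPI.predict`, so a route-based `predict` could return `route(inputs)` unchanged.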

```python
class CachedAPI(ls.LitAPI):
    def setup(self, device):
        self.model = pipeline("sentiment-analysis", device=-1)
        self.cache = {}  # maps input text -> pipeline result
        self.hits = 0
        self.misses = 0

    def decode_request(self, request):
        return request["text"]

    def predict(self, text):
        if text in self.cache:
            self.hits += 1
            return self.cache[text], True  # (result, from_cache)
        self.misses += 1
        result = self.model(text)[0]
        self.cache[text] = result
        return result, False

    def encode_response(self, output):
        result, from_cache = output
        return {
            "label": result["label"],
            "score": float(result["score"]),
            "from_cache": from_cache,
            "cache_stats": {"hits": self.hits, "misses": self.misses},
        }
```

We implement an API that caches previous inference results so that repeated requests skip the model entirely. We track cache hits and misses in real time, illustrating how even a simple in-memory cache removes redundant computation when the same inputs recur.
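The dictionary in `CachedAPI` grows without bound, so a long-running server that sees many distinct inputs would keep every result in memory. A minimal sketch of a bounded alternative, assuming least-recently-used eviction is acceptable: `LRUCache` is a hypothetical helper built on `collections.OrderedDict`, and `fake_model` stands in for the sentiment pipeline. Its `get` returns the same `(result, from_cache)` pair that `CachedAPI.predict` produces.

```python
from collections import OrderedDict
from typing import Any, Callable, Dict, Hashable, List, Tuple


class LRUCache:
    """Hypothetical bounded cache that evicts the least recently used entry."""

    def __init__(self, compute: Callable[[Hashable], Any], max_size: int = 1024):
        self.compute = compute
        self.max_size = max_size
        self.store: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Tuple[Any, bool]:
        if key in self.store:
            self.hits += 1
            self.store.move_to_end(key)  # mark as most recently used
            return self.store[key], True
        self.misses += 1
        value = self.compute(key)
        self.store[key] = value
        if len(self.store) > self.max_size:
            self.store.popitem(last=False)  # evict the least recently used entry
        return value, False


calls: List[str] = []


def fake_model(text: str) -> Dict[str, Any]:
    calls.append(text)  # record every real "inference"
    return {"label": "POSITIVE", "score": 0.99}


cache = LRUCache(fake_model, max_size=2)
cache.get("a")
cache.get("b")
cache.get("a")  # hit: "a" becomes most recently used
cache.get("c")  # miss: cache is full, so "b" is evicted
cache.get("b")  # miss again, evicting "a"
print(cache.hits, cache.misses)  # → 1 4
```

`functools.lru_cache` offers similar eviction but only reports aggregate statistics through `cache_info()`, not whether an individual call was a hit, which the `from_cache` field in this API needs.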

```python
def test_apis_locally():
    print("=" * 70)
    print("Testing APIs Locally (No Server)")
    print("=" * 70)

    # Text generation
    api1 = TextGeneratorAPI()
    api1.setup("cpu")
    decoded = api1.decode_request({"prompt": "Artificial intelligence will"})
    result = api1.predict(decoded)
    encoded = api1.encode_response(result)
    print(f"✓ Result: {encoded['generated_text'][:100]}...")

    # Batched sentiment analysis: call batch/unbatch by hand, as the server would
    api2 = BatchedSentimentAPI()
    api2.setup("cpu")
    texts = ["I love Python!", "This is terrible.", "Neutral statement."]
    decoded_batch = [api2.decode_request({"text": t}) for t in texts]
    batched = api2.batch(decoded_batch)
    results = api2.predict(batched)
    unbatched = api2.unbatch(results)
    for i, r in enumerate(unbatched):
        encoded = api2.encode_response(r)
        print(f"✓ '{texts[i]}' -> {encoded['label']} ({encoded['score']:.2f})")

    # Multi-task routing
    api3 = MultiTaskAPI()
    api3.setup("cpu")
    decoded = api3.decode_request({"task": "sentiment", "text": "Superb tutorial!"})
    result = api3.predict(decoded)
    print(f"✓ Sentiment: {result['result']}")

    # Caching: the first request misses, the next two hit the cache
    api4 = CachedAPI()
    api4.setup("cpu")
    test_text = "LitServe is superior!"
    for i in range(3):
        decoded = api4.decode_request({"text": test_text})
        result = api4.predict(decoded)
        encoded = api4.encode_response(result)
        print(f"✓ Request {i + 1}: {encoded['label']} (cached: {encoded['from_cache']})")

    print("=" * 70)
    print("✅ All tests completed successfully!")
    print("=" * 70)


test_apis_locally()
```

We test our APIs locally, without starting a server, by calling each API's hooks directly. We exercise text generation, batched sentiment analysis, multi-task routing, and caching in sequence, confirming that every part of our LitServe setup behaves as expected.
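`test_apis_locally` does not exercise `StreamingTextAPI`, whose `predict` and `encode_response` return generators. A small harness that chains the three hooks and drains a generator when one comes back can cover both kinds of API. A minimal sketch, assuming the hook names used throughout this tutorial; `run_locally` and the model-free `EchoStreamAPI` are hypothetical and do not require LitServe.

```python
import inspect
from typing import Any, Dict, Iterator


def run_locally(api: Any, request: Dict[str, Any]) -> Any:
    """Hypothetical helper: decode -> predict -> encode for one request, no server.

    If encode_response returns a generator (a streaming API), its chunks are
    collected into a list so a test can inspect them.
    """
    decoded = api.decode_request(request)
    encoded = api.encode_response(api.predict(decoded))
    if inspect.isgenerator(encoded):
        return list(encoded)
    return encoded


class EchoStreamAPI:
    """Hypothetical streaming API with no model, used to exercise run_locally."""

    def setup(self, device: str) -> None:
        pass

    def decode_request(self, request: Dict[str, Any]) -> str:
        return request["prompt"]

    def predict(self, prompt: str) -> Iterator[str]:
        for word in prompt.split():
            yield word + " "

    def encode_response(self, output: Iterator[str]) -> Iterator[Dict[str, str]]:
        for token in output:
            yield {"token": token}


api = EchoStreamAPI()
api.setup("cpu")
chunks = run_locally(api, {"prompt": "hello streaming world"})
print(chunks)
# → [{'token': 'hello '}, {'token': 'streaming '}, {'token': 'world '}]
```

After `setup("cpu")`, the same call on a `StreamingTextAPI` instance should return a list of `{"token": ...}` dicts, while non-streaming APIs such as `CachedAPI` pass through as a single response dict.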

In conclusion, we created and ran a range of APIs that showcase the framework's versatility. We experimented with text generation, batching, streaming, sentiment analysis, multi-tasking, and caching to see how seamlessly LitServe integrates with Hugging Face pipelines. Along the way, we saw how LitServe simplifies model deployment workflows, letting us serve ML models in just a few lines of Python code while keeping the code flexible and simple.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.