Yandex has launched ARGUS (AutoRegressive Generative User Sequential modeling), a large-scale transformer-based framework for recommender systems that scales up to one billion parameters. This breakthrough places Yandex among a small group of global technology leaders, alongside Google, Netflix, and Meta, that have successfully overcome the long-standing technical barriers to scaling recommender transformers.

Breaking Technical Barriers in Recommender Systems

Recommender systems have long struggled with three stubborn constraints: short-term memory, limited scalability, and poor adaptability to shifting user behavior. Conventional architectures trim user histories down to a small window of recent interactions, discarding months or years of behavioral data. The result is a shallow view of intent that misses long-term habits, subtle shifts in taste, and seasonal cycles. As catalogs grow into the billions of items, these truncated models not only lose precision but also choke on the computational demands of personalization at scale. The outcome is familiar: stale recommendations, lower engagement, and fewer opportunities for serendipitous discovery.

Very few companies have successfully scaled recommender transformers beyond experimental setups. Google, Netflix, and Meta have invested heavily in this area, and the field has reported gains from architectures such as YouTubeDNN, Pinterest’s PinnerFormer, and Meta’s Generative Recommenders. With ARGUS, Yandex joins this select group of companies demonstrating billion-parameter recommender models in live services. By modeling entire behavioral timelines, the system uncovers both obvious and hidden correlations in user activity. This long-horizon perspective allows ARGUS to capture evolving intent and cyclical patterns with far greater fidelity. For example, instead of reacting only to a recent purchase, the model learns to anticipate seasonal behaviors, such as automatically surfacing the user’s preferred brand of tennis balls when summer approaches, without requiring the user to repeat the same signals year after year.

Technical Innovations Behind ARGUS

The framework introduces several key advances:

- Dual-objective pre-training: ARGUS decomposes autoregressive learning into two subtasks, next-item prediction and feedback prediction. This combination improves both imitation of historical system behavior and modeling of true user preferences.

- Scalable transformer encoders: Models scale from 3.2M to 1B parameters, with consistent performance improvements across all metrics. At the billion-parameter scale, pairwise accuracy uplift reached 2.66%, demonstrating the emergence of a scaling law for recommender transformers.

- Extended context modeling: ARGUS handles user histories up to 8,192 interactions long in a single pass, enabling personalization over months of behavior rather than just the last few clicks.

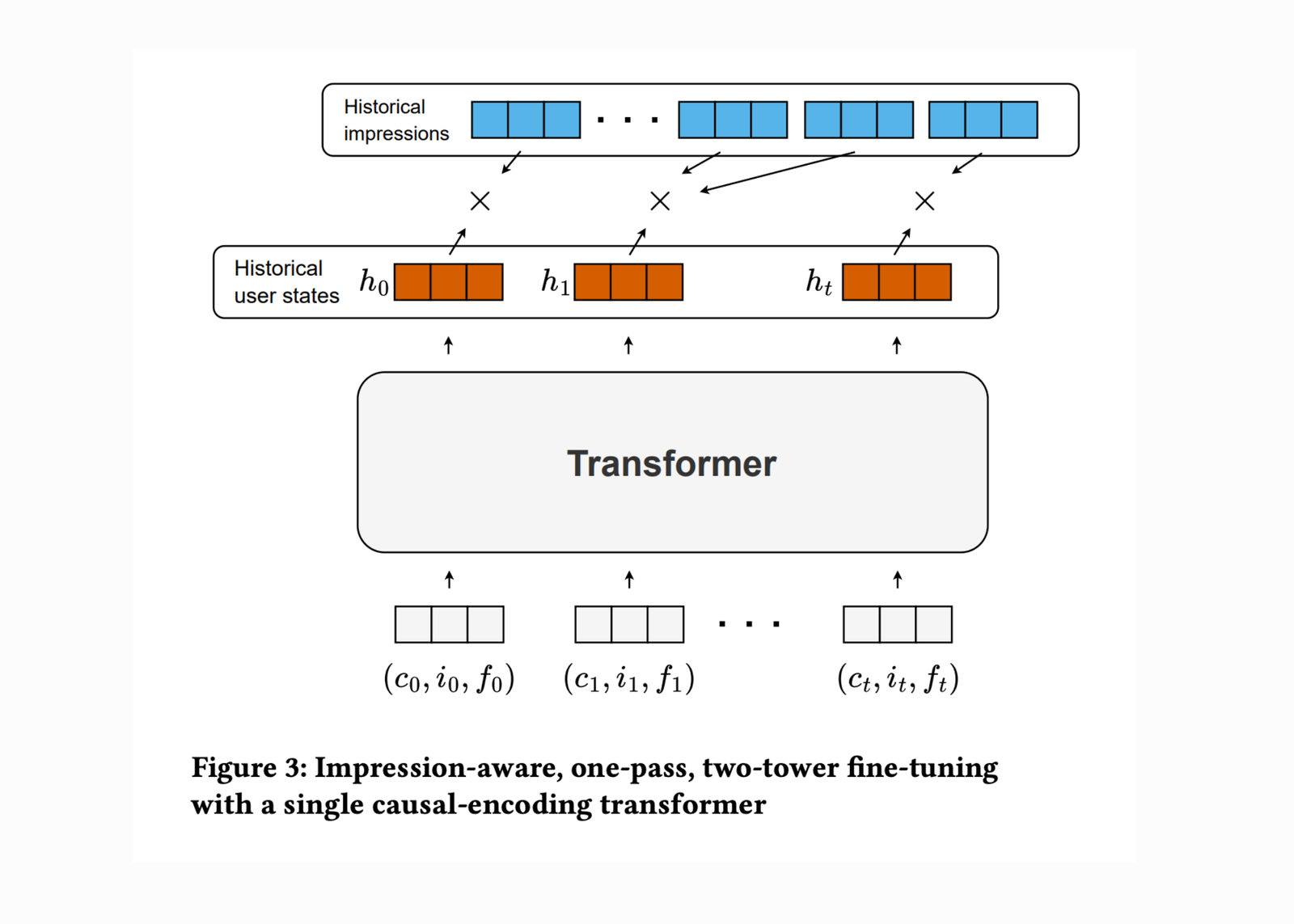

- Efficient fine-tuning: A two-tower architecture enables offline computation of embeddings and scalable deployment, reducing inference cost relative to prior target-aware or impression-level online models.
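
Two ideas from the list above, the dual-objective loss and two-tower scoring, can be illustrated with a toy sketch in plain Python. This is not Yandex's implementation: the function names, the equal weighting of the two losses (`feedback_weight`), and the tiny embeddings are all assumptions made for illustration. It only shows how a next-item loss and a feedback loss might be combined, and why precomputed item embeddings make serving cheap.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def next_item_loss(item_logits, target_item):
    """Cross-entropy for predicting which item comes next in the history."""
    return -math.log(softmax(item_logits)[target_item])

def feedback_loss(feedback_logit, liked):
    """Binary cross-entropy for predicting the user's reaction to an item."""
    p = sigmoid(feedback_logit)
    return -math.log(p) if liked else -math.log(1.0 - p)

def dual_objective_loss(item_logits, target_item, feedback_logit, liked,
                        feedback_weight=1.0):
    """Weighted sum of the two subtask losses (the weighting is an assumption)."""
    return (next_item_loss(item_logits, target_item)
            + feedback_weight * feedback_loss(feedback_logit, liked))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def rank_items(user_embedding, item_embeddings):
    """Two-tower scoring: item vectors are computed offline, so serving
    reduces to one dot product per candidate item."""
    scores = {item_id: dot(user_embedding, vec)
              for item_id, vec in item_embeddings.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical inputs: three candidate items, the first is the true next item,
# and the user reacted positively to it.
loss = dual_objective_loss([2.0, 0.5, -1.0], target_item=0,
                           feedback_logit=1.2, liked=True)

# Hypothetical 2-dimensional embeddings for one user and two tracks.
ranking = rank_items([1.0, 0.0],
                     {"track_a": [0.9, 0.1], "track_b": [0.2, 0.8]})
```

In this sketch the user tower and item tower never interact until the final dot product, which is what allows item embeddings to be cached offline. A target-aware model would instead need a forward pass per user-item pair at serving time.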

Real-World Deployment and Measured Gains

ARGUS has already been deployed at scale on Yandex’s music platform, serving millions of users. In production A/B tests, the system achieved:

- +2.26% increase in total listening time (TLT)

- +6.37% increase in like probability

These are the largest recorded quality improvements in the platform’s history for any deep learning-based recommender model.

Future Directions

Yandex researchers plan to extend ARGUS to real-time recommendation tasks, explore feature engineering for pairwise ranking, and adapt the framework to high-cardinality domains such as large e-commerce and video platforms. The demonstrated ability to scale user-sequence modeling with transformer architectures suggests that recommender systems are poised to follow a scaling trajectory similar to that of natural language processing.

Conclusion

With ARGUS, Yandex has established itself as one of the few global leaders driving state-of-the-art recommender systems. By openly sharing its breakthroughs, the company is not only improving personalization across its own services but also accelerating the evolution of recommendation technologies for the entire industry.

Check out the paper. Thanks to the Yandex team for the thought leadership and resources for this article.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.